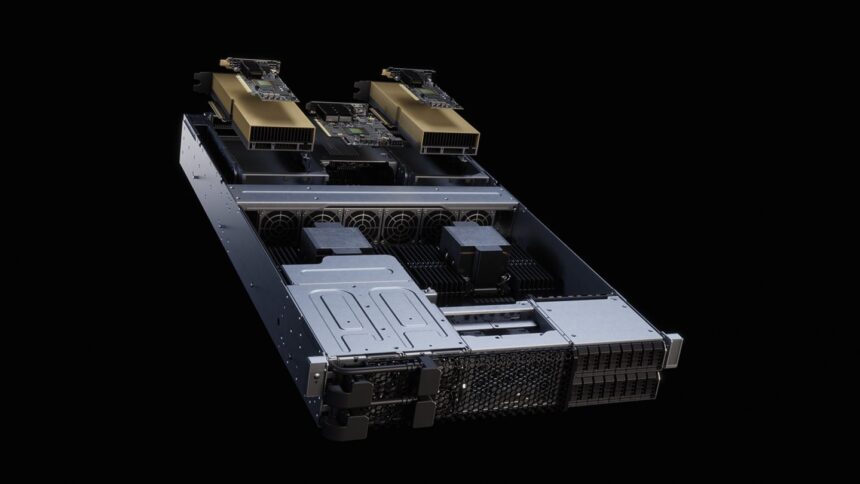

NVIDIA’s new RTX PRO 6000 Blackwell Server Edition GPU will soon be available in enterprise servers.

Systems from Cisco, Dell Technologies, HPE, Lenovo, and Supermicro will ship in various configurations as 2U servers. NVIDIA says they will offer higher performance and efficiency for AI, graphics, simulation, analytics, and industrial applications, and support tasks such as AI model training, content creation, and scientific research.

“AI is reinventing computing for the first time in 60 years: what started in the cloud is now transforming the architecture of on-premises data centres,” said Jensen Huang, founder and CEO of NVIDIA. “With the world’s leading server providers, we’re making NVIDIA Blackwell RTX PRO Servers the standard platform for enterprise and industrial AI.”

GPU acceleration for enterprise workloads

Millions of servers are sold each year for enterprise operations, and most still rely on ‘traditional’ CPUs. The new RTX PRO Servers add GPU acceleration to these systems, boosting performance in analytics, simulation, video processing, and rendering. According to NVIDIA, the Server Edition GPU can deliver up to 45 times better performance than CPU-only systems, with 18 times higher energy efficiency.

The RTX PRO line is aimed at companies building “AI factories” where space, power, and cooling may be limited. The servers also provide the infrastructure for NVIDIA’s AI Data Platform for storage systems. Dell, for example, is updating its AI Data Platform to use NVIDIA’s design; its PowerEdge R7725 server comes with two RTX PRO 6000 GPUs, NVIDIA AI Enterprise software, and NVIDIA networking.

The new 2U servers, which can house up to eight GPUs, were among those announced in May at COMPUTEX.

Blackwell architecture features

The new servers are built around NVIDIA’s Blackwell architecture, which includes:

- Fifth-generation Tensor Cores and a second-generation Transformer Engine with FP4 precision, capable of running inference up to six times faster than the L40S GPU.

- Fourth-generation RTX technology for photorealistic rendering, with up to four times the performance of the L40S GPU.

- Virtualisation and NVIDIA Multi-Instance GPU (MIG) technology, allowing four separate workloads per GPU.

- Improved energy efficiency for lower data centre power use.

For physical AI and robotics

NVIDIA’s Omniverse libraries and Cosmos world foundation models on RTX PRO Servers can run digital twin simulations, robot training routines, and large-scale synthetic data generation. The servers also support NVIDIA Metropolis blueprints for video search and summarisation and vision language models, among other tools for use in physical environments.

NVIDIA has updated its Omniverse and Cosmos offerings, with new Omniverse SDKs and added compatibility with MuJoCo (MJCF) and Universal Scene Description (OpenUSD). The company says this will allow over 250,000 MJCF developers to run robot simulations on its platforms. New Omniverse NuRec libraries bring ray-traced 3D Gaussian splatting for reconstructing models from sensor data, while the updated Isaac Sim 5.0 and Isaac Lab 2.2 frameworks, available on GitHub, add neural rendering and new OpenUSD-based schemas for robots and sensors.

NuRec rendering is already integrated into the CARLA autonomous vehicle simulator and is being adopted by companies such as Foretellix, which uses it to generate synthetic AV testing data. Voxel51’s FiftyOne data engine, used by automakers including Ford and Porsche, now supports NuRec. Boston Dynamics, Figure AI, Hexagon, and Amazon Devices & Services are among those already adopting the libraries and frameworks.

Cosmos world foundation models (WFMs) have been downloaded over two million times. The software helps generate synthetic training data for robots from text, image, or video prompts. The new Cosmos Transfer-2 model speeds up image data generation from simulation scenes and spatial inputs such as depth maps. Companies including Lightwheel, Moon Surgical, and Skild AI have begun producing training data at scale with Cosmos Transfer-2.

NVIDIA has also released Cosmos Reason, a 7-billion-parameter vision language model that helps robots and AI agents combine prior knowledge with an understanding of physics. It can automate dataset curation, support multi-step robot task planning, and run video analytics systems. NVIDIA’s own robotics and DRIVE teams use Cosmos Reason for data filtering and annotation, and Uber and Magna have deployed it in autonomous vehicles, traffic monitoring, and industrial inspection systems.

AI agents and large-scale deployments

RTX PRO Servers can run the newly announced Llama Nemotron Super model. Running with NVFP4 precision on a single RTX PRO 6000 GPU, the model delivers up to three times better price-performance than FP8 on NVIDIA’s H100 GPUs.

(Photo by NVIDIA)
