As graphics processing units (GPUs) have become essential to training and running AI workloads, a growing number of cloud service providers are now offering cloud GPU instances, meaning cloud servers equipped with GPUs. This is good news for organizations seeking to avoid the expense and complexity of deploying GPUs in their own hardware.

Yet, given the wide selection of GPU instances now available, figuring out which one best fits a particular workload can be a challenge. To provide guidance, this article unpacks the types of GPU instances available in today's clouds and the pros and cons of the various options.

What Is a Cloud GPU Instance?

A cloud GPU instance is a cloud server equipped with a GPU.

Businesses can “rent” cloud GPU instances in the same way that they can access any other type of cloud-based infrastructure-as-a-service (IaaS) resource: They select the instance they want from a cloud provider, launch it, and connect to it remotely.

Cloud GPU instances allow organizations to access GPUs, whose massive parallel processing power is valuable when training and deploying AI models, without having to purchase expensive GPU hardware outright or worry about setting up and maintaining it.

Platforms that offer cloud GPUs are sometimes known as GPU-as-a-service providers. Technically, though, not all GPU-as-a-service offerings are cloud GPU instances, because some (like GPU-over-IP options) provide access only to GPUs, not to entire cloud servers equipped with GPUs.

Types of Cloud GPU Instances

GPU-enabled cloud server instances can be categorized in several ways:

1. Hyperscale vs. specialized cloud providers

GPU instances are available from the large hyperscale cloud providers, like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). At the same time, a growing number of smaller cloud vendors specializing in GPU-enabled servers, like Lambda Labs and CoreWeave, are entering the market.

2. General-purpose vs. specialized instances

Some GPU cloud servers are configured to support a broad variety of workloads that can benefit from GPUs. Others target specific use cases, like training AI models or running models after they're trained.

Usually, the difference between server types boils down to the type of GPU inside the server, although other resources (like the amount of memory available on the server) can also be a factor.

3. Shared vs. dedicated servers

In some cases, GPU-enabled cloud servers are shared with other users. This means multiple companies can run workloads on the same server. In other cases, which are usually labeled “dedicated” or “bare-metal” GPU instances, each customer gets sole access to a server. The latter options are usually more expensive, but they can result in better performance because multiple workloads are not competing for the same resources.

Select a Cloud GPU

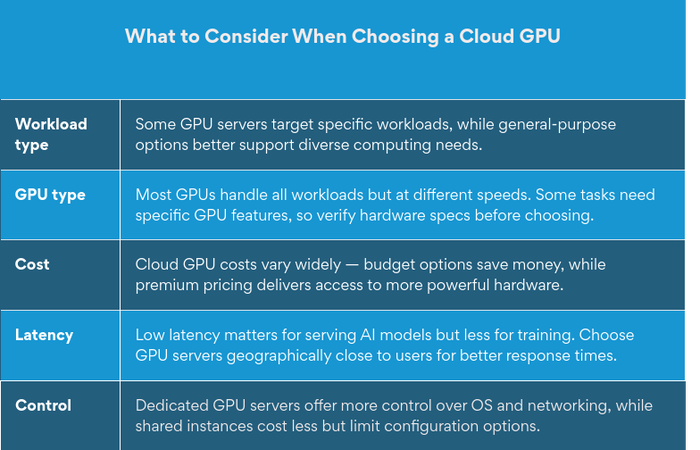

To decide which cloud GPU server is best for your needs, consider factors like the following:

- Workload type: As mentioned above, some cloud GPU servers are optimized for specific types of workloads, making them appealing if you need to run those types of workloads. If you need to support multiple types of workloads, consider a general-purpose cloud GPU.

- GPU type: In general, all GPU models can support all workloads that require GPUs. The difference lies in how fast they'll be. That said, certain types of workloads may require hardware features that are only available on certain GPUs; if that's the case, be sure to determine exactly which type of GPU a cloud server offers before committing to it.

- Cost: The cost of cloud GPUs varies widely. If you want to minimize your spend, consider a GPU instance that's optimized for cost. If performance is your top priority, you'll likely find that the more you pay, the more access you get to the most powerful GPUs.

- Latency: Latency (meaning the delay as data moves over the network) is important for some workloads that benefit from GPUs, like serving AI models (where the responsiveness of a model to users hinges on having minimal latency). It's less important for others, like model training (where network delays are not typically an issue). If you need to minimize latency, choose a cloud GPU server located as close as possible to the users or resources with which it will interact.

- Control: While all cloud GPU servers provide access to hardware equipped with GPUs, the level of control available to users varies. You'll typically get the most control from dedicated server instances available from specialized cloud GPU providers; shared GPU servers on hyperscale cloud platforms are usually cheaper but don't offer as many options in areas such as operating system and networking configuration.
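One way to apply the factors above is to treat some of them as hard requirements (workload type, GPU model, region, dedicated vs. shared) and then rank whatever remains by cost. The Python sketch below illustrates that idea only. The `GpuInstance` fields, the `shortlist` function, and every entry in `CATALOG` (names, GPU models, regions, and prices) are hypothetical placeholders, not real provider offerings or a real provider API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuInstance:
    """One candidate offering. All values used below are made up."""
    name: str
    gpu_model: str
    workloads: frozenset  # workload types the instance is suited to
    hourly_cost: float    # price per hour, in arbitrary units
    region: str
    dedicated: bool       # True for dedicated/bare-metal, False for shared


def shortlist(candidates, workload, region=None, need_dedicated=False,
              required_gpu=None):
    """Drop candidates that miss a hard requirement, then sort cheapest first."""
    matches = []
    for inst in candidates:
        if workload not in inst.workloads:
            continue  # not suited to this workload type
        if region is not None and inst.region != region:
            continue  # too far from users or data (latency)
        if need_dedicated and not inst.dedicated:
            continue  # caller wants sole access to the server
        if required_gpu is not None and inst.gpu_model != required_gpu:
            continue  # workload needs a specific GPU model
        matches.append(inst)
    return sorted(matches, key=lambda inst: inst.hourly_cost)


# Hypothetical catalog for illustration only.
CATALOG = [
    GpuInstance("shared-general", "gpu-a",
                frozenset({"training", "inference"}), 1.0, "us-east", False),
    GpuInstance("dedicated-train", "gpu-b",
                frozenset({"training"}), 4.0, "us-east", True),
    GpuInstance("shared-infer", "gpu-a",
                frozenset({"inference"}), 0.5, "eu-west", False),
]

if __name__ == "__main__":
    for inst in shortlist(CATALOG, "inference"):
        print(inst.name, inst.hourly_cost)
```

In practice, the catalog would come from each provider's published instance list, and cost might be weighed against performance rather than used as the only ranking key.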

Where to Find Cloud GPUs

Once you know which type of cloud GPU instance you want, you'll need to find a cloud provider that offers it.

Some GPU vendors, like NVIDIA, offer central portals that can connect businesses to multiple cloud providers offering GPU-enabled servers. The catch, of course, is that they link only to cloud partners within their ecosystems and to ones that offer their hardware.

If you choose not to find a cloud GPU instance through one of these hubs, you can connect to cloud providers directly. All of the major hyperscalers (AWS, Azure, GCP, IBM, and Alibaba) offer GPU-enabled servers. You can also find options from clouds specializing in GPUs, such as Lambda Labs, CoreWeave, Runpod, Vast.ai, and Paperspace (now part of DigitalOcean).