A technology that can recognize human emotions in real time has been developed by Professor Jiyun Kim and his research team in the Department of Materials Science and Engineering at UNIST. This innovative technology is poised to revolutionize various industries, including next-generation wearable systems that provide services based on emotions.

Understanding and accurately extracting emotional information has long been a challenge because of the abstract and ambiguous nature of human affects such as emotions, moods, and feelings. To address this, the research team has developed a multi-modal human emotion recognition system that combines verbal and non-verbal expression data to make efficient use of comprehensive emotional information.

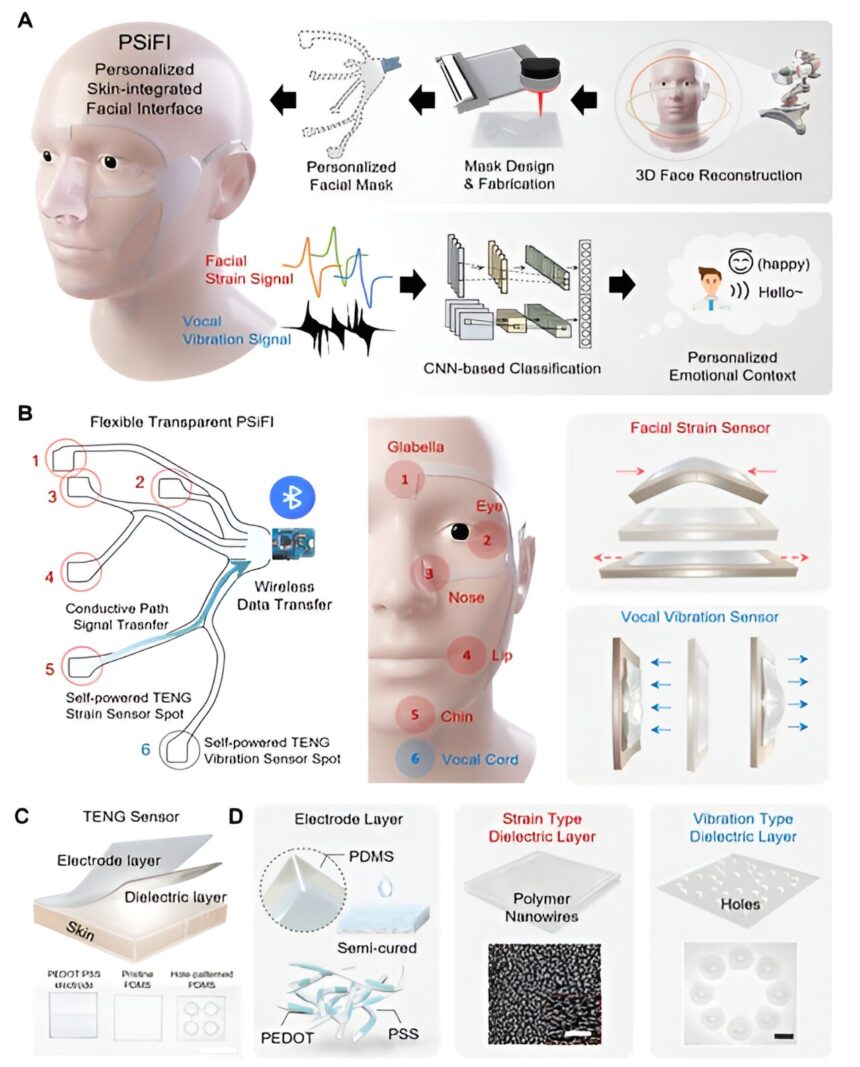

At the core of this system is the personalized skin-integrated facial interface (PSiFI) system, which is self-powered, facile, stretchable, and transparent. It features a first-of-its-kind bidirectional triboelectric strain and vibration sensor that enables the simultaneous sensing and integration of verbal and non-verbal expression data. The system is fully integrated with a data processing circuit for wireless data transfer, enabling real-time emotion recognition.

Using machine learning algorithms, the developed technology performs accurate, real-time human emotion recognition, even when individuals are wearing masks. The system has also been successfully applied in a digital concierge application within a virtual reality (VR) environment.

The technology is based on the phenomenon of “friction charging,” where objects separate into positive and negative charges upon friction. Notably, the system is self-generating, requiring no external power source or complex measuring devices for data recognition.

Professor Kim commented, “Based on these technologies, we have developed a skin-integrated face interface (PSiFI) system that can be customized for individuals.” The team used a semi-curing technique to manufacture a transparent conductor for the friction charging electrodes. Additionally, a personalized mask was created using a multi-angle shooting technique, combining flexibility, elasticity, and transparency.

The research team successfully integrated the detection of facial muscle deformation and vocal cord vibrations, enabling real-time emotion recognition. The system’s capabilities were demonstrated in a virtual reality “digital concierge” application, where customized services based on users’ emotions were provided.

Jin Pyo Lee, the first author of the study, stated, “With this developed system, it is possible to implement real-time emotion recognition with just a few learning steps and without complex measurement equipment. This opens up possibilities for portable emotion recognition devices and next-generation emotion-based digital platform services in the future.”

The research team conducted real-time emotion recognition experiments, collecting multimodal data such as facial muscle deformation and voice. The system exhibited high emotion recognition accuracy with minimal training. Its wireless and customizable nature ensures wearability and convenience.

Furthermore, the team applied the system to VR environments, using it as a “digital concierge” for various settings, including smart homes, private movie theaters, and smart offices. The system’s ability to identify individual emotions in different situations enables personalized recommendations for music, movies, and books.

Professor Kim emphasized, “For effective interaction between humans and machines, human-machine interface (HMI) devices must be capable of collecting diverse data types and handling complex integrated information. This study exemplifies the potential of using emotions, which are complex forms of human information, in next-generation wearable systems.”

The research is published in the journal Nature Communications.

More information:

Jin Pyo Lee et al, Encoding of multi-modal emotional information via personalized skin-integrated wireless facial interface, Nature Communications (2024). DOI: 10.1038/s41467-023-44673-2

Citation:

World’s first real-time wearable human emotion recognition technology developed (2024, February 22), retrieved 24 February 2024 from https://techxplore.com/information/2024-02-world-real-wearable-human-emotion.html
