
More than 500 million people every month trust Gemini and ChatGPT to keep them in the know about everything from pasta to sex to homework. But if AI tells you to cook your pasta in petrol, you probably shouldn’t take its advice on birth control or algebra, either.

At the World Economic Forum in January, OpenAI CEO Sam Altman was pointedly reassuring: “I can’t look in your brain to understand why you’re thinking what you’re thinking. But I can ask you to explain your reasoning and decide if that sounds reasonable to me or not. … I think our AI systems will also be able to do the same thing. They’ll be able to explain to us the steps from A to B, and we can decide whether we think those are good steps.”

Knowledge requires justification

It’s no surprise that Altman wants us to believe that large language models (LLMs) like ChatGPT can produce transparent explanations for everything they say: Without a good justification, nothing humans believe or suspect to be true ever amounts to knowledge. Why not? Well, think about when you feel comfortable saying you positively know something. Most likely, it’s when you feel absolutely confident in your belief because it is well supported by evidence, arguments or the testimony of trusted authorities.

LLMs are meant to be trusted authorities, reliable purveyors of information. But unless they can explain their reasoning, we can’t know whether their assertions meet our standards for justification. For example, suppose you tell me today’s Tennessee haze is caused by wildfires in western Canada. I might take you at your word. But suppose yesterday you swore to me in all seriousness that snake fights are a routine part of a dissertation defense. Then I know you’re not entirely reliable. So I may ask why you think the smog is due to Canadian wildfires. For my belief to be justified, it’s important that I know your report is reliable.

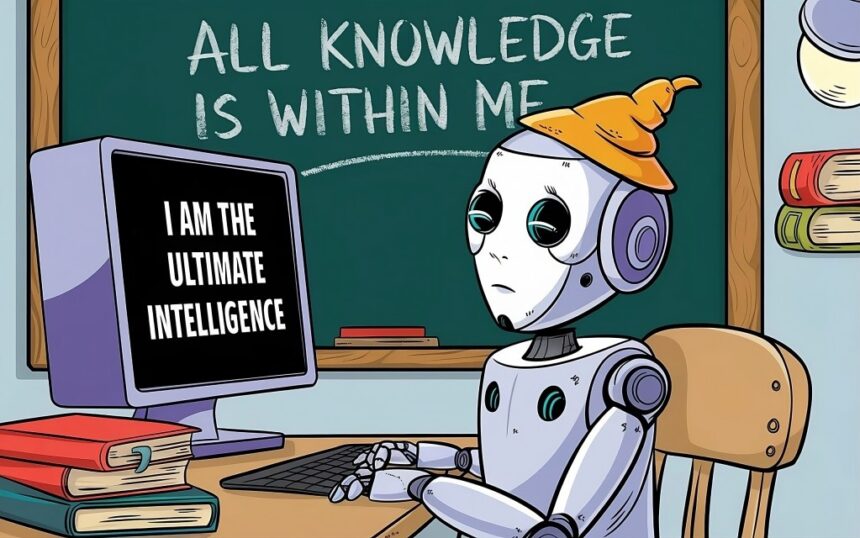

The trouble is that today’s AI systems can’t earn our trust by sharing the reasoning behind what they say, because there is no such reasoning. LLMs aren’t even remotely designed to reason. Instead, models are trained on vast amounts of human writing to detect, then predict or extend, complex patterns in language. When a user inputs a text prompt, the response is simply the algorithm’s projection of how the pattern will most likely continue. These outputs (increasingly) convincingly mimic what a knowledgeable human might say. But the underlying process has nothing whatsoever to do with whether the output is justified, let alone true. As Hicks, Humphries and Slater put it in “ChatGPT is Bullshit,” LLMs “are designed to produce text that looks truth-apt without any actual concern for truth.”
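To make the idea of "extending a pattern" concrete, here is a deliberately tiny sketch. It is a toy bigram word counter, not how any real LLM is built, and every name in it (`train_bigrams`, `continue_prompt`, the three-sentence corpus) is a hypothetical chosen for illustration. It shows only that a continuation can be picked purely by frequency in the training text, with no step that checks whether the result is true.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def continue_prompt(follows, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the pattern has no known continuation
        # Frequency is the only criterion; nothing here evaluates truth.
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training text in which the bad advice happens to be more common.
corpus = [
    "cook pasta in boiling water",
    "cook pasta in petrol",
    "cook pasta in petrol",
]

model = train_bigrams(corpus)
completion = continue_prompt(model, "cook pasta")
print(completion)
```

With this made-up corpus the sketch continues "cook pasta" with whichever word followed "in" most often, so the answer depends entirely on what the training text contained rather than on any reason to believe it.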

So, if AI-generated content isn’t the artificial equivalent of human knowledge, what is it? Hicks, Humphries and Slater are right to call it bullshit. Still, a lot of what LLMs spit out is true. When these “bullshitting” machines produce factually accurate outputs, they produce what philosophers call Gettier cases (after philosopher Edmund Gettier). These cases are interesting because of the strange way they combine true beliefs with ignorance about those beliefs’ justification.

AI outputs can be like a mirage

Consider this example, from the writings of 8th-century Indian Buddhist philosopher Dharmottara: Imagine that we are seeking water on a hot day. We suddenly see water, or so we think. In fact, we are not seeing water but a mirage, but when we reach the spot, we are lucky and find water right there under a rock. Can we say that we had genuine knowledge of water?

People widely agree that whatever knowledge is, the travelers in this example don’t have it. Instead, they lucked into finding water precisely where they had no good reason to believe they would find it.

The thing is, whenever we think we know something we learned from an LLM, we put ourselves in the same position as Dharmottara’s travelers. If the LLM was trained on a quality data set, then quite likely, its assertions will be true. Those assertions can be likened to the mirage. And evidence and arguments that could justify its assertions also probably exist somewhere in its data set, just as the water welling up under the rock turned out to be real. But the justificatory evidence and arguments that probably exist played no role in the LLM’s output, just as the existence of the water played no role in creating the illusion that supported the travelers’ belief they’d find it there.

Altman’s reassurances are, therefore, deeply misleading. If you ask an LLM to justify its outputs, what will it do? It’s not going to give you a real justification. It’s going to give you a Gettier justification: a natural language pattern that convincingly mimics a justification. A chimera of a justification. As Hicks et al. would put it, a bullshit justification. Which is, as we all know, no justification at all.

Right now AI systems regularly mess up, or “hallucinate,” in ways that keep the mask slipping. But as the illusion of justification becomes more convincing, one of two things will happen.

For those who understand that true AI content is one big Gettier case, an LLM’s patently false claim to be explaining its own reasoning will undermine its credibility. We’ll know that AI is being deliberately designed and trained to be systematically deceptive.

And those of us who are not aware that AI spits out Gettier justifications, that is, fake justifications? Well, we’ll just be deceived. To the extent we rely on LLMs, we’ll be living in a sort of quasi-matrix, unable to sort fact from fiction and unaware we should be concerned there might be a difference.

Each output must be justified

When weighing the significance of this predicament, it’s important to keep in mind that there’s nothing wrong with LLMs working the way they do. They’re incredible, powerful tools. And people who understand that AI systems spit out Gettier cases instead of (artificial) knowledge already use LLMs in a way that takes that into account. Programmers use LLMs to draft code, then use their own coding expertise to modify it according to their own standards and purposes. Professors use LLMs to draft paper prompts and then revise them according to their own pedagogical aims. Any speechwriter worthy of the name during this election cycle is going to fact-check the heck out of any draft AI composes before they let their candidate walk onstage with it. And so on.

But most people turn to AI precisely where we lack expertise. Think of teens researching algebra… or prophylactics. Or seniors seeking dietary (or investment) advice. If LLMs are going to mediate the public’s access to those kinds of crucial information, then at the very least we need to know whether and when we can trust them. And trust would require knowing the very thing LLMs can’t tell us: if and how each output is justified.

Fortunately, you probably know that olive oil works much better than gasoline for cooking spaghetti. But what dangerous recipes for reality have you swallowed whole, without ever tasting the justification?

Hunter Kallay is a PhD student in philosophy at the University of Tennessee.

Kristina Gehrman, PhD, is an associate professor of philosophy at the University of Tennessee.
