When AI data centres run out of space, they face a costly dilemma: build larger facilities or find ways to make multiple locations work together seamlessly. NVIDIA’s latest Spectrum-XGS Ethernet technology promises to solve this challenge by connecting AI data centres across vast distances into what the company calls “giga-scale AI super-factories.”

Announced ahead of Hot Chips 2025, this networking innovation represents the company’s answer to a growing problem that is forcing the AI industry to rethink how computational power gets distributed.

The problem: When one building isn’t enough

As artificial intelligence models become more sophisticated and demanding, they require enormous computational power that often exceeds what any single facility can provide. Traditional AI data centres face constraints in power capacity, physical space, and cooling capabilities.

When companies need more processing power, they typically have to build entirely new facilities, but coordinating work between separate locations has been problematic due to networking limitations. The issue lies in standard Ethernet infrastructure, which suffers from high latency, unpredictable performance fluctuations (known as “jitter”), and inconsistent data transfer speeds when connecting distant locations.

These problems make it difficult for AI systems to efficiently distribute complex calculations across multiple sites.

NVIDIA’s solution: Scale-across technology

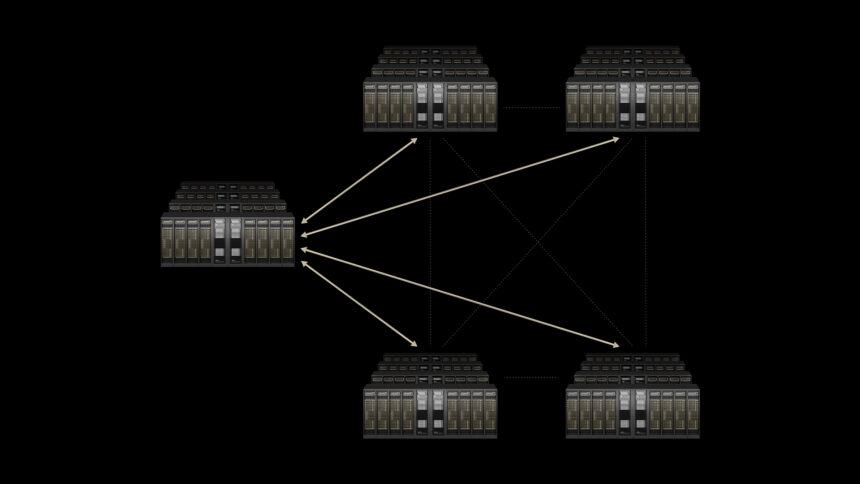

Spectrum-XGS Ethernet introduces what NVIDIA terms “scale-across” capability, a third approach to AI computing that complements existing “scale-up” (making individual processors more powerful) and “scale-out” (adding more processors within the same location) strategies.

The technology integrates into NVIDIA’s existing Spectrum-X Ethernet platform and includes several key innovations:

- Distance-adaptive algorithms that automatically adjust network behaviour based on the physical distance between facilities

- Advanced congestion control that prevents data bottlenecks during long-distance transmission

- Precision latency management to ensure predictable response times

- End-to-end telemetry for real-time network monitoring and optimisation
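One general reason congestion control has to account for distance can be illustrated with the bandwidth-delay product, a standard networking quantity rather than anything taken from NVIDIA’s announcement: the amount of data that must be in flight to keep a link fully busy grows linearly with round-trip time, so buffers and congestion windows sized for a single building are far too small across a regional link. The sketch below assumes a hypothetical 400 Gb/s link and illustrative round-trip times; it does not describe how Spectrum-XGS works internally.

```python
def bandwidth_delay_product_bytes(link_gbps: float, rtt_us: float) -> float:
    """Bytes that must be in flight to keep a link of link_gbps fully utilised at a round-trip time of rtt_us."""
    bits_per_second = link_gbps * 1e9
    rtt_seconds = rtt_us * 1e-6
    return bits_per_second * rtt_seconds / 8  # convert bits to bytes


# Hypothetical scenarios: the RTT values are illustrative assumptions, not measurements.
scenarios = {
    "same rack (~2 us RTT)": 2,
    "same campus (~20 us RTT)": 20,
    "sites ~100 km apart (~1,000 us RTT)": 1000,
}

for name, rtt_us in scenarios.items():
    bdp = bandwidth_delay_product_bytes(400, rtt_us)
    print(f"{name}: {bdp / 1e6:.1f} MB in flight at 400 Gb/s")
```

Moving from a rack to sites about 100 km apart raises the in-flight data requirement by a factor of roughly 500 at the same link speed, which is why behaviour tuned for short distances does not carry over unchanged.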

According to NVIDIA’s announcement, these improvements can “nearly double the performance of the NVIDIA Collective Communications Library,” which handles communication between multiple graphics processing units (GPUs) and computing nodes.

Real-world implementation

CoreWeave, a cloud infrastructure company specialising in GPU-accelerated computing, plans to be among the first adopters of Spectrum-XGS Ethernet.

“With NVIDIA Spectrum-XGS, we can connect our data centres into a single, unified supercomputer, giving our customers access to giga-scale AI that will accelerate breakthroughs across every industry,” said Peter Salanki, CoreWeave’s cofounder and chief technology officer.

This deployment will serve as a practical test of whether the technology can deliver on its promises in real-world conditions.

Industry context and implications

The announcement follows a series of networking-focused releases from NVIDIA, including the original Spectrum-X platform and Quantum-X silicon photonics switches. This pattern suggests the company sees networking infrastructure as a critical bottleneck in AI development.

“The AI industrial revolution is here, and giant-scale AI factories are the essential infrastructure,” said Jensen Huang, NVIDIA’s founder and CEO, in the press release. While Huang’s characterisation reflects NVIDIA’s marketing perspective, the underlying challenge he describes, the need for more computational capacity, is widely acknowledged across the AI industry.

The technology could change how AI data centres are planned and operated. Instead of building massive single facilities that strain local power grids and real estate markets, companies might distribute their infrastructure across multiple smaller locations while maintaining performance levels.

Technical considerations and limitations

However, several factors could limit Spectrum-XGS Ethernet’s practical effectiveness. Network performance across long distances remains subject to physical limitations, including the speed of light and the quality of the underlying network infrastructure between locations. The technology’s success will largely depend on how well it can work within these constraints.
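To put the speed-of-light constraint in numbers: light in optical fibre travels at roughly two-thirds of its vacuum speed, around 200,000 km/s, or about 5 µs per kilometre one way. The sketch below uses an approximate refractive index of 1.47 for silica fibre (an assumed typical value) and a few hypothetical site separations. Real fibre routes are longer than straight-line distance and add switching and queuing delay, so these figures are lower bounds that no networking technology can beat.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s
FIBRE_REFRACTIVE_INDEX = 1.47      # assumed typical value for silica fibre


def min_round_trip_us(distance_km: float, refractive_index: float = FIBRE_REFRACTIVE_INDEX) -> float:
    """Lower bound on round-trip propagation delay, in microseconds, over fibre of the given one-way length."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    one_way_s = distance_km / speed_km_s
    return 2 * one_way_s * 1e6  # there and back, converted to microseconds


# Hypothetical distances between facilities.
for km in (1, 10, 100, 1000):
    print(f"{km:>5} km: at least {min_round_trip_us(km):,.0f} us round trip")
```

Two sites 100 km apart therefore face close to a millisecond of round-trip delay from propagation alone, several hundred times the latency typical inside a single rack.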

Additionally, the complexity of managing distributed AI data centres extends beyond networking to include data synchronisation, fault tolerance, and regulatory compliance across different jurisdictions, challenges that networking improvements alone cannot solve.

Availability and market impact

NVIDIA states that Spectrum-XGS Ethernet is “available now” as part of the Spectrum-X platform, although pricing and specific deployment timelines have not been disclosed. The technology’s adoption rate will likely depend on its cost-effectiveness compared with alternative approaches, such as building larger single-site facilities or using existing networking solutions.

The bottom line for consumers and businesses is this: if NVIDIA’s technology works as promised, we could see faster AI services, more powerful applications, and potentially lower costs as companies gain efficiency through distributed computing. However, if the technology fails to deliver in real-world conditions, AI companies will continue to face the expensive choice between building ever-larger single facilities and accepting performance compromises.

CoreWeave’s upcoming deployment will serve as the first major test of whether connecting AI data centres across distances can actually work at scale. The results will likely determine whether other companies follow suit or stick with traditional approaches. For now, NVIDIA has presented an ambitious vision, but the AI industry is still waiting to see whether the reality matches the promise.
