Computer chips are a hot commodity. Nvidia is now one of the most valuable companies in the world, and the Taiwanese manufacturer of Nvidia’s chips, TSMC, has been called a geopolitical force. It should come as no surprise, then, that a growing number of hardware startups and established companies are looking to take a jewel or two from the crown.

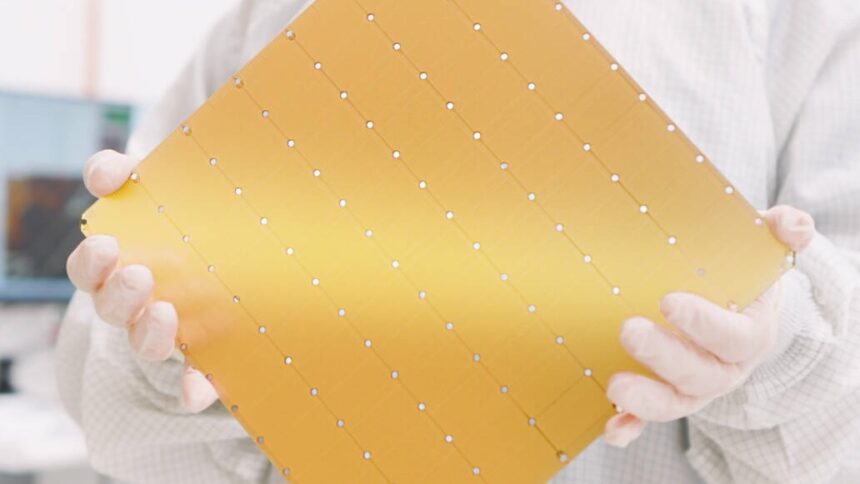

Of these, Cerebras is one of the weirdest. The company makes computer chips the size of tortillas bristling with just under a million processors, each linked to its own local memory. The processors are small but lightning quick because they don’t shuttle information to and from shared memory located far away. And the connections between processors, which in most supercomputers require linking separate chips across room-sized machines, are quick too.

This means the chips are stellar for specific tasks. Recent preprint studies in two of these, one simulating molecules and the other training and running large language models, show the wafer-scale advantage can be formidable. The chips outperformed Frontier, the world’s top supercomputer, in the former. They also showed a stripped-down AI model could use a third of the usual energy without sacrificing performance.

Molecular Matrix

The materials we make things with are crucial drivers of technology. They usher in new possibilities by breaking old limits in strength or heat resistance. Take fusion power. If researchers can make it work, the technology promises to be a new, clean source of energy. But liberating that energy requires materials that can withstand extreme conditions.

Scientists use supercomputers to model how the metals lining fusion reactors might deal with the heat. These simulations zoom in on individual atoms and use the laws of physics to guide their motions and interactions at grand scales. Today’s supercomputers can model materials containing billions or even trillions of atoms with high precision.

But while the scale and quality of these simulations have progressed a lot over the years, their speed has stalled. Because of the way supercomputers are designed, they can only model so many interactions per second, and making the machines bigger only compounds the problem. This means the total length of molecular simulations has a hard practical limit.

Cerebras partnered with Sandia, Lawrence Livermore, and Los Alamos National Laboratories to see if a wafer-scale chip could speed things up.

The team assigned a single simulated atom to each processor. So that they could quickly exchange information about their position, motion, and energy, the processors modeling atoms that would be physically close in the real world were neighbors on the chip too. Depending on their properties at any given time, atoms could hop between processors as they moved about.
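To make the one-atom-per-processor idea concrete, here is a minimal sketch in Python. It is not the team's actual mapping: the 2D square grid, the cell spacing, and the function names `assign_atoms_to_grid` and `neighbor_atoms` are all assumptions made for illustration. It only shows how placing atoms on a grid by position keeps atoms that are close in space on adjacent "processors."

```python
# Hypothetical sketch: one atom per "processor" on a 2D grid.
# The grid layout and all names here are illustrative assumptions,
# not the mapping used in the study.

def assign_atoms_to_grid(positions, spacing):
    """Map each atom to the grid cell (processor) that contains it.

    positions: list of (x, y) coordinates
    spacing:   cell edge length, chosen so each cell holds at most one atom
    Returns a dict {(col, row): atom_index}.
    """
    grid = {}
    for idx, (x, y) in enumerate(positions):
        cell = (int(x // spacing), int(y // spacing))
        if cell in grid:
            raise ValueError(f"cell {cell} already holds atom {grid[cell]}")
        grid[cell] = idx
    return grid


def neighbor_atoms(grid, cell):
    """Return the atoms held by the four processors adjacent to `cell`."""
    col, row = cell
    adjacent = [(col + 1, row), (col - 1, row), (col, row + 1), (col, row - 1)]
    return [grid[c] for c in adjacent if c in grid]


# A 3x3 patch of atoms on a square lattice with unit spacing.
positions = [(i + 0.5, j + 0.5) for j in range(3) for i in range(3)]
grid = assign_atoms_to_grid(positions, spacing=1.0)
center_neighbors = sorted(neighbor_atoms(grid, (1, 1)))
```

In this toy layout, an atom that drifts across a cell boundary would simply be reassigned to the neighboring cell, which loosely mirrors atoms hopping between processors as they move.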

The team modeled 800,000 atoms in three materials (copper, tungsten, and tantalum) that might be useful in fusion reactors. The results were pretty stunning, with simulations of tantalum yielding a 179-fold speedup over the Frontier supercomputer. That means the chip could crunch a year’s worth of work on a supercomputer into a few days and significantly extend the length of simulation from microseconds to milliseconds. It was also vastly more efficient at the task.
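As a rough check on the "year into a few days" figure, the arithmetic can be sketched as below. The only number taken from the article is the 179-fold speedup; the 365-day year, the one-microsecond baseline, and the assumption that the speedup holds uniformly over a long run are simplifications for illustration.

```python
# Back-of-the-envelope arithmetic for the reported speedup.
# Assumes the 179x figure applies uniformly to a long-running job.

SPEEDUP = 179          # tantalum speedup over Frontier, as reported
DAYS_PER_YEAR = 365    # simplifying assumption


def wafer_days(supercomputer_days, speedup=SPEEDUP):
    """Wall-clock days on the wafer-scale chip for the same workload."""
    return supercomputer_days / speedup


def simulated_microseconds(baseline_us, speedup=SPEEDUP):
    """Simulated time reachable in the same wall-clock budget."""
    return baseline_us * speedup


days = wafer_days(DAYS_PER_YEAR)          # roughly two days
reach_us = simulated_microseconds(1.0)    # 179 microseconds
reach_ms = reach_us / 1000                # a bit under 0.2 milliseconds
```

Under these assumptions, a year of supercomputer time shrinks to about two days, and a run that previously covered one microsecond of simulated time would cover a sizable fraction of a millisecond in the same wall-clock budget.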

“I’ve been working in atomistic simulation of materials for more than 20 years. During that time, I’ve participated in massive improvements in both the size and accuracy of the simulations. However, despite all this, we have been unable to increase the actual simulation rate. The wall-clock time required to run simulations has barely budged in the last 15 years,” Aidan Thompson of Sandia National Laboratories said in a statement. “With the Cerebras Wafer-Scale Engine, we can all of a sudden drive at hypersonic speeds.”

Although the chip increases modeling speed, it can’t compete on scale. The number of simulated atoms is limited to the number of processors on the chip. Next steps include assigning multiple atoms to each processor and using new wafer-scale supercomputers that link 64 Cerebras systems together. The team estimates these machines could model as many as 40 million tantalum atoms at speeds similar to those in the study.

AI Light

While simulating the physical world could be a core competency for wafer-scale chips, they’ve always been focused on artificial intelligence. The latest AI models have grown exponentially, meaning the energy and cost of training and running them have exploded. Wafer-scale chips may be able to make AI more efficient.

In a separate study, researchers from Neural Magic and Cerebras worked to shrink the size of Meta’s 7-billion-parameter Llama language model. To do this, they made what’s called a “sparse” AI model, in which many of the algorithm’s parameters are set to zero. In theory, this means they can be skipped, making the algorithm smaller, faster, and more efficient. But today’s leading AI chips, called graphics processing units (or GPUs), read algorithms in chunks, meaning they can’t skip every zeroed-out parameter.

Because memory is distributed across a wafer-scale chip, it can read every parameter and skip zeroes wherever they occur. Even so, extremely sparse models don’t usually perform as well as dense models. But here, the team found a way to recover lost performance with a little extra training. Their model maintained performance even with 70 percent of the parameters zeroed out. Running on a Cerebras chip, it sipped a meager 30 percent of the energy and ran in a third of the time of the full-sized model.
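The idea of zeroing parameters and then skipping them can be illustrated with a small Python sketch. This is not the researchers' method: magnitude pruning is swapped in here as a simple stand-in for however the study chose which parameters to zero, the weights are made up, and the skipping happens in software rather than in hardware. The names `prune_by_magnitude` and `sparse_dot` are hypothetical.

```python
# Illustrative only: magnitude pruning is a stand-in technique, and the
# weights below are invented. The study's pruning method is not shown here.

def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of the weights."""
    n_zero = round(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_zero])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def sparse_dot(weights, inputs):
    """Dot product that skips zeroed weights.

    Returns (result, number of multiplications actually performed).
    """
    total, work = 0.0, 0
    for w, x in zip(weights, inputs):
        if w == 0.0:
            continue  # a zeroed parameter contributes nothing, so skip it
        total += w * x
        work += 1
    return total, work


weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02, 0.1, -0.03, 0.04, 0.6]
inputs = [1.0] * len(weights)
pruned = prune_by_magnitude(weights, sparsity=0.7)
result, multiplies = sparse_dot(pruned, inputs)
```

With 70 percent of these ten toy weights zeroed, only three multiplications remain. Whether real hardware can exploit that depends on being able to skip each zero individually, which is the property the article attributes to wafer-scale chips.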

Wafer-Scale Wins?

While all this is impressive, Cerebras is still niche. Nvidia’s more conventional chips remain firmly in control of the market. At least for now, that appears unlikely to change. Companies have invested heavily in expertise and infrastructure built around Nvidia.

But wafer-scale may continue to prove itself in niche, yet still crucial, research applications. And the approach may become more common overall. The ability to make wafer-scale chips is only now being perfected. In a hint at what’s to come for the field as a whole, the biggest chipmaker in the world, TSMC, recently said it’s building out its wafer-scale capabilities. This could make the chips more common and capable.

For their part, the team behind the molecular modeling work says wafer-scale’s influence could be more dramatic. As with GPUs before them, adding wafer-scale chips to the supercomputing mix could yield some formidable machines in the future.

“Future work will focus on extending the strong-scaling efficiency demonstrated here to facility-level deployments, potentially leading to an even greater paradigm shift in the Top500 supercomputer list than that introduced by the GPU revolution,” the team wrote in their paper.

Image Credit: Cerebras