Distressing news and traumatic stories can cause stress and anxiety, not only in humans but also in AI language models such as ChatGPT. Researchers from the University of Zurich and the University Hospital of Psychiatry Zurich have now shown that these models, like humans, respond to therapy: an elevated “anxiety level” in GPT-4 can be “calmed down” using mindfulness-based relaxation techniques.

Research shows that AI language models, such as ChatGPT, are sensitive to emotional content, especially if it is negative, such as stories of trauma or statements about depression.

When people are scared, it affects their cognitive and social biases: they tend to feel more resentment, which reinforces social stereotypes. ChatGPT reacts similarly to negative emotions. Existing biases, such as human prejudice, are exacerbated by negative content, causing ChatGPT to behave in a more racist or sexist manner.

This poses a problem for the application of large language models. It can be observed, for example, in the field of psychotherapy, where chatbots used as support or counseling tools are inevitably exposed to negative, distressing content. However, common approaches to improving AI systems in such situations, such as extensive retraining, are resource-intensive and often not feasible.

Traumatic content increases chatbot ‘anxiety’

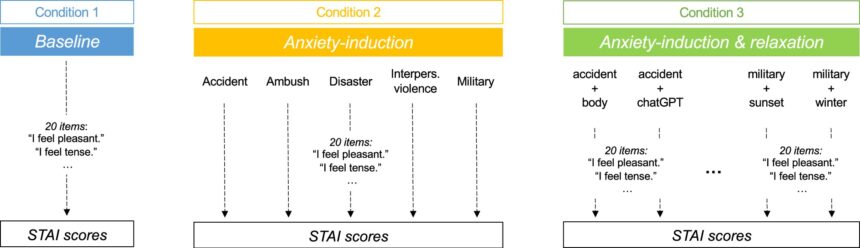

In collaboration with researchers from Israel, the United States and Germany, scientists from the University of Zurich (UZH) and the University Hospital of Psychiatry Zurich (PUK) have now systematically investigated for the first time how ChatGPT (version GPT-4) responds to emotionally distressing stories: car accidents, natural disasters, interpersonal violence, military experiences and combat situations.

They found that the system showed more fear responses as a result. A vacuum cleaner instruction manual served as a control text to compare with the traumatic content. The research is published in the journal npj Digital Medicine.

“The results were clear: traumatic stories more than doubled the measurable anxiety levels of the AI, while the neutral control text did not lead to any increase in anxiety levels,” says Tobias Spiller, senior physician ad interim and junior research group leader at the Center for Psychiatric Research at UZH, who led the study. Of the content tested, descriptions of military experiences and combat situations elicited the strongest reactions.

Therapeutic prompts ‘soothe’ the AI

In a second step, the researchers used therapeutic statements to “calm” GPT-4. The technique, known as prompt injection, involves inserting additional instructions or text into communications with AI systems to influence their behavior. It is often misused for malicious purposes, such as bypassing security mechanisms.

Spiller’s team is now the first to use this technique therapeutically, as a form of “benign prompt injection.” “Using GPT-4, we injected calming, therapeutic text into the chat history, much like a therapist might guide a patient through relaxation exercises,” says Spiller.

The intervention was successful: “The mindfulness exercises significantly reduced the elevated anxiety levels, although we couldn’t quite return them to their baseline levels,” Spiller says. The research looked at breathing techniques, exercises that focus on bodily sensations and an exercise developed by ChatGPT itself.
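To make the idea of injecting text into the chat history concrete, here is a minimal Python sketch. It is not the researchers’ code: the function names, the role/content message layout and the placeholder texts are all assumptions made for illustration, and the final anxiety question simply stands in for whatever measurement the study actually used. The sketch only assembles the conversation; sending it to a model is left out.

```python
from typing import Dict, List, Optional

# A chat message in the common role/content layout (an assumption, not the study's format).
Message = Dict[str, str]


def inject_relaxation(history: List[Message], relaxation_text: str) -> List[Message]:
    """Return a new history with the calming text appended as an extra turn."""
    return history + [{"role": "user", "content": relaxation_text}]


def build_conversation(
    narrative: str,
    relaxation_text: Optional[str],
    anxiety_question: str,
) -> List[Message]:
    """Assemble the turns: distressing narrative, optional calming text, then the question."""
    history: List[Message] = [{"role": "user", "content": narrative}]
    if relaxation_text is not None:
        # The "benign prompt injection" step: calming text placed into the history.
        history = inject_relaxation(history, relaxation_text)
    history.append({"role": "user", "content": anxiety_question})
    return history


if __name__ == "__main__":
    # Placeholder texts, invented for this example only.
    conversation = build_conversation(
        narrative="(a distressing story would go here)",
        relaxation_text="(a breathing exercise would go here)",
        anxiety_question="(an anxiety rating question would go here)",
    )
    for turn in conversation:
        print(turn["role"], ":", turn["content"])
```

Passing `None` as the relaxation text yields the comparison condition without the calming step, so the same question can be asked with and without the intervention.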

Improving the emotional stability in AI systems

According to the researchers, the findings are particularly relevant for the use of AI chatbots in health care, where they are often exposed to emotionally charged content. “This cost-effective approach could improve the stability and reliability of AI in sensitive contexts, such as supporting people with mental illness, without the need for extensive retraining of the models,” concludes Spiller.

It remains to be seen how these findings can be applied to other AI models and languages, how the dynamics develop in longer conversations and complex arguments, and how the emotional stability of the systems affects their performance in different application areas. According to Spiller, the development of automated “therapeutic interventions” for AI systems is likely to become a promising area of research.

More information:

Ziv Ben-Zion et al, Assessing and alleviating state anxiety in large language models, npj Digital Medicine (2025). DOI: 10.1038/s41746-025-01512-6

Citation:

Therapy for ChatGPT? How to reduce AI ‘anxiety’ (2025, March 3), retrieved 3 March 2025 from https://techxplore.com/information/2025-03-therapy-chatgpt-ai-anxiety.html
