When personal computers were first invented, only a small group of people who understood programming languages could use them. Today, anyone can look up the local weather, play their favorite song or even generate code with just a few keystrokes.

This shift has fundamentally changed how humans interact with technology, making powerful computational tools accessible to everyone. Now, advancements in artificial intelligence (AI) are extending this ease of interaction to the world of robotics through a platform called Text2Robot.

Developed by engineers at Duke University, Text2Robot is a novel computational robot design framework that allows anyone to design and build a robot simply by typing a few words describing what it should look like and how it should function. Its capabilities will be showcased at the upcoming IEEE International Conference on Robotics and Automation (ICRA 2025), taking place May 19–23 in Atlanta, Georgia.

Last year, the project won first place in the innovation category at the Virtual Creatures Competition, which has been held for 10 years at the Artificial Life conference in Copenhagen, Denmark. The team’s paper is available on the arXiv preprint server.

“Building a functional robot has traditionally been a slow and expensive process requiring deep expertise in engineering, AI and manufacturing,” said Boyuan Chen, the Dickinson Family Assistant Professor of Mechanical Engineering and Materials Science, Electrical and Computer Engineering, and Computer Science at Duke University. “Text2Robot is taking the initial steps toward drastically improving this process by allowing users to create functional robots using nothing but natural language.”

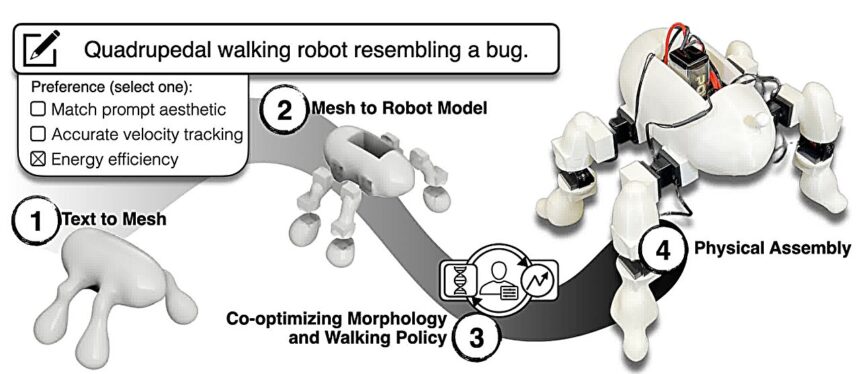

Text2Robot leverages emerging AI technologies to convert user text descriptions into physical robots. The process begins with a text-to-3D generative model, which creates a 3D physical design of the robot’s body based on the user’s description.

This basic body design is then converted into a moving robot model capable of carrying out tasks by incorporating real-world manufacturing constraints, such as the placement of electronic components and the functionality and placement of joints.

The system uses evolutionary algorithms and reinforcement learning to co-optimize the robot’s shape, movement abilities and control software, ensuring it can perform tasks efficiently and effectively.
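The outer evolutionary loop of such a co-design process can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Text2Robot implementation: the design parameters (`leg_length`, `body_width`), their ranges and the toy scoring function are hypothetical, and the `evaluate` function stands in for the expensive step in which a controller would be trained with reinforcement learning in simulation.

```python
import random

# Hypothetical design parameters and bounds; none of these come from Text2Robot.
LOWER, UPPER = 0.05, 0.30


def random_design(rng):
    """Sample a random candidate body design."""
    return {
        "leg_length": rng.uniform(LOWER, UPPER),
        "body_width": rng.uniform(LOWER, UPPER),
    }


def evaluate(design):
    """Toy fitness standing in for 'train a controller with RL, return its reward'.

    The score peaks at leg_length = 0.20 and body_width = 0.12 (arbitrary values).
    """
    return -((design["leg_length"] - 0.20) ** 2 + (design["body_width"] - 0.12) ** 2)


def mutate(design, rng, scale=0.02):
    """Perturb each parameter with Gaussian noise and clamp it to the bounds."""
    return {
        name: min(UPPER, max(LOWER, value + rng.gauss(0.0, scale)))
        for name, value in design.items()
    }


def evolve(generations=30, population_size=20, elite_count=5, seed=0):
    """Run a simple elitist evolutionary search over body designs."""
    rng = random.Random(seed)
    population = [random_design(rng) for _ in range(population_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        ranked = sorted(population, key=evaluate, reverse=True)
        history.append(evaluate(ranked[0]))
        elites = ranked[:elite_count]  # the best designs survive unchanged
        children = [
            mutate(rng.choice(elites), rng)
            for _ in range(population_size - elite_count)
        ]
        population = elites + children
    best = max(population, key=evaluate)
    return best, history


if __name__ == "__main__":
    best_design, fitness_history = evolve()
    print(best_design, fitness_history[-1])
```

Because the elite designs are carried over unchanged, the best score never gets worse from one generation to the next. In a real co-design setting each call to `evaluate` would be far more costly, which is why the population size and generation count matter.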

“This isn’t just about generating cool-looking robots,” said Ryan Ringel, co-first author of the paper and an undergraduate student in Chen’s laboratory. “The AI understands physics and biomechanics, producing designs that are actually functional and efficient.”

For example, if a user simply types a short description such as “a frog robot that tracks my velocity on command” or “an energy-efficient walking robot that looks like a dog,” Text2Robot generates a manufacturable robot design that resembles the specific request within minutes and has it walking in a simulation within an hour. In less than a day, a user can 3D-print, assemble and watch their robot come to life.

“This rapid prototyping capability opens up new possibilities for robot design and manufacturing, making it accessible to anyone with a computer, a 3D printer and an idea,” said Zachary Charlick, co-first author of the paper and an undergraduate student in the Chen lab. “The magic of Text2Robot lies in its ability to bridge the gap between imagination and reality.”

Text2Robot has the potential to revolutionize various aspects of our lives. Imagine children designing their own robot pets or artists creating interactive sculptures that can move and respond. At home, robots could be custom-designed to assist with chores, such as a trash can that navigates a home’s specific layout and obstacles to empty itself on command. In outdoor settings, such as a disaster response scenario, responders may need different types of robots that can complete various tasks under unexpected environmental conditions.

The framework currently focuses on quadrupedal robots, but future research will expand its capabilities to a broader range of robotic forms and integrate automated assembly processes to further streamline the design-to-reality pipeline.

“This is just the beginning,” said Jiaxun Liu, co-first author of the paper and a second-year Ph.D. student in Chen’s laboratory. “Our goal is to empower robots to not only understand and respond to human needs through their intelligent ‘brain,’ but also adapt their physical form and functionality to best meet those needs, offering a seamless integration of intelligence and physical capability.”

At the moment, the robots are limited to basic tasks like walking by tracking velocity commands or walking on rough terrain. But the team is looking into adding sensors and other gadgets to the platform, which would open the door to climbing stairs and avoiding dynamic obstacles.
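To make “tracking velocity commands” concrete, the sketch below shows one reward shape that is often used when training legged robots with reinforcement learning: the reward is highest when the measured velocity equals the commanded one and decays as the error grows. The article does not say which reward Text2Robot uses, so the function name, the exponential form and the `sigma` value are all assumptions made for illustration.

```python
import math


def velocity_tracking_reward(commanded, measured, sigma=0.25):
    """Return a reward in (0, 1]; it equals 1 when measured velocity matches the command.

    commanded, measured: (vx, vy) planar velocities in m/s.
    sigma: hypothetical scale that controls how quickly the reward decays.
    """
    squared_error = sum((c - m) ** 2 for c, m in zip(commanded, measured))
    return math.exp(-squared_error / sigma)


if __name__ == "__main__":
    command = (0.5, 0.0)  # walk forward at 0.5 m/s
    for measured in [(0.5, 0.0), (0.4, 0.0), (0.0, 0.0)]:
        print(measured, velocity_tracking_reward(command, measured))
```

A smooth reward of this kind gives the learning algorithm a useful signal even when the robot is far from the commanded velocity, which is one reason such shapes are popular.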

“The future of robotics is not just about machines; it’s about how humans and machines collaborate to shape our world,” added Chen. “By harnessing the power of generative AI, this work brings us closer to a future where robots are not just tools but partners in creativity and innovation.”

More information:

Ryan P. Ringel et al, Text2Robot: Evolutionary Robot Design from Text Descriptions, arXiv (2024). DOI: 10.48550/arxiv.2406.19963

Citation:

Text2Robot platform leverages generative AI to design and deliver functional robots with just a few spoken words (2025, April 10), retrieved 14 April 2025 from https://techxplore.com/information/2025-04-text2robot-platform-leverages-generative-ai.html
