The ACHILLES project helps organisations translate the EU AI Act's principles into lighter, clearer, safer machine learning across domains.

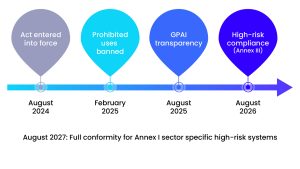

The EU’s AI Act is now in countdown mode. The first bans and transparency duties took effect in February and August 2025, respectively, and full obligations for high-risk systems are scheduled to arrive in August 2026 (with a one-year delay for those in Annex I). ACHILLES, a €9m Horizon Europe project launched last November, was formed to help innovators cross that compliance gap without sacrificing performance or sustainability.

Our first article in The Innovation Platform Issue 21 sketched out the ambition: a human-centric, energy-efficient machine learning (ML) approach guided by a shared legal and ethical ‘compass’. Eight months later, the multidisciplinary consortium is moving from vision to execution:

- Two legal and ethical landmarks among the deliverables: D4.1 Legal & Ethical Mapping and D4.4 Ethics Guidelines, which turn hundreds of pages of legislation into useful, actionable recommendations.

- After a round of technical requirements gathering and a legal workshop, the four pilot use cases are refining their problem statements and evaluation frameworks.

- The ACHILLES Integrated Development Environment (IDE) is starting to take shape, promising easier, more responsible AI development with clear documentation, which is essential for compliance and auditing workflows.

Real-world use cases

ACHILLES is being validated through four pilots spanning healthcare, security, creativity, and quality management.

Montaser Awal, Director of Research at IDnow, said: “ACHILLES represents a huge step forward in building privacy-preserving and regulation-compliant AI models. Less real data will be required to build reliable and accurate AI models for identity verification, while improving the quality and robustness of the algorithms.”

Marco Cuomo of Cuomo IT Consulting said: “ACHILLES provides a set of tools and reusable frameworks that enable pharma-driven, AI-based projects to focus on their domain-specific expertise. This significantly accelerates overall project timelines and facilitates compliant operations.”

Nuno Gonçalves from the Institute of Systems and Robotics at the University of Coimbra said: “A key aspect that the ACHILLES project will bring is the way research institutions can collaborate with industrial partners to improve ML models on both ends, collaboratively, while respecting the privacy and security rights of data owners.”

From rulebook to reality

With the EU AI Act guiding design choices across ACHILLES and its use cases, the consortium needed a rigorous legal framework early in the project. Deliverable ‘D4.1 Legal & Ethical Mapping’ aligns each relevant norm (AI Act, GDPR, Data Act, Medical Devices Regulation) with the ACHILLES IDE and the validation pilots. The companion deliverable, ‘D4.4 Ethics Guidelines’, turns that map into checklists, consent templates, and bias-audit scripts.

D4.1 presents the initial legal analysis of the European and international legal and ethical frameworks relevant to the project. Besides covering fundamental rights, AI regulation (with an emphasis on the EU AI Act), and privacy and data protection under the GDPR, it also examines broader European legislative instruments concerning data governance (Data Act, Data Governance Act, and Common European Data Spaces), information society services (Digital Services Act and Digital Markets Act), cybersecurity (NIS2 Directive, EU Cybersecurity Act, and EU Cyber Resilience Act), and sector-specific legal requirements (Medical Devices Regulation and intellectual property rights legislation). Ethical considerations round out the analysis, including the importance of informed consent for research participants, accuracy challenges associated with facial recognition and verification, algorithmic biases, hallucinations in generative AI, and broader concerns about trustworthiness. Together, these elements give partners an initial checklist of ‘what applies and why’, which can be refined as technical details emerge during the project.

To ensure that paper rules survive their first contact with AI researchers and engineers, KU Leuven’s CiTiP team held an internal workshop in June 2025. Each pilot completed a comprehensive legal questionnaire, enabling the session to drill down into specific decisions and gaps. The workshop, limited to project partners, gave them the opportunity to ask questions and better define how the different legal frameworks and requirements apply. Its results directly inform the next iteration of use-case definition and support the pilot execution phase. Similar workshops, including public ones, will follow during the project.

Although the confirmed risk level may change as the pilot design matures, we share here our provisional reading of the AI Act for each use case.

The ACHILLES IDE itself would be considered a limited-risk AI system under the AI Act, which means it must inform users that they are interacting with software rather than a human. Whenever the project handles personal data, especially biometric or health data, as in the pilots, the GDPR also applies. Because these data are classified as ‘special categories’, processing is permitted only under specific exceptions, such as explicit consent or use for scientific research, and it may trigger a mandatory Data Protection Impact Assessment (DPIA).

Although the initial analysis suggests that the Data Act, Data Governance Act, Digital Services Act, and Digital Markets Act may be less relevant to the project, the cybersecurity requirements of the EU Cyber Resilience Act could apply, particularly to the healthcare use case. Finally, ethical concerns, such as algorithmic biases, hallucinations, automation bias, and overall trustworthiness, must be carefully addressed. These concerns will be further refined and integrated as the project progresses and more information becomes available.
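To make the ‘what applies and why’ idea more concrete, the provisional reading above can be expressed as a handful of rule-of-thumb checks. The sketch below is a toy simplification, not legal advice and not the D4.1 methodology: the `UseCaseProfile` fields, the `preliminary_checklist` function, and the example pilot are all hypothetical, and real applicability depends on the detailed legal analysis.

```python
from dataclasses import dataclass


@dataclass
class UseCaseProfile:
    """Hypothetical summary of a pilot's answers to a legal questionnaire."""
    name: str
    interacts_with_users: bool           # users interact directly with the AI system
    personal_data: bool                  # any personal data is processed
    special_category_data: bool          # e.g. biometric or health data
    product_with_digital_elements: bool  # possible Cyber Resilience Act scope


def preliminary_checklist(uc: UseCaseProfile) -> list[str]:
    """Return a first 'what applies and why' list (toy rules, not legal advice)."""
    items = []
    if uc.interacts_with_users:
        items.append("AI Act (limited risk): tell users they are interacting with an AI system")
    # Special-category data is, by definition, also personal data.
    if uc.personal_data or uc.special_category_data:
        items.append("GDPR: identify a lawful basis for processing personal data")
    if uc.special_category_data:
        items.append("GDPR special categories: rely on an exception such as explicit consent or scientific research")
        items.append("GDPR: assess whether a DPIA is mandatory")
    if uc.product_with_digital_elements:
        items.append("Cyber Resilience Act: review the cybersecurity requirements")
    return items


# Example: a hypothetical healthcare-style pilot handling health data.
pilot = UseCaseProfile(
    name="healthcare-pilot",
    interacts_with_users=False,
    personal_data=True,
    special_category_data=True,
    product_with_digital_elements=True,
)
for item in preliminary_checklist(pilot):
    print("-", item)
```

Keeping the rules as plain, readable checks mirrors the iterative approach described here: each flag can be revisited as pilot designs mature, and the resulting list is only a starting point for legal review.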

The project follows an iterative compliance loop that incorporates legal, ethical, and technical requirements throughout the AI development lifecycle. The loop consists of four phases:

- Map, identify, and analyse applicable norms, obligations, and ethical guardrails.

- Design and build AI systems that are trustworthy, transparent, and compliant, with the support of the ACHILLES IDE.

- Conduct pilot tests in real-world settings to evaluate technical performance and compliance outcomes, validated through measurable KPIs.

- Refine and update the legal and ethical mapping based on pilot feedback and new developments, and improve the supporting tools, restarting the loop.

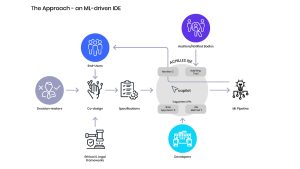

Compliance made easy: the ACHILLES IDE

Today, teams aiming to build EU-compliant AI must juggle regulations, spreadsheets, and 300-page PDFs. The ACHILLES Integrated Development Environment (IDE) combines specifications, code, documentation, and evidence in a single workspace, driven by a specification-first approach. This paradigm brings the rigour of well-documented software engineering: start with the business, legal, and ethical requirements, then let the IDE scaffold the code and evidence, with a powerful Copilot to guide you throughout.

The ACHILLES IDE acts as a foundation for unifying research results in the trustworthy AI space into coherent, compliant, and efficient development pipelines.

How it works: a hypothetical project

1. Using a set of (value-sensitive) co-design best practices, processes, and tools, define the project goals and, involving as many stakeholders as possible, draw up a set of specifications.

2. Based on the project description and specifications, the IDE’s Copilot iteratively makes recommendations at different stages of the project’s lifecycle, from compliance checklists to technical tools (e.g., a specific bias auditing method applicable to medical images). The framework’s innovative Standard Operating Procedures language (SOP Lang) enables flexible yet controllable workflows, designed for human and AI agents to collaborate. Moreover, every decision made by managers and developers is logged for improved transparency.

3. Monitor the training and get help building transparent, semi-automated documentation, such as Model Cards. After deployment, continue to monitor the system for drift and potential performance deterioration that may trigger the need for retraining.

4. Export evidence based on the semi-automated documentation and enable seamless audit trails, effectively bridging the gap between decision-makers, developers, end-users, and notified bodies.
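The article does not specify how the IDE will detect drift after deployment. As one possible approach, the sketch below swaps in the Population Stability Index (PSI), a common statistic for comparing the distribution of a feature or model score at training time with what the system sees in production. The function names and the 0.2 threshold are assumptions; 0.2 is a frequently cited rule of thumb, not an ACHILLES setting.

```python
import math


def population_stability_index(reference, live, bins=10, eps=1e-6):
    """PSI between two non-empty numeric samples, using equal-width bins
    over their combined range."""
    lo = min(min(reference), min(live))
    hi = max(max(reference), max(live))
    width = (hi - lo) / bins or 1.0  # avoid division by zero if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so that the maximum value falls into the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(reference)
    q = proportions(live)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def needs_retraining(reference, live, threshold=0.2):
    """Flag the model for review when drift exceeds an assumed PSI threshold."""
    return population_stability_index(reference, live) > threshold


reference_scores = [i / 100 for i in range(100)]   # scores seen at training time
live_scores = [s + 0.5 for s in reference_scores]  # simulated shift after deployment
print(round(population_stability_index(reference_scores, reference_scores), 3))
print(needs_retraining(reference_scores, live_scores))
```

In practice, a check like this would more likely run per feature or on model scores at regular intervals, with a positive result feeding the documentation and audit trail and prompting a human decision rather than triggering retraining automatically.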

Looking ahead

As the project nears the end of its first year, the use cases are finalising the pilot definitions, together with the evaluation framework for assessing the impact of ACHILLES on their workflows. The technical work is getting up to full speed with the various supporting toolkits (e.g., bias, explainability, robustness) and the implementation of the ACHILLES IDE.

Multidisciplinary alignment workshops are key, and new events are planned to accompany the ongoing research, some of them open to external participants. These sessions will explore topics such as explainability, human oversight, bias mitigation, and compliance verification.

In the background, ACHILLES is teaming up with the other CL4-2024-DATA-01-01 ‘AI-driven data operations and compliance technologies’ projects: ACCOMPLISH, CERTAIN, and DATA-PACT. The aim is to foster collaboration through joint workshops, shared open-source tooling, and cross-dissemination.

The stakes are clear: by the time the AI Act is fully enforceable, ACHILLES aims to show that trustworthy, greener AI is not a bureaucratic burden but a competitive edge for European innovators.

Disclaimer:

This project has received funding from the European Union’s Horizon Europe research and innovation programme under Grant Agreement No 101189689.


Please note: this article will also appear in the 23rd edition of our quarterly publication.