Rapt AI, a provider of AI-powered AI-workload automation for GPUs and AI accelerators, has teamed with AMD to enhance AI infrastructure.

The long-term strategic collaboration aims to improve AI inference and training workload management and performance on AMD Instinct GPUs, offering customers a scalable and cost-effective solution for deploying AI applications.

As AI adoption accelerates, organizations are grappling with resource allocation, performance bottlenecks, and complex GPU management.

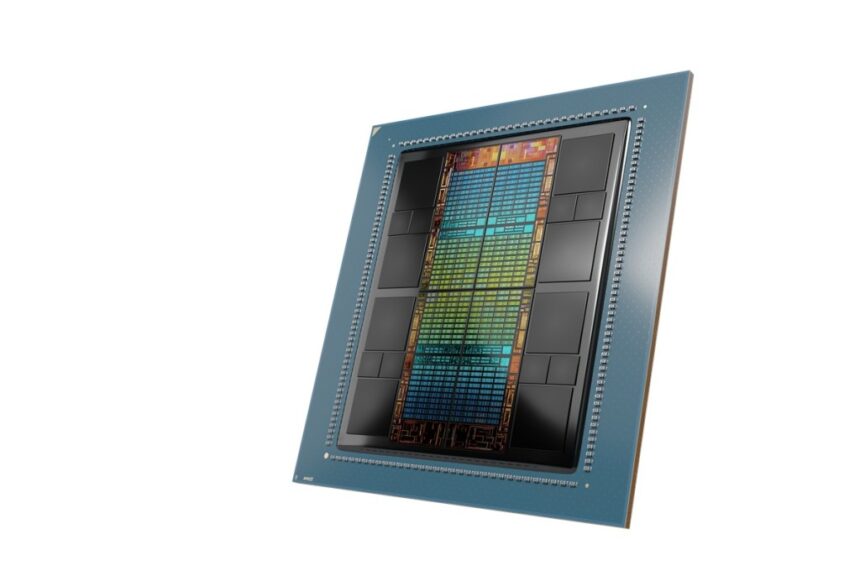

By integrating Rapt’s intelligent workload automation platform with AMD Instinct MI300X, MI325X and upcoming MI350 series GPUs, this collaboration delivers a scalable, high-performance, and cost-effective solution that enables customers to maximize AI inference and training efficiency across on-premises and multi-cloud infrastructures.

A more efficient solution

Charlie Leeming, CEO of Rapt AI, said in a press briefing, “The AI models we’re seeing today are so large and most importantly are so dynamic and unpredictable. The older tools for optimizing don’t really fit at all. We observed these dynamics. Enterprises are throwing lots of money. Hiring a new set of talent in AI. It’s one of these disruptive technologies. We have a situation where CFOs and CIOs are asking where is the return. In some cases, there may be tens of millions, hundreds of millions or billions of dollars spent on GPU-related infrastructure.”

Leeming said Anil Ravindranath, CTO of Rapt AI, saw the solution, which involved deploying monitors to enable observation of the infrastructure.

“We feel we have the right solution at the right time. We came out of stealth last fall. We are in a growing number of Fortune 100 companies. Two are running the code among cloud service providers,” Leeming said.

And he said, “We do have strategic partners but our conversations with AMD went extremely well. They’re building large GPUs, AI accelerators. We’re known for putting the maximum amount of workload on GPUs. Inference is taking off. It’s in production stage now. AI workloads are exploding. Their data scientists are running as fast as they can. They’re panicking, they need tools, they need efficiency, they need automation. It’s screaming for the right solution. Inefficiencies: 30% GPU underutilization. Customers do want flexibility. Large customers are asking if you support AMD.”

Improvements that might take nine hours can be done in three minutes, he said. Ravindranath said in a press briefing that the Rapt AI platform enables up to 10 times the model run capacity at the same AI compute spending level, up to 90% cost savings, zero humans in the loop, and no code changes. For productivity, this means no more waiting for compute and no more time spent tuning infrastructure.

Leeming said other techniques have been around for a while and haven’t cut it. Run AI, a rival, overlaps somewhat in a competitive way. He said his company observes in minutes instead of hours and then optimizes the infrastructure. Ravindranath said Run AI is more like a scheduler, while Rapt AI positions itself for unpredictable results and deals with them.

“We run the model and figure it out, and that’s a huge benefit for inference workloads. It should just automatically run,” Ravindranath said.

The benefits: lower costs, better GPU utilization

The companies said that AMD Instinct GPUs, with their industry-leading memory capacity, combined with Rapt’s intelligent resource optimization, help ensure maximum GPU utilization for AI workloads, helping lower total cost of ownership (TCO).

Rapt’s platform streamlines GPU management, eliminating the need for data scientists to spend valuable time on trial-and-error infrastructure configurations. By automatically optimizing resource allocation for their specific workloads, it empowers them to focus on innovation rather than infrastructure. It seamlessly supports diverse GPU environments (AMD and others, whether in the cloud, on premises or both) through a single instance, helping ensure maximum infrastructure flexibility.

The combined solution intelligently optimizes job density and resource allocation on AMD Instinct GPUs, resulting in better inference performance and scalability for production AI deployments. Rapt’s auto-scaling capabilities further help ensure efficient resource use based on demand, reducing latency and maximizing cost efficiency.

Rapt’s platform works out-of-the-box with AMD Instinct GPUs, helping ensure immediate performance benefits. Ongoing collaboration between Rapt and AMD will drive further optimizations in exciting areas such as GPU scheduling, memory utilization and more, helping ensure customers are equipped with a future-ready AI infrastructure.

“At AMD, we are committed to delivering high-performance, scalable AI solutions that empower organizations to unlock the full potential of their AI workloads,” said Negin Oliver, corporate vice president of business development for data center GPU business at AMD, in a statement. “Our collaboration with Rapt AI combines the cutting-edge capabilities of AMD Instinct GPUs with Rapt’s intelligent workload automation, enabling customers to achieve greater efficiency, flexibility, and cost savings across their AI infrastructure.”