Imagine sweeping your smartphone around an object and getting a realistic, fully editable 3D model that you can view from any angle. This is fast becoming reality, thanks to advances in AI.

Researchers at Simon Fraser University (SFU) in Canada have unveiled new AI technology for doing exactly this. Soon, rather than merely taking 2D photos, everyday consumers will be able to take 3D captures of real-life objects and edit their shapes and appearance as they wish, just as easily as they would with regular 2D photos today.

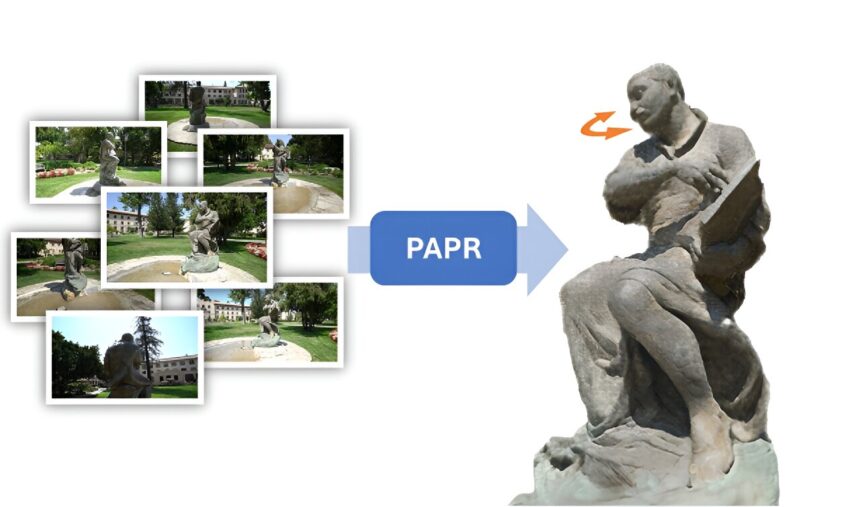

In a new paper appearing on the arXiv preprint server and presented at the 2023 Conference on Neural Information Processing Systems (NeurIPS) in New Orleans, Louisiana, researchers demonstrated a new technique called Proximity Attention Point Rendering (PAPR) that can turn a set of 2D photos of an object into a cloud of 3D points that represents the object’s shape and appearance.

Each point then gives users a knob for controlling the object: dragging a point changes the object’s shape, and editing the properties of a point changes the object’s appearance. Then, in a process known as “rendering,” the 3D point cloud can be viewed from any angle and turned into a 2D photo that shows the edited object as if the photo had been taken from that angle in real life.

Using the new AI technology, the researchers showed how a statue can be brought to life: the technology automatically converted a set of photos of the statue into a 3D point cloud, which was then animated. The end result is a video of the statue turning its head from side to side as the viewer is guided along a path around it.

“AI and machine learning are really driving a paradigm shift in the reconstruction of 3D objects from 2D images. The remarkable success of machine learning in areas like computer vision and natural language is inspiring researchers to investigate how traditional 3D graphics pipelines can be re-engineered with the same deep learning-based building blocks that were responsible for the runaway AI success stories of late,” said Dr. Ke Li, an assistant professor of computer science at Simon Fraser University (SFU), director of the APEX lab and the senior author on the paper.

“It turns out that doing so successfully is a lot harder than we anticipated and requires overcoming several technical challenges. What excites me the most is the many possibilities this brings for consumer technology: 3D may become as common a medium for visual communication and expression as 2D is today.”

One of the biggest challenges in 3D is how to represent 3D shapes in a way that lets users edit them easily and intuitively. One previous approach, known as neural radiance fields (NeRFs), does not allow for easy shape editing because it requires the user to describe what happens to every continuous coordinate. A more recent approach, known as 3D Gaussian splatting (3DGS), is also poorly suited to shape editing because the shape’s surface can get pulverized or torn to pieces after editing.

A key insight came when the researchers realized that, instead of treating each 3D point in the point cloud as a discrete splat, they could think of each one as a control point in a continuous interpolator. Then, when a point is moved, the shape changes automatically in an intuitive way. This is similar to how animators define the motion of objects in animated films: they specify the positions of objects at a few points in time, and an interpolator automatically generates the motion at every moment in between.
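The animator analogy can be made concrete with a small sketch. The Python below is a generic linear keyframe interpolator, not code from the PAPR paper; the function name `interpolate_keyframes` and the choice of straight-line interpolation between keyframes are illustrative assumptions. It shows the property described above: you set a handful of control values, and every in-between value follows automatically.

```python
from bisect import bisect_right


def interpolate_keyframes(keyframes, t):
    """Linearly interpolate a 3D position at time t from sorted keyframes.

    Illustrative sketch only (hypothetical helper, not from the paper).
    keyframes: list of (time, (x, y, z)) tuples sorted by time.
    Times before the first or after the last keyframe are clamped.
    """
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)      # index of the first keyframe strictly after t
    t0, p0 = keyframes[i - 1]
    t1, p1 = keyframes[i]
    alpha = (t - t0) / (t1 - t0)    # fraction of the way from keyframe i-1 to i
    return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))


# Usage: three keyframes; every in-between position is generated automatically.
keyframes = [(0.0, (0, 0, 0)), (1.0, (2, 0, 0)), (3.0, (2, 4, 0))]
print(interpolate_keyframes(keyframes, 0.5))  # (1.0, 0.0, 0.0)
print(interpolate_keyframes(keyframes, 2.0))  # (2.0, 2.0, 0.0)

# Moving one control point changes the in-between motion with no further input.
keyframes[1] = (1.0, (2, 2, 0))
print(interpolate_keyframes(keyframes, 0.5))  # (1.0, 1.0, 0.0)
```

Linear interpolation is the simplest possible choice; animation tools often use smoother curves, but the principle of "edit a few control points, get everything else for free" is the same.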

However, mathematically defining an interpolator between an arbitrary set of 3D points is not straightforward. The researchers formulated a machine learning model that learns the interpolator end-to-end using a novel mechanism known as proximity attention.
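To give a rough sense of what an attention-style interpolator over points does, here is a toy sketch in Python with NumPy. It is a hypothetical simplification, not PAPR’s architecture: the function `proximity_attention_interpolate`, the parameters `k` and `temperature`, and the use of plain negative distance as the attention score are all assumptions made for illustration, whereas the paper learns its interpolator end-to-end. Each query location blends the features of its nearest points with softmax weights, so closer points contribute more.

```python
import numpy as np


def proximity_attention_interpolate(query, points, features, k=4, temperature=1.0):
    """Blend per-point features at a 3D query location (toy sketch).

    Hypothetical simplification: attention scores are hand-set as negative
    distances to the k nearest points. PAPR instead learns its scores
    end-to-end; this function is not the paper's method.
    """
    query = np.asarray(query, dtype=float)        # (3,)
    points = np.asarray(points, dtype=float)      # (N, 3)
    features = np.asarray(features, dtype=float)  # (N, F)

    dists = np.linalg.norm(points - query, axis=1)  # distance from query to each point
    k = min(k, len(points))
    nearest = np.argsort(dists)[:k]                 # indices of the k closest points

    scores = -dists[nearest] / temperature          # closer points get higher scores
    weights = np.exp(scores - scores.max())         # numerically stable softmax
    weights /= weights.sum()

    return weights @ features[nearest]              # (F,) weighted blend of features


# Usage: two points carrying scalar features 0.0 and 1.0.
pts = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
feats = [[0.0], [1.0]]
print(proximity_attention_interpolate([0.5, 0.0, 0.0], pts, feats, k=2))  # equidistant, so [0.5]

# Drag the second point toward the query: its weight, and the output, increase.
moved = [[0.0, 0.0, 0.0], [0.4, 0.0, 0.0]]
print(proximity_attention_interpolate([0.5, 0.0, 0.0], moved, feats, k=2))
```

In this toy version, dragging a point toward a query location raises its weight there, which loosely mirrors the control-point behavior described above. A learned version would compute the scores with a trained network rather than a fixed distance formula.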

In recognition of this technological leap, the paper was selected as a spotlight at the NeurIPS conference, an honor reserved for the top 3.6% of paper submissions to the conference.

The research team is excited about what’s to come. “This opens the way to many applications beyond what we’ve demonstrated,” said Dr. Li. “We are already exploring various ways to leverage PAPR to model moving 3D scenes, and the results so far are incredibly promising.”

The authors of the paper are Yanshu Zhang, Shichong Peng, Alireza Moazeni and Ke Li. Zhang and Peng are co-first authors; Zhang, Peng and Moazeni are Ph.D. students at the School of Computing Science; and all are members of the APEX Lab at Simon Fraser University (SFU).

More information:

Yanshu Zhang et al, PAPR: Proximity Attention Point Rendering, arXiv (2023). DOI: 10.48550/arxiv.2307.11086

Citation:

New AI technology enables 3D capture and editing of real-life objects (2024, March 12), retrieved 13 March 2024 from https://techxplore.com/information/2024-03-ai-technology-enables-3d-capture.html
