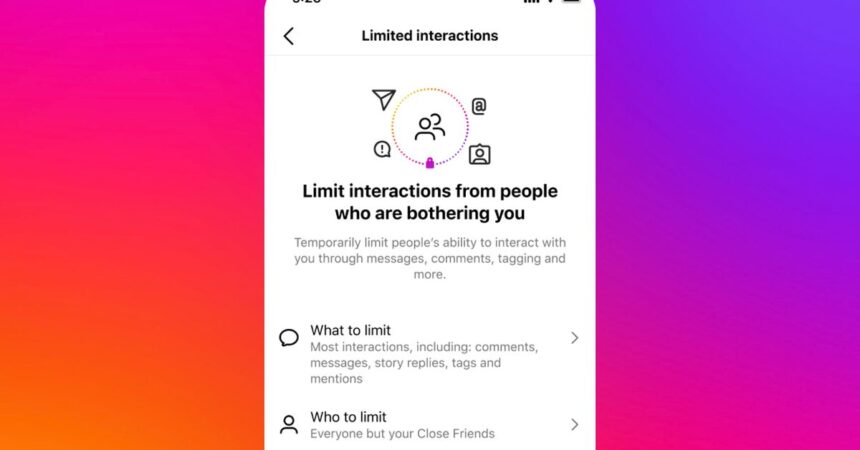

Instagram is expanding “Limits,” a safety control you can use to hide DMs and comments from accounts that may be harassing you. Now, instead of just hiding content from recent followers or people who don’t follow you, Instagram will mute incoming messages from everyone except the users on your Close Friends list.

Although Instagram first rolled out Limits to help creators deal with harassment campaigns, anyone can use the feature if they’re facing unwanted messages and comments from bullies and other bad actors. It can give you a way to shut down incoming noise without cutting people off from a supportive community.

When limiting interactions from everyone but Close Friends, you’ll only see DMs, tags, and mentions from the people on your list. Users not on your Close Friends list can still interact with your posts, but you won’t see those updates. These accounts also won’t know that you’ve hidden their content. You can also choose to view or ignore the limited comments and DMs.

Instagram lets you limit accounts for up to four weeks at a time, but you can extend it. You can enable Limits by tapping your profile, selecting the hamburger menu in the top-right corner of the screen, and choosing Limited interactions. From there, you can toggle on limits for Accounts that aren’t following you, Recent followers, or Everyone but your Close Friends.

Instagram is also building on its Restrict feature, which now lets you prevent a user from tagging or mentioning you in addition to hiding their comments. These expanded features come as Instagram faces increased scrutiny from the US government over the safety of its young users. Earlier this year, Meta rolled out a new feature that prevents adults from messaging minors on Instagram and Facebook by default. The company also moved to hide suicide and eating disorder content from teens on both of its platforms.