This article was originally published in AI Business.

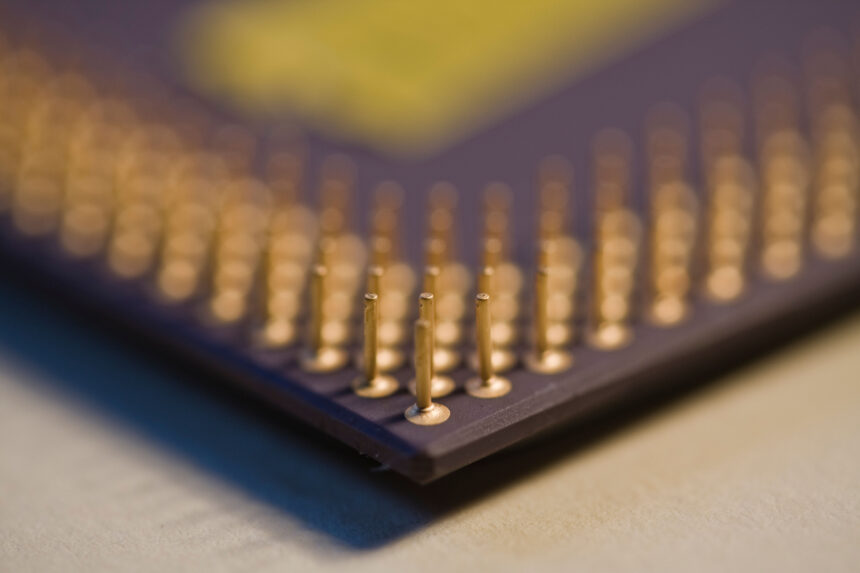

Nvidia currently leads the AI chip race, but AMD is making strides to close the gap.

Microsoft CTO Kevin Scott said recently at a conference that AMD is “making increasingly compelling GPU offerings” that could rival Nvidia. However, industry experts say AMD faces a tough challenge in the growing field of AI processors.

“Nvidia’s first and primary advantage arises from software that has been highly optimized to run on its AI chips,” Benjamin Lee, a professor in the Department of Electrical and Systems Engineering and the Department of Computer and Information Science at the University of Pennsylvania, said in an interview.

The race for chips

Since OpenAI introduced ChatGPT last year, there has been a surge of interest in large language models (LLMs) that demand huge computational resources. Although companies like Google, Amazon, Meta, and IBM have developed AI chips, Nvidia currently dominates with over 70% of AI chip sales, according to sister research firm Omdia.

One secret to Nvidia’s success is its software. The company developed CUDA, a platform for performing general-purpose computation on its graphics processors, Lee said. Nvidia’s CUDA software libraries are tightly integrated with the most popular machine learning frameworks, such as PyTorch. Another Nvidia advantage is its high-speed networking and the system architectures that connect multiple GPUs.

“These networks permit fast, efficient coordination when a model is too large to run on a single GPU, permitting the construction of larger, faster AI supercomputers,” he added.
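
To make the idea of spreading one model across several GPUs concrete, here is a minimal sketch of layer-wise (pipeline-style) partitioning. It is an illustration under stated assumptions, not how Nvidia or any particular framework does it: the function name, the layer sizes, and the 40GB usable capacity are all hypothetical, and real systems must also budget for activations and other runtime memory.

```python
from typing import List


def partition_layers(layer_sizes_gb: List[float], gpu_capacity_gb: float) -> List[List[int]]:
    """Greedily assign consecutive layers to GPUs so no GPU exceeds its capacity.

    Hypothetical helper: returns one list of layer indices per GPU.
    """
    gpus: List[List[int]] = []
    current: List[int] = []
    used = 0.0
    for i, size in enumerate(layer_sizes_gb):
        # A single layer larger than one GPU cannot be placed by this simple scheme.
        if size > gpu_capacity_gb:
            raise ValueError(f"layer {i} ({size} GB) does not fit on one GPU")
        # Start a new GPU when the next layer would overflow the current one.
        if used + size > gpu_capacity_gb:
            gpus.append(current)
            current, used = [], 0.0
        current.append(i)
        used += size
    if current:
        gpus.append(current)
    return gpus


# Hypothetical model: 8 layers of 12 GB each, on GPUs with 40 GB usable memory.
plan = partition_layers([12.0] * 8, gpu_capacity_gb=40.0)
print(len(plan), plan)
```

Here the model is split across three GPUs (layers 0 to 2, 3 to 5, and 6 to 7). Each GPU holds only its slice, so the intermediate results must travel over the interconnect from one stage to the next, which is why the speed of the network linking the GPUs matters.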

Nvidia’s H100, a GPU designed for AI applications that started shipping in September, has seen soaring demand. Companies of all sizes are scrambling to secure these chips, which are produced with an advanced manufacturing process and require intricate packaging that pairs the GPUs with high-bandwidth memory chips.

Industry leaders anticipate the H100 shortage to persist through 2024. The lack of supply poses challenges for AI startups and cloud services aiming to market computing services leveraging these new GPUs.

Meanwhile, other companies are trying to capitalize on the H100 shortfall. SambaNova Systems has rolled out the SN40L, a chip designed for LLMs. According to SambaNova, the SN40L can handle a 5-trillion-parameter model and sequence lengths of more than 256k tokens on a single system, delivering better model quality and faster results at a lower price than Nvidia’s offerings. The company also claims the chip’s large memory lets it juggle multiple tasks, such as searching, analyzing, and generating data, making it versatile across AI applications.

How AMD could catch up

Despite AMD’s slow start, observers say the company still has a chance to catch up with Nvidia. In June, AMD announced plans to begin testing the MI300X, a GPU built specifically for AI computations, with customers during the third quarter.

AMD has not yet announced a price for the new chip, but its arrival could push Nvidia to lower the cost of its GPUs, such as the H100, which often sells for more than $30,000. Cheaper GPUs could make running generative AI applications more affordable.

The MI300X can handle larger AI models than competing chips because it can use up to 192GB of memory. For comparison, Nvidia’s H100 has 80GB of memory.

Large AI models need a lot of memory because every one of their parameters must be stored while the model runs. AMD demonstrated the MI300X running a Falcon model with 40 billion parameters. By comparison, OpenAI’s GPT-3 model has 175 billion parameters.
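
A rough way to see why memory capacity matters is that the weights alone take (number of parameters) × (bytes per parameter). The sketch below assumes 16-bit weights (2 bytes each) and counts 1GB as 10^9 bytes. It deliberately ignores activations, the cache used during text generation, and framework overhead, so real requirements are higher. The helper names are illustrative, and the 192GB figure is the MI300X capacity cited above.

```python
import math

# Assumed storage cost per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed just to store the model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(num_params: float, gpu_memory_gb: float, dtype: str = "fp16") -> int:
    """Lower bound on GPUs needed to hold the weights (ignores all other memory use)."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


for name, params in [("Falcon-40B", 40e9), ("GPT-3", 175e9)]:
    gb = weight_memory_gb(params)
    print(f"{name}: {gb:.0f} GB of fp16 weights, at least {min_gpus(params, 192)} x 192 GB GPU(s)")
```

Under these assumptions, a 40-billion-parameter model needs about 80GB just for its weights and fits on one 192GB GPU, while a 175-billion-parameter model needs about 350GB and must be split across at least two.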

However, hardware alone may not be enough for AMD to seize a larger market share. Applying neuroscience principles to AI now makes it possible to run LLMs on CPUs with better performance, lower energy consumption, and substantial cost savings, Subutai Ahmad, CEO of Numenta, said in an interview.

“AMD should incorporate these techniques into their software stack in order to compete with Nvidia,” he added.

One advantage AMD already has is ROCm, its open-source software stack, which helps machine learning workloads run efficiently on its chips. But ROCm is far less widely used than CUDA, Lee noted. He said software support is the most important factor in machine learning performance.

“No other company in the industry (for example, AMD’s graphics processors or Google’s tensor processing unit) has a similarly mature, optimized software ecosystem,” he added.

Other companies are not standing still. Google’s Tensor Processing Unit (TPU) is a notable chip design, while Cerebras designs larger chips with increased memory to enhance hardware performance, Lee said.

“But all of these alternatives suffer the same or more severe software challenges as AMD,” he added. “TPUs are more energy-efficient for machine learning, and Cerebras has demonstrated high performance for scientific applications. But without a mature software stack, developers cannot easily download and run the latest open-source machine learning models, like those from Hugging Face, with competitive performance.”

At some point, generative AI may no longer need to rely on GPUs. Ahmad predicted that companies will one day turn to cheaper CPUs that deliver high-throughput, low-latency results for even the most sophisticated natural language processing (NLP) applications.

“CPUs are more flexible than GPUs, too,” he added. “CPUs are designed for general-purpose tasks and do not rely on batching for performance. Their adaptable nature and simpler infrastructure make them incredibly flexible, scalable, and cost-effective.”