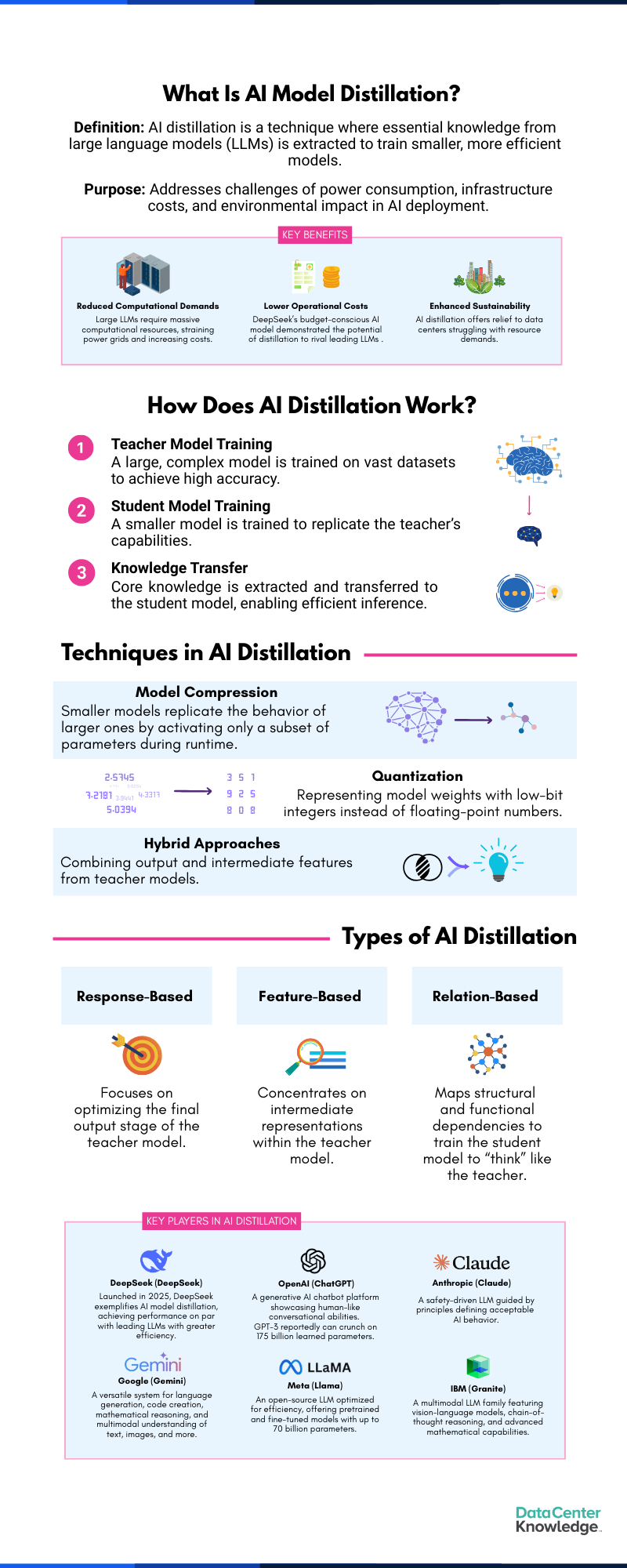

Large language models (LLMs) are placing unprecedented demands on data centers, pushing infrastructure to its limits. AI distillation offers a promising answer to this challenge. The technique tackles critical issues of scalability and sustainability head-on by condensing massive AI systems into smaller, more efficient models.

The Rise of AI Model Distillation

AI distillation gained widespread attention in January 2025, when the Chinese AI research company DeepSeek released a surprisingly budget-conscious AI model. The system reportedly required significantly less computing power than earlier LLMs from AI research startups such as OpenAI and from major hyperscalers. Although DeepSeek's benchmarks remain a subject of debate at the time of this writing, its release heralded something of a sea change for the AI industry.

Key AI model distillation terms include teacher model, student model, knowledge transfer, and quantization. Image: DCN.

DeepSeek's designers used a full toolbox of techniques to create a cost-effective AI model. These included reduced floating-point precision and code hand-optimized for Nvidia's GPU instruction set architecture. Central to their work was AI model distillation, a process inspired by various software architecture principles that prioritize efficiency.
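Reduced numerical precision is the easiest of these techniques to illustrate. The sketch below is a generic example, not DeepSeek's actual scheme, which this article does not detail. It applies symmetric per-tensor int8 quantization (one common form of the quantization named in the caption above) to a random weight matrix, then reports the memory saved and the worst-case rounding error. The function names are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np

def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    scale = float(np.max(np.abs(weights))) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any nonzero scale reproduces it exactly
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)

rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256)).astype(np.float32)
q, scale = quantize_int8(w)
w_hat = dequantize_int8(q, scale)

print(w.nbytes, q.nbytes)  # 262144 65536: one byte per weight instead of four
# Round-to-nearest error is at most half a quantization step (up to float rounding).
print(float(np.max(np.abs(w - w_hat))), scale / 2)
```

Production systems typically use finer-grained scales (per channel or per block) and formats such as FP8. The per-tensor version shown here just makes the size and accuracy trade-off easy to see.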

What distinguished DeepSeek's approach was its effective implementation of selective parameter activation. Although the concept is not new in AI research, DeepSeek used it to work dynamically with fewer neural network weights and to apply them to fewer tokens during specific operational phases. This allowed the smaller "student" model to effectively replicate the capabilities of a larger, more complex "teacher" model, a practical and economical application of established methods.
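This article does not describe how DeepSeek implemented selective parameter activation. One widely used form of the idea is mixture-of-experts routing, where a small gating network sends each token to only a few of many expert weight matrices. The NumPy sketch below assumes that design and uses hypothetical names and random weights. With k=2 of 8 experts, each token touches only a quarter of the expert parameters.

```python
import numpy as np

def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max())
    return e / e.sum()

def moe_forward(tokens, gate_w, experts, k=2):
    """Route each token to its top-k experts; only those experts' weights are used."""
    logits = tokens @ gate_w                       # (n_tokens, n_experts) routing scores
    top_k = np.argsort(logits, axis=1)[:, -k:]     # indices of each token's k best experts
    out = np.zeros((tokens.shape[0], experts[0].shape[1]))
    calls = np.zeros(len(experts), dtype=int)      # tokens processed per expert
    for i, token in enumerate(tokens):
        chosen = top_k[i]
        mix = softmax(logits[i, chosen])           # renormalize over the chosen experts only
        for weight, e in zip(mix, chosen):
            out[i] += weight * (token @ experts[e])
            calls[e] += 1
    return out, calls

rng = np.random.default_rng(0)
d_model, n_experts, n_tokens = 16, 8, 10
experts = [rng.standard_normal((d_model, d_model)) for _ in range(n_experts)]
gate_w = rng.standard_normal((d_model, n_experts))
tokens = rng.standard_normal((n_tokens, d_model))

out, calls = moe_forward(tokens, gate_w, experts, k=2)
print(out.shape)     # (10, 16)
print(calls.sum())   # 20: each of the 10 tokens used only 2 of the 8 experts
```

The per-token loop is written for clarity. Real implementations batch tokens by expert so that each expert runs one dense matrix multiply.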

Understanding AI Distillation

AI model distillation lets smaller models "learn" from larger ones by extracting and transferring key elements such as probabilistic outputs, intermediate features, and structural relationships. As Anant Jhingran, IBM Fellow and CTO for software, explained to DCN at IBM Think 2025, "Essentially, AI distillation is about taking a big model corpus, getting the essence out of it, and teaching it to a small model."

The process typically consists of three steps:

- Teacher model training: A large, complex model (the teacher) is trained on massive datasets to achieve high performance and accuracy.
- Student model training: A smaller, more resource-efficient model (the student) is trained to replicate the teacher's capabilities.
- Knowledge transfer: The final step involves transferring the teacher model's knowledge to the student model. This step is highly nuanced and is by no means a simple "data dump."
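The three steps above can be compressed into a toy example. This minimal sketch rests on strong simplifying assumptions. Both models are random-feature networks whose hidden layers stay fixed, and only their output layers are fit, by least squares. The data is a synthetic 2-D classification task. Steps 2 and 3 are merged: the student is fit directly to the teacher's continuous outputs rather than to the original labels. Real LLM distillation uses gradient-based training on far larger models, and all names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_relu_model(n_in, n_hidden, rng):
    """Fixed random hidden layer plus a bias column; only output weights are trained."""
    W = rng.standard_normal((n_in, n_hidden))
    b = rng.standard_normal(n_hidden)
    return lambda X: np.hstack([np.maximum(X @ W + b, 0.0), np.ones((len(X), 1))])

def fit_output_layer(features, targets):
    """Least-squares fit of the output weights."""
    coef, *_ = np.linalg.lstsq(features, targets, rcond=None)
    return coef

# Toy "corpus": 2-D points with a nonlinear +/-1 label (hypothetical stand-in for real data).
X = rng.standard_normal((2000, 2))
y = np.where(X[:, 0] * (X[:, 1] + 0.5) > 0, 1.0, -1.0)

# Step 1, teacher training: a wide model fit to the ground-truth labels.
teacher_feats = random_relu_model(2, 512, rng)
teacher_coef = fit_output_layer(teacher_feats(X), y)
teacher_out = teacher_feats(X) @ teacher_coef

# Steps 2 and 3, student training with knowledge transfer: a much narrower model
# fit to the teacher's continuous ("soft") outputs instead of the hard labels.
student_feats = random_relu_model(2, 32, rng)
student_coef = fit_output_layer(student_feats(X), teacher_out)
student_out = student_feats(X) @ student_coef

teacher_params = 2 * 512 + 512 + 513   # hidden weights + hidden biases + output weights
student_params = 2 * 32 + 32 + 33
agreement = np.mean(np.sign(student_out) == np.sign(teacher_out))
print(f"teacher params: {teacher_params}, student params: {student_params}")
print(f"student/teacher sign agreement: {agreement:.2%}")
```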

At runtime, distilled models operate with fewer parameters than their larger counterparts, which makes inference more efficient. Their small size and optimized architecture reduce compute and memory requirements during processing, offering much-needed relief to data centers buckling under the weight of AI workloads.
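The arithmetic below makes these runtime savings concrete using two widely cited approximations. First, weight memory is roughly parameter count times bytes per parameter. Second, a dense transformer forward pass costs roughly 2 FLOPs per parameter per token. The 70-billion and 7-billion parameter sizes are hypothetical examples, not figures for any specific model.

```python
def weight_memory_gb(n_params: float, bytes_per_param: float = 2.0) -> float:
    """Memory to hold the weights alone (ignores activations, KV cache, and runtime overhead)."""
    return n_params * bytes_per_param / 1e9

def forward_flops_per_token(n_params: float) -> float:
    """Rule-of-thumb compute for one dense forward pass: about 2 FLOPs per parameter."""
    return 2.0 * n_params

teacher_params, student_params = 70e9, 7e9   # hypothetical model sizes

print(weight_memory_gb(teacher_params), weight_memory_gb(student_params))  # 140.0 14.0 (16-bit weights)
print(forward_flops_per_token(teacher_params) / forward_flops_per_token(student_params))  # 10.0
```

Under these assumptions, a 10x smaller student needs about a tenth of the weight memory and per-token compute. That difference can decide whether a model fits on one GPU or needs several.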

Distillation Techniques and Approaches

The overarching goal of AI distillation is to reduce model size and complexity while maintaining high performance. This can be pursued through various techniques:

- Response-Based Model Distillation: Optimizes against the probability scores of the teacher model's final outputs rather than its internal reasoning processes. For example, the student model learns to predict output likelihoods, such as the probability of a word appearing in a sentence.
- Feature-Based Model Distillation: Focuses on transferring knowledge from intermediate representations within the teacher model's "hidden layers," where features are extracted from input data and processed.
- Relation-Based Model Distillation: Maps the structural and functional dependencies underlying the teacher model's reasoning. The student model learns how the teacher connects different pieces of information to reach conclusions.
- Blended Methods Distillation: Combines both the outputs and the intermediate representations of the teacher model, giving the student insight into both its conclusions and its analytical processes.
- Self-Distillation: Lets models refine their performance by analyzing their own internal processes, effectively allowing a model to act as both student and teacher at once.
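The first four approaches in the list above differ mainly in which teacher signal the student's loss is compared against. The sketch below writes one simplified NumPy loss per approach. The response-based loss is the widely used temperature-scaled KL divergence on soft targets. The feature-based loss assumes a hypothetical projection matrix that maps the student's smaller hidden size up to the teacher's. The relation-based loss compares pairwise cosine-similarity matrices, which is one of several published variants. Self-distillation is omitted, and all function names are illustrative.

```python
import numpy as np

def log_softmax(logits: np.ndarray, T: float = 1.0) -> np.ndarray:
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)          # subtract max for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def response_loss(s_logits, t_logits, T=2.0):
    """Response-based: KL(teacher || student) between temperature-softened output distributions.
    Multiplying by T**2 is a common convention that keeps gradient scale comparable across T."""
    log_p_t, log_p_s = log_softmax(t_logits, T), log_softmax(s_logits, T)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1)
    return float(np.mean(kl) * T**2)

def feature_loss(s_hidden, t_hidden, proj):
    """Feature-based: mean squared error between intermediate representations.
    `proj` (hypothetical, normally learned) lifts the student's hidden size to the teacher's."""
    return float(np.mean((s_hidden @ proj - t_hidden) ** 2))

def relation_loss(s_hidden, t_hidden):
    """Relation-based: compare how each model relates the examples in a batch to one another,
    here through pairwise cosine-similarity matrices (batch x batch, so hidden sizes may differ)."""
    def cosine_matrix(H):
        Hn = H / np.linalg.norm(H, axis=1, keepdims=True)
        return Hn @ Hn.T
    return float(np.mean((cosine_matrix(s_hidden) - cosine_matrix(t_hidden)) ** 2))

def blended_loss(s_logits, t_logits, s_hidden, t_hidden, proj, alpha=0.5, T=2.0):
    """Blended: weighted mix of output-level and intermediate-level signals."""
    return (alpha * response_loss(s_logits, t_logits, T)
            + (1 - alpha) * feature_loss(s_hidden, t_hidden, proj))

rng = np.random.default_rng(0)
batch, n_classes, d_teacher, d_student = 8, 10, 64, 16
t_logits, s_logits = rng.standard_normal((2, batch, n_classes))
t_hidden = rng.standard_normal((batch, d_teacher))
s_hidden = rng.standard_normal((batch, d_student))
proj = 0.1 * rng.standard_normal((d_student, d_teacher))

print(response_loss(s_logits, t_logits))   # positive: the distributions differ
print(response_loss(t_logits, t_logits))   # 0.0: a student matching the teacher exactly
print(relation_loss(s_hidden, t_hidden), blended_loss(s_logits, t_logits, s_hidden, t_hidden, proj))
```

In training, these losses are usually added to the ordinary task loss on ground-truth labels and minimized with respect to the student's parameters (and the projection). This sketch evaluates them only once.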

Infrastructure Challenges for AI Deployment

AI model distillation, like other AI innovations, requires different flavors of infrastructure within the data center. This need evolved in response to the first rush of generative AI adoption, which introduced daunting challenges.

According to Venkat Rajaji, senior vice president of product management at Cloudera, AI infrastructure has become a significant consideration for data center planners and their customers. "As they think about compute demands for AI, they really need to think about the cost effectiveness of that and the dedicated capacity required," Rajaji said.

Data center planners must decide whether to invest in shared or dedicated hardware, weighing workload utilization against capital expenses. For example, shared hardware in the cloud may be more cost-effective for infrequent AI workloads, while dedicated hardware is better suited to consistent, high-demand applications. "People should ask if they have enough workload utilization to justify the CapEx for captive capacity or not," he said. "Are they willing to pay the expense for shared resource capacity in the cloud for what may be infrequent use?"
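Rajaji's utilization question reduces to a break-even calculation. The sketch below assumes a deliberately simple cost model. Owned hardware costs a fixed amount per month: capital expense amortized over its service life, plus fixed operating costs. Cloud capacity is billed per hour used. All dollar figures and function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)

def owned_cost_per_month(capex: float, amortization_months: int, fixed_opex_per_month: float) -> float:
    """Dedicated hardware: capital expense spread over its service life plus fixed running costs."""
    return capex / amortization_months + fixed_opex_per_month

def breakeven_hours(capex, amortization_months, fixed_opex_per_month, cloud_rate_per_hour):
    """Monthly usage above which dedicated hardware is cheaper than paying for cloud hours."""
    return owned_cost_per_month(capex, amortization_months, fixed_opex_per_month) / cloud_rate_per_hour

def cheaper_option(expected_hours, capex, amortization_months, fixed_opex_per_month, cloud_rate_per_hour):
    """Compare one month of cloud usage against one month of owning the hardware."""
    cloud = expected_hours * cloud_rate_per_hour
    owned = owned_cost_per_month(capex, amortization_months, fixed_opex_per_month)
    return "cloud" if cloud < owned else "dedicated"

# Hypothetical figures for one GPU server: $360,000 up front, 36-month life,
# $2,000/month for power, cooling, and support, versus $40/hour for comparable cloud capacity.
params = dict(capex=360_000, amortization_months=36, fixed_opex_per_month=2_000, cloud_rate_per_hour=40)
hours = breakeven_hours(**params)
print(hours, f"{hours / HOURS_PER_MONTH:.0%} utilization")  # 300.0 41% utilization
print(cheaper_option(100, **params), cheaper_option(500, **params))  # cloud dedicated
```

A real analysis would also account for financing, hardware lead times (which the shortages noted below can stretch), and resale value. The structure of the comparison, however, stays the same.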

From an infrastructure perspective, planning for AI workloads raises difficult questions, which supply and demand issues make harder still. GPUs and their supporting components often face shortages and delays, complicating resource allocation and increasing costs.

The underlying infrastructure for AI workloads still includes specialized GPUs, fast memory, close colocation, low-latency networking, and specialized databases.

Affordable AI and Democratization

According to Manoj Sukumaran, principal analyst for data center compute and networking at Omdia (part of Informa TechTarget), computational costs can be reduced because smaller distilled models lower operating expenses per token of output.
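Sukumaran's point about per-token costs follows from simple division. The cost of serving a token is the hourly cost of the hardware divided by the number of tokens it produces per hour. The sketch below assumes hypothetical hourly prices and throughputs for a large teacher-sized deployment and a small distilled student. These are not measured figures, and real numbers vary widely with hardware, batch size, and model.

```python
def cost_per_million_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Serving cost per million output tokens for hardware billed by the hour."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost * 1e6 / tokens_per_hour

# Hypothetical deployments: a large model on a multi-GPU node
# versus a distilled student that fits on a single, cheaper GPU.
large = cost_per_million_tokens(hourly_cost=36.0, tokens_per_second=1_000)
small = cost_per_million_tokens(hourly_cost=4.5, tokens_per_second=2_500)
print(large, small)  # 10.0 0.5
```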

"Distillation is making AI more affordable," Sukumaran said. "It plays a key role in making AI much more ubiquitous."

AI distillation is "basically the way to go to democratize AI," he added.

On one level, AI distillation marks another shift in the ongoing "language model parameter race," in which smaller models might eventually prevail. Smaller models require fewer computational resources, making them more accessible to businesses that cannot afford the infrastructure needed for larger models.

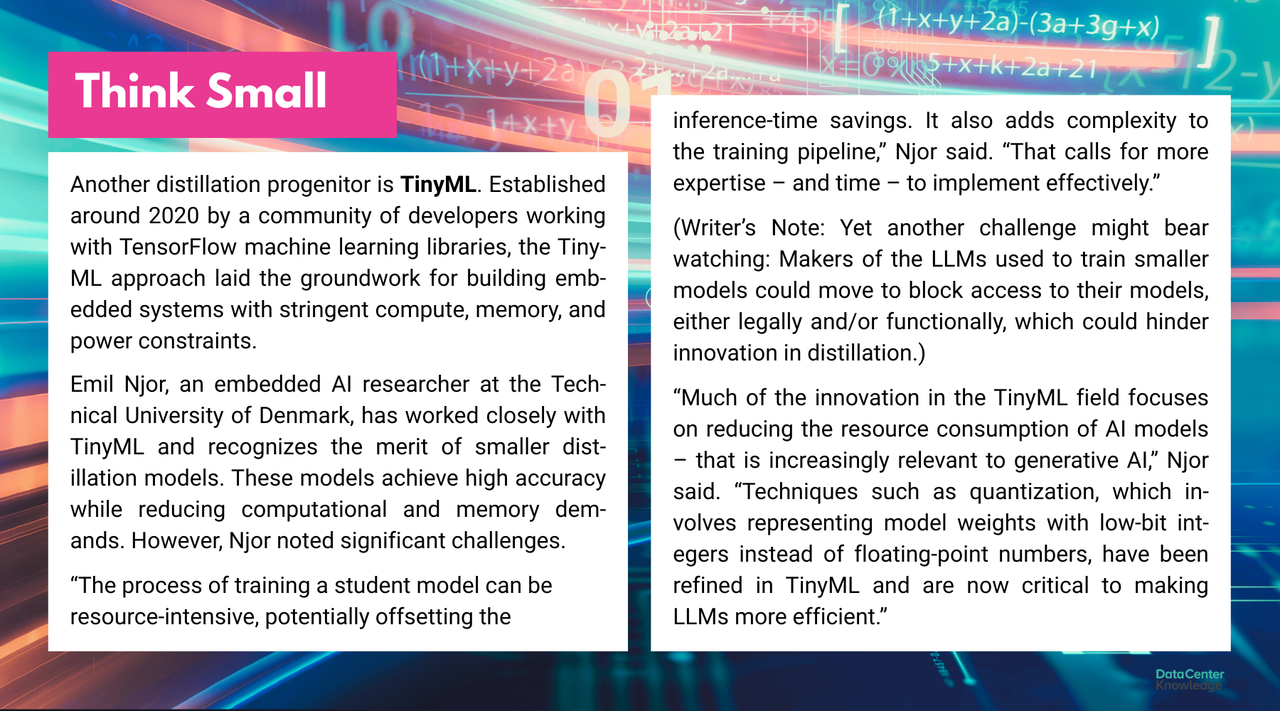

In time, some AI processing might move from centralized data centers to personal devices such as PCs and smartphones. Researcher Emil Njor said he foresees such a migration.

"As AI research progresses, I hope we'll continue to find ways to make even the most complex models efficient enough to run on personal devices," he said. "This would enable more private, sustainable and accessible AI experiences."

Decentralized AI could reduce reliance on large data centers, cut energy consumption, and give users more control over their data.