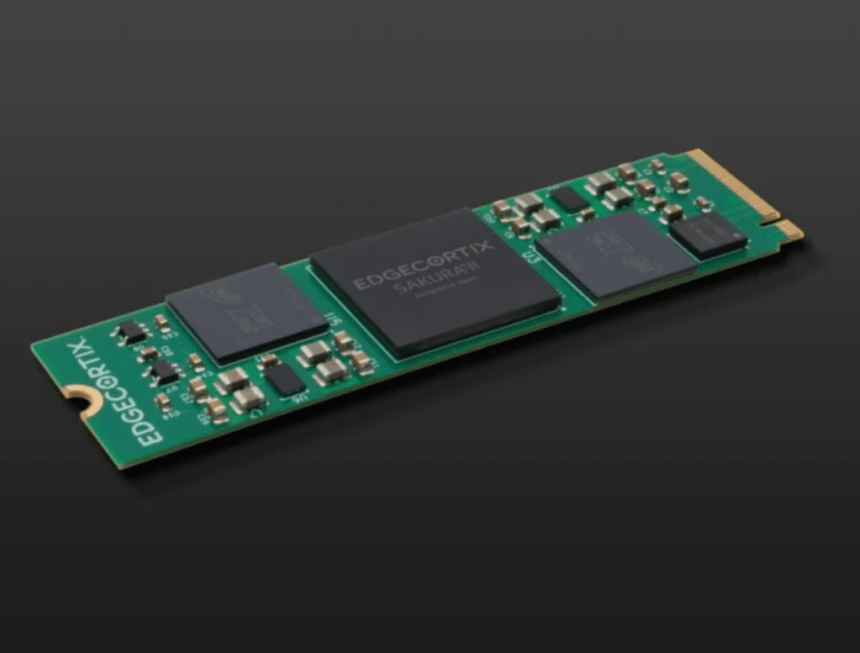

EdgeCortix, a Japanese fabless semiconductor company, has unveiled the SAKURA-II edge AI accelerator, designed specifically to deliver high performance and power efficiency for generative AI applications at the edge.

The platform is built on the company's proprietary second-generation Dynamic Neural Accelerator (DNA) architecture and focuses on the demands of Large Language Models (LLMs), Large Vision Models (LVMs), and multimodal transformer-based applications.

The DNA architecture is a runtime-reconfigurable neural processing engine: the interconnects between its compute units can be reconfigured to achieve high parallelism and efficiency. It uses a patented approach to reconfigure the data paths between the compute engines.

EdgeCortix claims that the accelerator can deliver up to 60 trillion operations per second (TOPS) for 8-bit integer operations and up to 30 trillion 16-bit floating-point operations per second (TFLOPS).

In terms of memory bandwidth, EdgeCortix says the platform offers up to four times more DRAM bandwidth than competing AI accelerators. It also provides software-enabled mixed-precision support, which the company says allows it to achieve near-FP32 (32-bit floating-point) accuracy.

“Whether running traditional AI models or the latest Llama 2/3, Stable Diffusion, Whisper or Vision Transformer models, SAKURA-II provides deployment flexibility at superior performance per watt and cost efficiency,” says Sakyasingha Dasgupta, chief executive officer and founder of EdgeCortix.

According to EdgeCortix, the performance of the SAKURA-II edge AI accelerator can be further improved with the MERA software suite, a versatile compiler platform that supports a variety of hardware configurations. The software incorporates advanced quantization and model calibration, which the company says reduce model size and improve inference speed without compromising accuracy.
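To make the quantization claim more concrete, here is a minimal sketch of one common, generic technique: symmetric per-tensor INT8 post-training quantization with max-absolute-value calibration. It is an illustration under stated assumptions, not MERA's implementation or API, neither of which the announcement describes. The function names and sample weights below are hypothetical.

```python
# Hypothetical illustration of generic symmetric INT8 post-training quantization
# with max-abs calibration. This is NOT MERA's API; all names are invented here.

def calibrate_scale(calibration_values):
    """Pick a per-tensor scale so the largest observed magnitude maps to 127."""
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / 127.0 if max_abs > 0 else 1.0

def quantize(values, scale):
    """Map floats to int8 codes in [-127, 127]: round to nearest, then clamp."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]

# Hypothetical FP32 weights; here they double as the calibration sample.
weights = [0.82, -1.27, 0.053, 0.4, -0.331, 1.108]
scale = calibrate_scale(weights)
codes = quantize(weights, scale)
restored = dequantize(codes, scale)

# Storage: 4 bytes per FP32 value vs 1 byte per INT8 code plus one FP32 scale.
fp32_bytes = 4 * len(weights)
int8_bytes = 1 * len(codes) + 4
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 codes:", codes)
print("bytes (fp32 vs int8):", fp32_bytes, int8_bytes)
print(f"max abs reconstruction error: {max_error:.4f}")
```

Storing one byte per weight instead of four is where the size reduction comes from, and the rounding error is bounded by half the scale for values inside the calibrated range. Values outside that range are clamped, which is why the choice of calibration data matters in practice.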

SAKURA-II can run a range of models, such as Llama 2/3, Stable Diffusion, Whisper, and Vision Transformer models. It also includes built-in memory compression, which reduces memory usage and improves overall efficiency, making it particularly useful for generative AI applications.

“We're committed to ensuring we meet our customers' diverse needs and to securing a technological foundation that remains robust and adaptable in the swiftly evolving AI sector,” Dasgupta adds.

The announcement follows the company's 2024 forecast, which predicts that efficient edge AI chips will significantly transform processing power and provide tailored functionality for generative AI and language models.
