Introduction

Nvidia recently made a big splash by announcing its next-generation Blackwell GPUs, but the rest of 2024 promises to be a busy year in the data center chip market as rival chipmakers are poised to release new processors.

AMD and Intel are expected to launch new competing data center CPUs, while other chipmakers, including hyperscalers and startups, plan to unveil new AI chips to meet the soaring demand for AI workloads, analysts say.

Fittingly, Intel on Tuesday (April 9) confirmed that its new Gaudi 3 AI accelerator for AI training and inferencing is expected to be generally available during the 2024 third quarter, while Meta on Wednesday (April 10) announced that its next-generation AI inferencing processor is in production and already being used in its data centers today.

While server sales are expected to grow by 6%, from 10.8 million server shipments in 2023 to 11.5 million in 2024, server revenue is expected to jump 59% year-over-year in 2024, an indication that processors remain a hot, growing market, said Manoj Sukumaran, a principal analyst of data center IT at Omdia. In fact, over the next five years, server revenue is expected to more than double to $270 billion by 2028.

“Even though the unit shipments are not growing significantly, revenue is growing quite fast because there is a lot of silicon going inside these servers, and as a result, server prices are going up significantly,” Sukumaran told DCN. “This is a huge opportunity for silicon vendors.”
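The gap between unit growth and revenue growth is what drives Sukumaran's point. A minimal back-of-envelope sketch, using only the Omdia figures quoted above and assuming revenue is simply unit shipments multiplied by average revenue per server, shows how much average revenue per server would have to rise. The function name is illustrative, and "average revenue per server" is a simplification, not a reported price.

```python
# Back-of-envelope check on the Omdia figures quoted in this article.
# Assumption: revenue = units x average revenue per server, so the ratio of
# average revenue per server equals the revenue ratio divided by the unit ratio.

def implied_asp_growth(units_prev: float, units_next: float, revenue_growth: float) -> float:
    """Return the implied fractional growth in average revenue per server."""
    unit_ratio = units_next / units_prev
    return (1 + revenue_growth) / unit_ratio - 1

units_2023 = 10.8e6    # server shipments, 2023 (Omdia, as quoted)
units_2024 = 11.5e6    # forecast server shipments, 2024 (Omdia, as quoted)
revenue_growth = 0.59  # forecast year-over-year server revenue growth, 2024

unit_growth = units_2024 / units_2023 - 1
asp_growth = implied_asp_growth(units_2023, units_2024, revenue_growth)

print(f"Unit growth: {unit_growth:.1%}")  # Unit growth: 6.5%
print(f"Implied growth in average revenue per server: {asp_growth:.1%}")  # 49.3%
```

In other words, unit shipments rising about 6.5% while revenue rises 59% implies roughly 49% more revenue per server, consistent with "a lot of silicon going inside these servers."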

Co-Processors Are a Hot Commodity

Data center operators have a large appetite for ‘co-processors’ – microprocessors designed to supplement and augment the capabilities of the primary processor.

Traditionally, the data center server market was CPU-centric with CPUs being the most expensive component in general-purpose servers, Sukumaran said. Just over 11% of servers had a co-processor in 2020, but by 2028, more than 60% of servers are expected to include co-processors, which not only increase compute capacity but also improve efficiency, he said.

Co-processors like Nvidia's H100 and AMD's MI300 GPUs, Google Cloud's Tensor Processing Units (TPUs), and other custom application-specific integrated circuits (ASICs) are popular because they enable AI training, AI inferencing, database acceleration, the offloading of network and security functions, and video transcoding, Sukumaran said.

Video transcoding is a process that enables Netflix, YouTube, and other streaming services to optimize video quality for different user devices, from TVs to smartphones, the analyst noted.

AMD and Intel vs. Arm CPUs

The CPU market remains lucrative. Intel is still the market share leader, but AMD and Arm-based CPUs from the likes of startup Ampere and cloud service providers have chipped away at Intel's dominance in recent years.

While Intel owns 61% of the CPU market, AMD has gained significant traction, growing from less than 10% of server unit shipments in 2020 to 27% in 2023, according to Omdia. Arm CPUs captured 9% of the market last year.

“The Arm software ecosystem has matured quite well over the past few years, and the lower power consumption and high-core densities of Arm CPUs are appealing to cloud service providers,” Sukumaran said.

In fact, Google Cloud on Tuesday (April 9) announced that its first Arm-based CPUs, called Google Axion Processors, will be available to customers later this year.

Intel aims to regain its footing in the CPU market this year by releasing next-generation server processors. The new Intel Xeon 6 processors with E-cores, formerly code-named 'Sierra Forest,' are expected to be available during the 2024 second quarter and are designed for hyperscalers and cloud service providers who want power efficiency and performance.

That will be followed soon after by the launch of the new Intel Xeon 6 processors with P-cores, formerly code-named Granite Rapids, which focus on high performance.

AMD, however, is not sitting still and plans to release its fifth-generation EPYC CPU called Turin.

“AMD has been far and away the performance leader and has done an amazing job stealing market share from Intel,” said Matt Kimball, vice president and principal analyst at Moor Insights & Strategy. “Almost all of it has been in the cloud with hyperscalers, and AMD is looking to further extend its gains with on-premises enterprises as well. 2024 is where you will see Intel emerge as competitive again with server-side CPUs from a performance perspective.”

Chipmakers Begin Focusing on AI Inferencing

Companies across all verticals are racing to build AI models, so AI training will remain huge. But in 2024, the AI inferencing chip market will begin to emerge, said Jim McGregor, founder and principal analyst at Tirias Research.

“There is a shift toward inferencing processing,” he said. “We’re seeing a lot of AI workloads and generative AI workloads come out. They’ve trained the models. Now, they need to run them over and over again, and they want to run those workloads as efficiently as possible. So expect to see new products from vendors.”

Nvidia dominates the AI space with its GPUs, but AMD has produced a viable competitive offering with the December release of its Instinct MI300 series GPU for AI training and inferencing, McGregor said.

While GPUs and even CPUs are used for both training and inferencing, an increasing number of companies, including Qualcomm, hyperscalers like Amazon Web Services (AWS) and Meta, and AI chip startups like Groq, Tenstorrent, and Untether AI, have built or are developing chips specifically for AI inferencing. Analysts also say these chips are more energy-efficient.

When organizations deploy an Nvidia H100 or AMD MI300, those GPUs are well-suited for training because they are big, with a large number of cores, and are high-performing with high-bandwidth memory, Kimball said.

“Inferencing is a more lightweight task. They don’t need the power of an H100 or MI300,” he said.

Top Data Center Chips for 2024 – An Expanding List

Here’s a list of processors that are expected to come out in 2024. DCN will update this story as companies make new announcements and release new products.

AMD

AMD Instinct MI300X

AMD plans to launch Turin, its next-generation server processor, during the second half of 2024, AMD CEO Lisa Su told analysts during the company’s 2023 fourth-quarter earnings call in January. Turin is based on the company’s new Zen 5 core.

“Turin is a drop-in replacement for existing 4th Gen EPYC platforms that extends our performance, efficiency and TCO leadership with the addition of our next-gen Zen 5 core, new memory expansion capabilities, and higher core counts,” she said on the earnings call.

No specific details of Turin are available. But Kimball, the Moor Insights & Strategy analyst, said Turin will be significant. “AMD will look to further differentiate themselves from Intel from a performance and performance-per-watt perspective,” he said.

AMD has also seen huge demand for its Instinct MI300 accelerators, including the MI300X GPU, since their launch in December. The company plans to aggressively ramp up production of the MI300 this year for cloud, enterprise and supercomputing customers, Su said during the earnings call.

Intel

Intel 5th Gen Xeon chip

Intel executives plan to release several major chips this year: its Gaudi 3 AI accelerator and next-generation Xeon server processors.

Gaudi 3 will be used for AI training and inferencing and is aimed at the enterprise market. It's designed to compete against Nvidia's and AMD's GPUs. The AI chip will offer four times more AI compute and 1.5 times more memory bandwidth than its predecessor, the Gaudi 2, Intel executives said at the company's Intel Vision 2024 event in Phoenix this week.

Gaudi 3 is projected to deliver 50% faster training and inferencing times and 40% better power efficiency for inferencing when compared to Nvidia's H100 GPU, Intel executives added.

“This is going to be competitive, with huge power savings and a lower price,” said Kimball, the analyst.

As for its next-generation Intel Xeon 6 processors, Sierra Forest will include a version that features 288 cores, which would be the largest core count in the industry. It's also the company's first “E-core” server processor, designed to balance performance with energy efficiency.

Granite Rapids is a “P-core” server processor that's designed for the best performance. It will offer two to three times better performance for AI workloads than Sapphire Rapids, the company said.

Gaudi 3 will be available to OEMs during the 2024 second quarter, with general availability expected during the third quarter, an Intel spokesperson said. Sierra Forest, now called Intel Xeon 6 processors with E-cores, is expected to be available during the 2024 second quarter. Granite Rapids, now called Intel Xeon 6 processors with P-cores, is expected to launch “soon after,” the spokesperson said.

The news follows Intel's launch of its fifth-generation Xeon CPU last year.

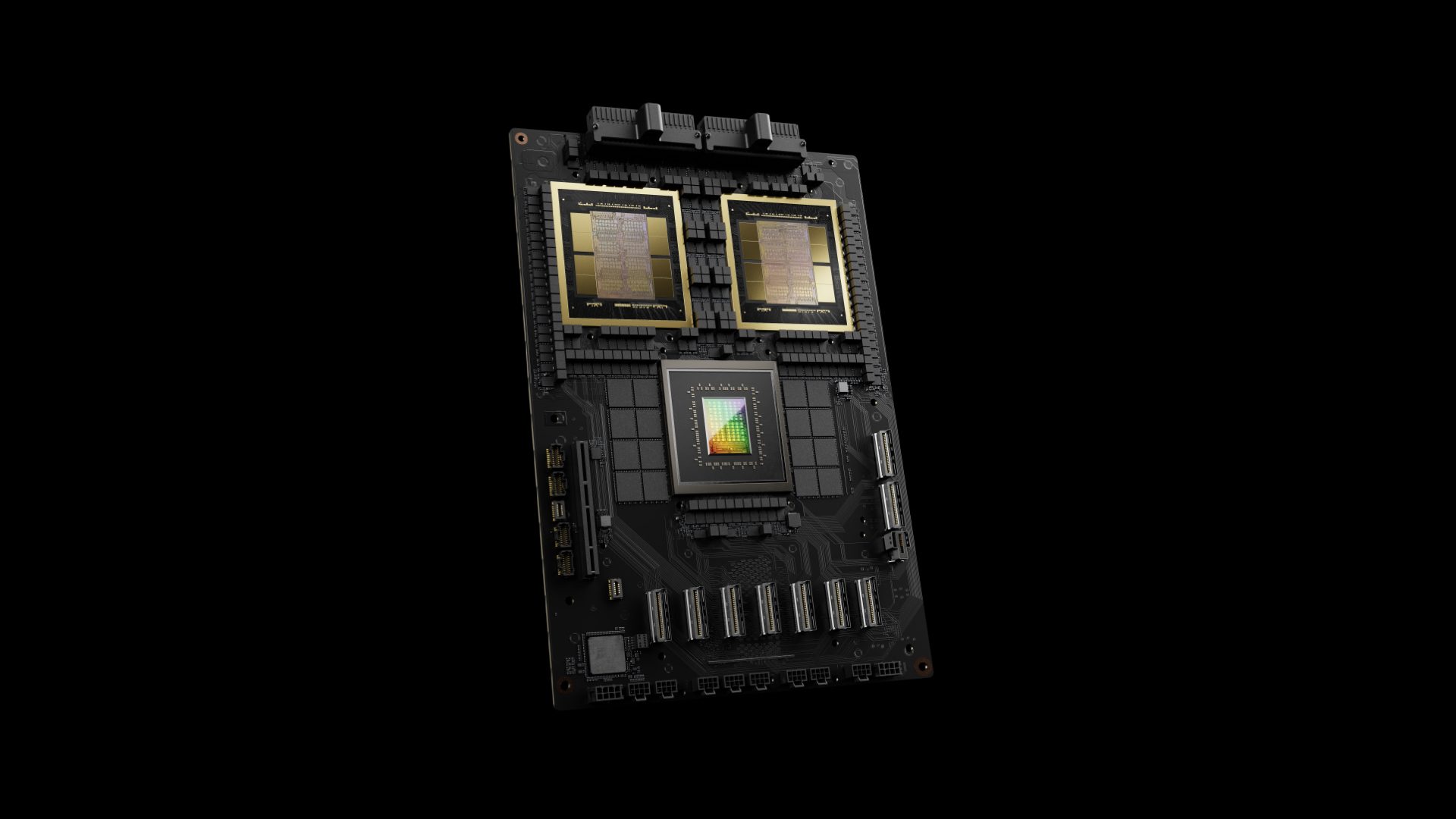

Nvidia

Nvidia GB200 Grace Blackwell

Nvidia in mid-March announced that it will start shipping next-generation Blackwell GPUs later this year, which analysts say will enable the chip giant to continue to dominate the AI chip market.

Nvidia will also ship the Hopper-based H200 during the 2024 second quarter. The company recently announced new benchmarks showing that the H200 is the most powerful platform for running generative AI workloads. An H200 will perform 45% faster than an H100 while inferencing a 70-billion-parameter Llama 2 model, the company said.

Ampere

AmpereOne Chip

Ampere did not respond to DCN's request for information on its chip plans for 2024. But last May, the startup, led by former Intel president Renee James, announced a new family of custom-designed, Arm-compatible server processors that feature up to 192 cores.

That processor, referred to as AmpereOne, is designed for cloud service providers and simultaneously delivers high performance with high power efficiency, company executives said.

AWS

AWS Trainium2

AWS is among the hyperscalers that partner with large chipmakers such as Nvidia, AMD and Intel and use their processors to provide cloud services for customers. But hyperscalers also find it advantageous and cost-effective to build custom chips to power their own data centers and cloud services.

AWS this year will launch Graviton4, an Arm-based CPU for general-purpose workloads, and Trainium2 for AI training. Last year, it also unveiled Inferentia2, its second-generation AI inferencing chip, said Gadi Hutt, senior director of product and business development at AWS' Annapurna Labs.

“Our goal is to give customers the freedom of choice and give them high performance at significantly lower cost,” Hutt said.

Trainium2 will feature four times the compute and three times the memory of the first-generation Trainium processor. While AWS uses the first Trainium chip in clusters of 60,000 chips, Trainium2 will be available in clusters of 100,000 chips, Hutt said.

Microsoft Azure

Microsoft Azure Maia 100 AI Accelerator

Microsoft recently announced the Microsoft Azure Maia 100 AI Accelerator for AI and generative AI tasks and the Cobalt 100 CPU, an Arm-based processor for general-purpose compute workloads.

The company said in November that it would begin rolling out the two processors in early 2024 to initially power Microsoft services, such as Microsoft Copilot and Azure OpenAI Service.

The Maia AI accelerator is designed for both AI training and inferencing, while the Cobalt CPU is an energy-efficient chip that's designed to deliver good performance-per-watt, the company said.

Google Cloud

Google Axion Processor

Google Cloud is a trailblazer among hyperscalers, having first launched its custom Tensor Processing Units (TPUs) in 2013. TPUs, designed for AI training and inferencing, are available to customers on Google Cloud. The processors also power Google services, such as Search, YouTube, Gmail and Google Maps.

The company launched its fifth-generation TPU late last year. The Cloud TPU v5p can train models 2.8 times faster than its predecessor, the company said.

Google Cloud on Tuesday (April 9) announced that it has developed its first Arm-based CPUs, called the Google Axion Processors. The new CPUs, built using the Arm Neoverse V2 CPU, will be available to Google Cloud customers later this year.

The company said customers will be able to use Axion in many Google Cloud services, including Google Compute Engine, Google Kubernetes Engine, Dataproc, Dataflow and Cloud Batch.

Kimball, the analyst, expects AMD and Intel to take a revenue hit as Google Cloud begins to deploy its own CPU for its customers.

“For Google, it's a story of ‘I've got the right performance at the right power envelope at the right cost structure to deliver services to my customers.’ That's why it's important to Google,” he said. “They look across their data center. They have a power budget, they have certain SLAs, and they have certain performance requirements they have to meet. They designed a chip that meets all those requirements very specifically.”

Meta

Meta has deployed a next-generation custom chip for AI inferencing at its data centers this year, Meta announced on Wednesday (April 10).

The next-generation AI inferencing chip, previously code-named Artemis, is part of the company's Meta Training and Inference Accelerator (MTIA) family of custom-made chips designed for Meta's AI workloads.

Meta launched its first-generation AI inferencing chip, MTIA v1, last year. The new next-generation chip offers three times better performance and 1.5 times better performance-per-watt than the first-generation chip, the company said.

Cerebras

AI hardware startup Cerebras Systems introduced its third-generation AI processor, the WSE-3, in mid-March. The wafer-sized chip doubles the performance of its predecessor and competes against Nvidia in the high end of the AI training market.

The company in mid-March also partnered with Qualcomm to provide AI inferencing to its customers. Models trained on Cerebras' hardware are optimized to run inferencing on Qualcomm's Cloud AI 100 Ultra accelerator.

Groq

Groq is a Mountain View, California-based AI chip startup that has built the LPU Inference Engine to run large language models, generative AI applications and other AI workloads.

Groq, which released its first AI inferencing chip in 2020, is targeting hyperscalers, enterprises, the public sector, AI startups and developers. The company will release its next-generation chip in 2025, a company spokesperson said.

Tenstorrent

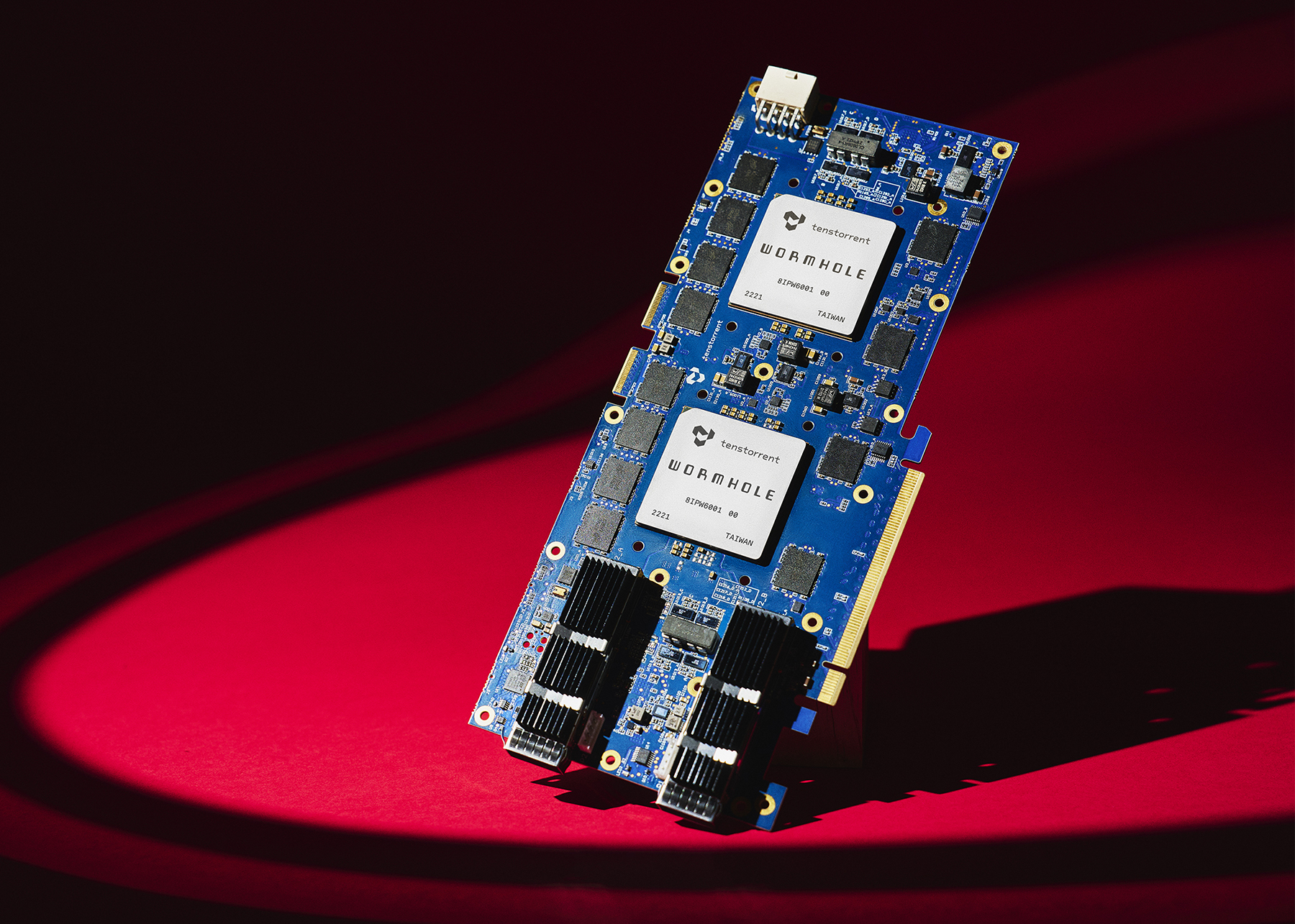

Tenstorrent Wormhole Two Chip

Tenstorrent is a Toronto-based AI inferencing startup with a strong pedigree: its CEO is Jim Keller, a chip architect who has worked at Apple, AMD, Tesla and Intel and helped design AMD's Zen architecture and chips for early Apple iPads and iPhones.

The company began taking orders for its Wormhole AI inferencing chips this year, with a formal launch planned for later this year, said Bob Grim, Tenstorrent's vice president of strategy and corporate communications.

Tenstorrent is selling servers powered by 32 Wormhole chips to enterprises, labs and any organization that needs high-performance computing, he said. Tenstorrent is currently focused on AI inferencing, but its chips can also power AI training, so the company plans to support AI training in the future as well, Grim said.

Untether AI

Untether AI is a Toronto-based AI chip startup that builds chips for energy-efficient AI inferencing.

The company, whose president is Chris Walker, a former Intel corporate vice president and general manager, shipped its first product in 2021 and plans to make its second-generation SpeedAI240 chip available this year, a company spokesperson said.

Untether AI's chips are designed for a variety of form factors, from single-chip devices for embedded applications to 4-chip PCI Express accelerator cards, so its processors are used from the edge to the data center, the spokesperson said.

I appreciate the effort put into this post.