Interest in containers and container platforms exploded in the second half of the 2010s. Amid this surging popularity, containers have become the third runtime for hosting applications, alongside Linux and Windows virtual machines (VMs).

In this guide, we explore the benefits of running containers in the cloud and take a closer look at how to make security work for containerized workloads.

How to Run Containers in the Cloud

Unlike traditional application packages, container images contain not only the application but also all of its dependencies, including system libraries, drivers, and configurations. They ease deployments by making manual operating system configuration and error-prone device installations on VMs obsolete. Deployments become smooth, quick, and trouble-free.

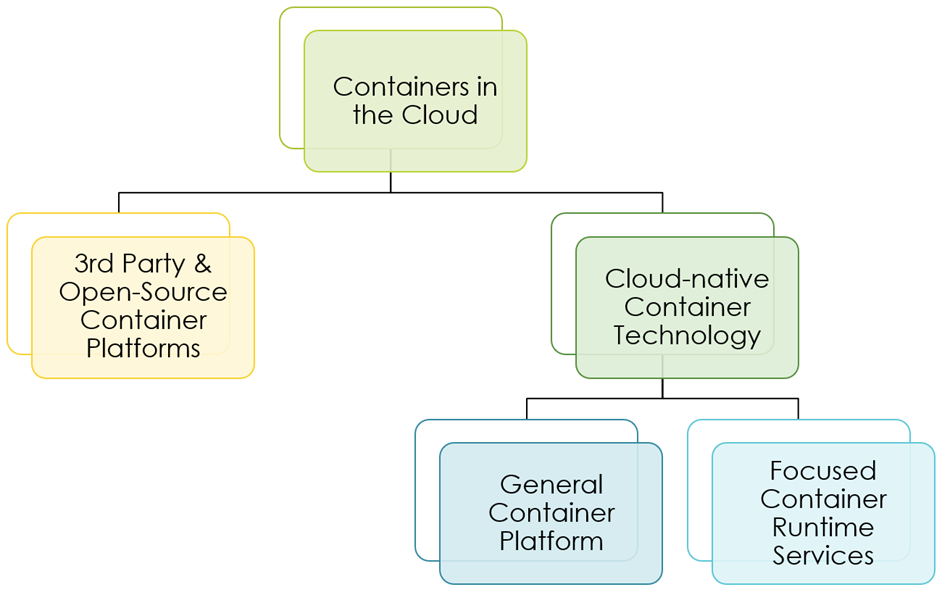

All big cloud service providers (CSPs) offer multiple cloud services for running container workloads (Figure 1). In the first variant, customers deploy an open source or third-party container platform of their choice (e.g., Red Hat OpenShift or Apache Mesos) and install it themselves on VMs in the cloud. In this scenario, the cloud provider is not involved in setting up or running the container platform.

Figure 1 – How to run containerized applications in the public cloud

The second option is running containers on a CSP-provided managed container platform service. Examples are Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), and Amazon Elastic Kubernetes Service (EKS). One click and a Kubernetes cluster spins up automatically.

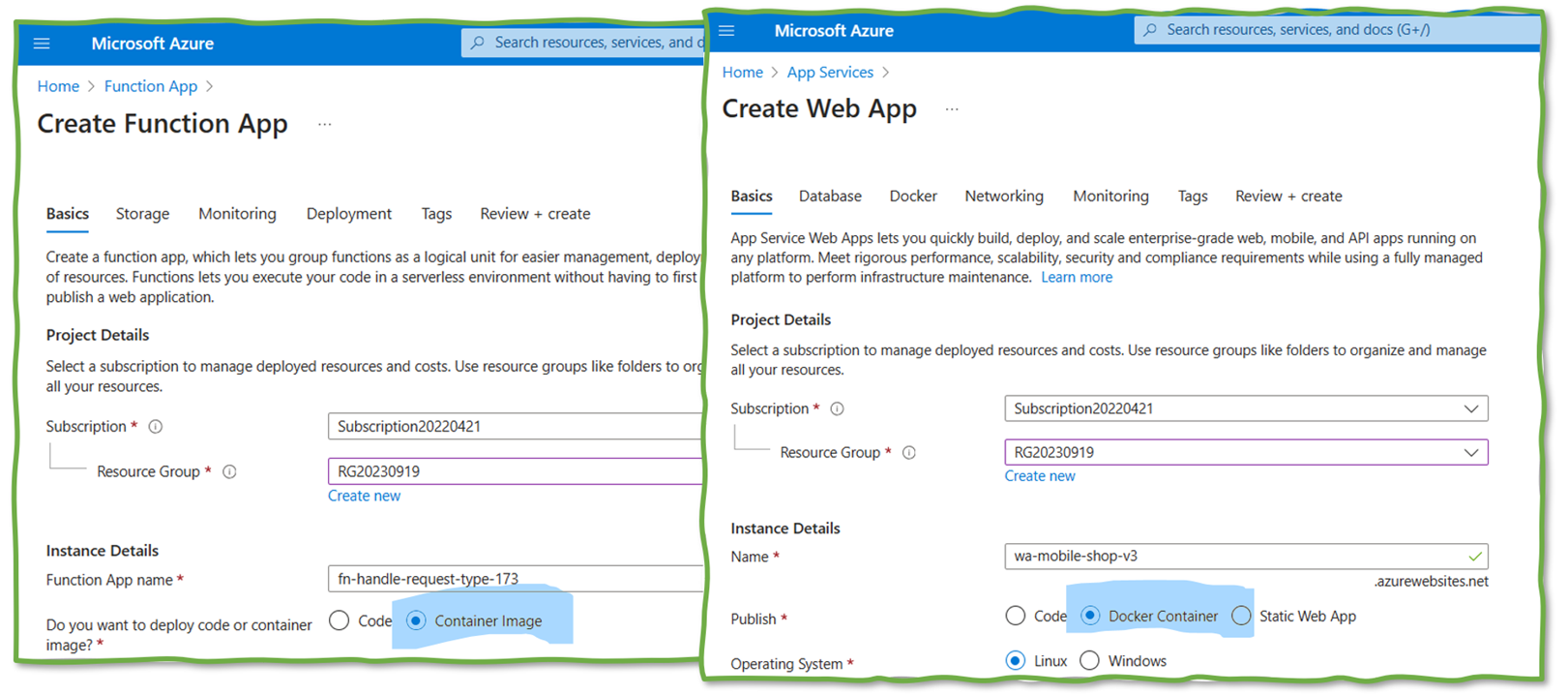

Under this model, the container and platform configurations are the customer’s responsibility. That is the main difference from the third option, a focused container runtime platform in the cloud, such as Azure Function Apps or Azure Web Apps (Figure 2). These services let engineers deploy containerized applications, among other options, but hide the underlying container platform. The challenge for security architects is securing this whole bouquet of platforms running containerized applications.

Figure 2 – Deploying containers as a function or web app in Azure

Container Security Requirements

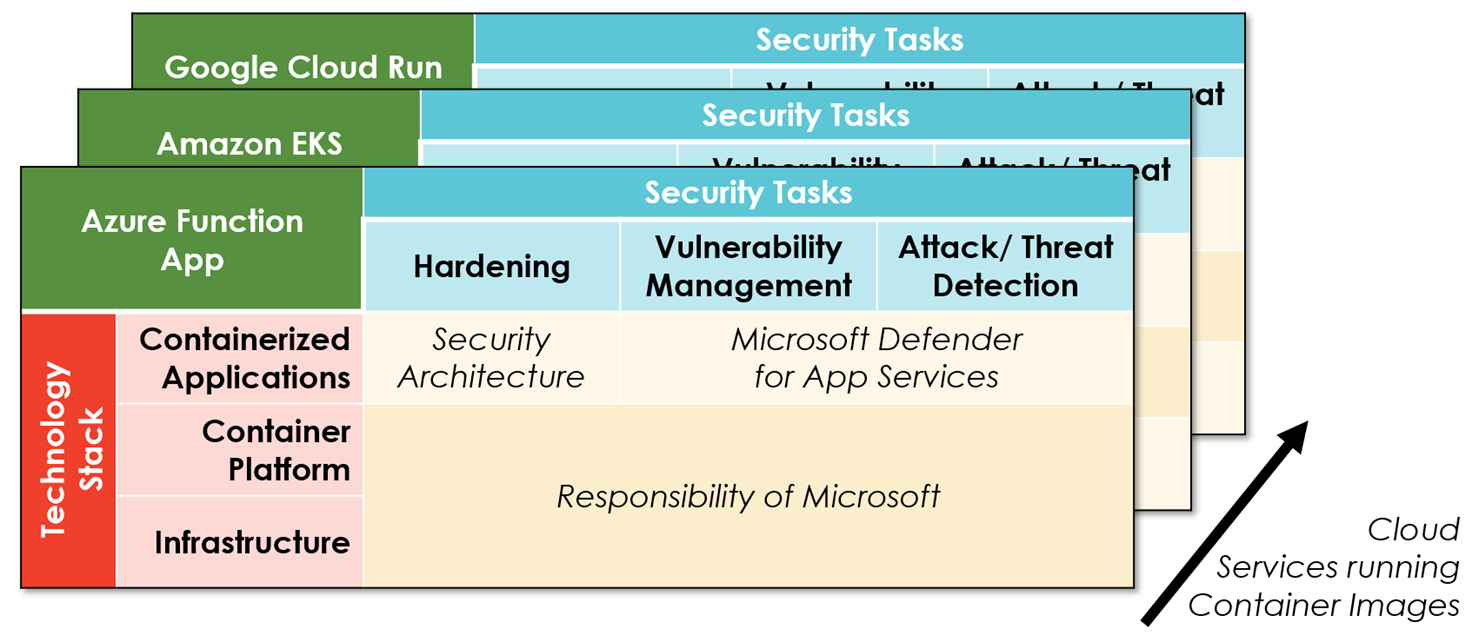

To safeguard container workloads effectively, security architects need a comprehensive understanding of the technology layers and the security-related tasks. These tasks are hardening all relevant components, managing vulnerabilities, and proactively identifying and responding to threats and attacks across the complete technology stack (Figure 3).

Hardening entails configuring all components securely, particularly implementing robust access control and network designs. Vulnerability management covers identifying security weaknesses and misconfigurations in self-developed and third-party components and patching them. Even when container images are vulnerability-free at deployment time, vulnerabilities might be identified later, as the Log4j case exemplifies. Finally, hackers can attack containerized applications and the underlying container platforms. Thus, organizations must detect anomalies and suspicious behavior in their container estate (threat detection).

Figure 3 – Security-related challenges for containerized workloads

Hardening, vulnerability management, and threat detection are necessities for the complete technology stack (Figure 3, left): the underlying infrastructure layer (think VMs, network, storage, or cloud tenant setups), the container platform itself (e.g., OpenShift or Amazon Elastic Kubernetes Service, EKS), and, finally, each containerized application.

Responsibilities for the platform layer differ depending on the cloud service. For containerized runtime environments such as Azure Function Apps, customers must ensure that the image is vulnerability-free and the service configuration is secure; everything else is the CSP’s responsibility. In contrast, running an Amazon EKS cluster means the container platform configuration is also the customer’s responsibility, though the CSP patches the platform components.

Figure 4 visualizes all container-workload-related security challenges. Security architects must understand and define how they address each security task (hardening, vulnerability management, attack/threat detection) for each cloud service running container images (e.g., Azure Function App, Amazon EKS, Cloud Run) and for every layer of the technology stack (application, platform, infrastructure), provided that the customer and not the CSP is responsible for the particular layer.

Figure 4 – Analyzing an organization’s coverage of container-related technologies and risks
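The coverage analysis described above can be sketched as a small gap check. This is a minimal illustration, not a definitive model: the responsibility split in `CUSTOMER_OWNED` and the inventory in `CONTROLS_IN_PLACE` are simplified assumptions made up for this example, and the function name `coverage_gaps` is hypothetical. The actual split must be taken from each provider's shared-responsibility documentation.

```python
from itertools import product

LAYERS = ("application", "platform", "infrastructure")
TASKS = ("hardening", "vulnerability management", "threat detection")

# Assumption for illustration: (layer, task) cells the customer owns per service.
CUSTOMER_OWNED = {
    "Azure Function App": {
        ("application", "hardening"),
        ("application", "vulnerability management"),
        ("application", "threat detection"),
    },
    "Amazon EKS": {
        ("application", "hardening"),
        ("application", "vulnerability management"),
        ("application", "threat detection"),
        ("platform", "hardening"),         # platform configuration is the customer's
        ("platform", "threat detection"),  # assumed; platform patching is left to the CSP
    },
}

# Hypothetical inventory: cells for which the organization already has a control.
CONTROLS_IN_PLACE = {
    "Azure Function App": {("application", "vulnerability management")},
    "Amazon EKS": {
        ("application", "hardening"),
        ("application", "vulnerability management"),
        ("platform", "hardening"),
    },
}


def coverage_gaps(owned, in_place):
    """List (service, layer, task) cells the customer owns but has not covered."""
    gaps = []
    for service, cells in owned.items():
        covered = in_place.get(service, set())
        # Walk the full layer x task grid so the output order is stable.
        for layer, task in product(LAYERS, TASKS):
            if (layer, task) in cells and (layer, task) not in covered:
                gaps.append((service, layer, task))
    return gaps


if __name__ == "__main__":
    for gap in coverage_gaps(CUSTOMER_OWNED, CONTROLS_IN_PLACE):
        print(" / ".join(gap))
```

Keeping the grid explicit makes it obvious when a newly adopted container service has no entry at all, which is exactly the blind spot the analysis is meant to expose.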

Making Security Work for Containerized Workloads

Cloud-native security tools like Microsoft Defender or Google’s Security Command Center are fantastic first solutions for securing containerized workloads in a public cloud. These tools detect vulnerabilities and threats in container workloads and the underlying platforms. While certainly not free, customers can activate them with just a few clicks and benefit immediately from an improved security posture.

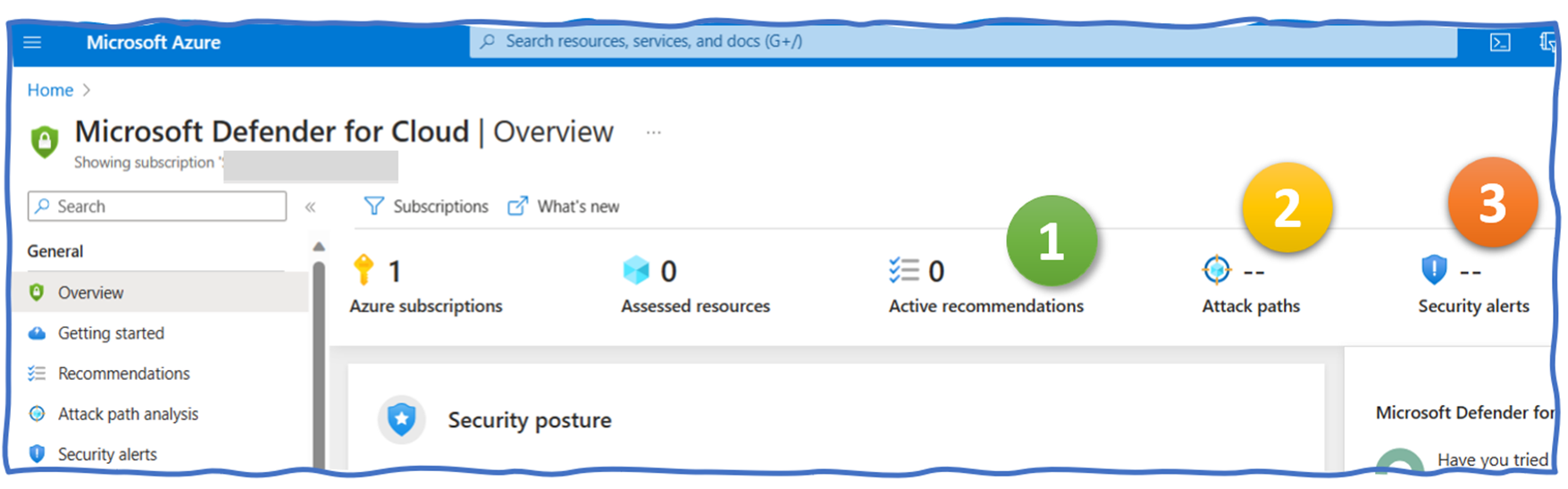

For Azure Function Apps, one of the Azure services that can run containerized workloads, the relevant Microsoft product is Defender for Cloud. First, it provides Defender Recommendations. They reveal insecure parameter and configuration choices, such as “Function App should only be accessible over HTTPS” (Figure 5, 1). These recommendations are based (partially) on the CIS benchmark. The second Defender feature, Attack Paths analysis, looks at a broader context. It identifies attack paths that chain multiple imperfect configuration choices to break into a company’s cloud estate (2). Finally, Defender Security Alerts warn specialists about ongoing activities that might be an attack (3).

Figure 5 – Microsoft Defender for Cloud
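To make the idea of a configuration recommendation concrete, the following minimal sketch evaluates rules against a service configuration. It does not call Defender for Cloud or any Azure API. The configuration dictionary, its keys (`https_only`, `auth_required`, `public_api`), the second rule, and the function `failed_rules` are all hypothetical placeholders chosen for illustration; only the HTTPS rule text comes from the example above.

```python
# Each rule pairs a description with a predicate that returns True
# when the (hypothetical) configuration dictionary is compliant.
RULES = [
    (
        "Function App should only be accessible over HTTPS",
        lambda cfg: cfg.get("https_only") is True,
    ),
    (
        # Hypothetical company-specific rule, not a Defender recommendation.
        "Function App should require authentication unless it is a declared public API",
        lambda cfg: cfg.get("auth_required") is True or cfg.get("public_api") is True,
    ),
]


def failed_rules(config, rules=RULES):
    """Return the descriptions of all rules the configuration violates."""
    return [description for description, is_compliant in rules if not is_compliant(config)]


if __name__ == "__main__":
    # Placeholder configuration; a real check would read the deployed settings.
    orders_func = {"name": "orders-func", "https_only": False, "auth_required": True}
    for description in failed_rules(orders_func):
        print(f"{orders_func['name']}: {description}")
```

Treating a missing key as non-compliant (via `cfg.get(...) is True`) is a deliberate choice in this sketch: an unknown setting should surface as a finding rather than pass silently.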

Amazon GuardDuty for AWS and the Google Security Command Center for GCP play the same role that Microsoft Defender plays for Azure: good cloud-native, out-of-the-box services for securing (not only) container-based workloads. Besides these cloud-native solutions, third-party security tools are also viable options. Larger organizations should compare their features and costs with those of the cloud-native service.

The real challenge with container security starts when CISOs demand full coverage for all cloud-based container workloads. Then, security architects need to analyze each relevant cloud service separately. In such an analysis, they should give three topics special attention:

- Hardening is about defining fine-grained rules based on a company’s concrete workload and cloud setup. When are publicly exposed VMs acceptable? When must API calls require authentication, and when must they not? It is a much more holistic aim than just ensuring the absence of obvious vulnerabilities.

- For vulnerability scanning of containers or container images, two patterns exist: repository scanning and runtime scanning. The latter examines only images that are in use and therefore at risk. The benefit: no false positives caused by vulnerabilities in images that were created but never deployed. However, runtime scanning is not available for every service. The fallback is scanning images in repositories such as GCP Artifact Registry, a pattern that also allows integrating vulnerability scanning into CI/CD pipelines.

- Threat detection requires analyzing the activities in containers and the underlying platform for suspicious behavior and anomalies. As with container vulnerability scanning, security architects should double-check whether such a feature is available (and active) for all container-based environments.
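The difference between repository and runtime scanning in the list above can be illustrated with a short sketch. It assumes that repository scan results and the set of currently deployed image digests are already available as plain Python data; the digests, the placeholder identifier `CVE-EXAMPLE-0001`, and the function `triage` are made up for illustration, while CVE-2021-44228 is the Log4j vulnerability mentioned earlier.

```python
LOG4SHELL = "CVE-2021-44228"

# Hypothetical repository scan results: image digest -> list of findings.
repository_findings = {
    "sha256:aaa": [LOG4SHELL],
    "sha256:bbb": ["CVE-EXAMPLE-0001"],  # placeholder ID; image was built but never deployed
    "sha256:ccc": [],
}

# Hypothetical set of image digests currently running in some service.
deployed_digests = {"sha256:aaa", "sha256:ccc", "sha256:ddd"}


def triage(findings, deployed):
    """Split repository findings by whether the affected image is actually in use."""
    # Findings that matter right now: vulnerable images that are deployed.
    at_risk = {d: cves for d, cves in findings.items() if d in deployed and cves}
    # Vulnerable images that exist only in the repository (lower priority).
    not_deployed = sorted(d for d, cves in findings.items() if d not in deployed and cves)
    # Deployed images that no scan has covered: a coverage gap, not a clean result.
    unscanned = sorted(deployed - set(findings))
    return at_risk, not_deployed, unscanned


if __name__ == "__main__":
    at_risk, not_deployed, unscanned = triage(repository_findings, deployed_digests)
    print("at risk:", at_risk)
    print("vulnerable but not deployed:", not_deployed)
    print("deployed but unscanned:", unscanned)
```

Reporting unscanned deployed images separately matters because an image missing from the scan results says nothing about whether it is vulnerable.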

In addition, third-party solutions for container security are also certainly valid options. However, comparing their features and costs with the cloud-native solutions is a must – at least for larger organizations.

Keeping Threats Contained

The horrifying reality for CISOs about container security is that container technology has already infiltrated most IT organizations. While CISOs and CIOs typically know their large Kubernetes clusters, they are less aware of the many additional, lesser-known cloud services executing containers. Since hackers aim to find unprotected services (rather than attacking the secured ones), organizations must analyze where they have container workloads and ensure all of them are secure.