Parker Hannifin’s Elvis Leka and Josh Coe detail how direct-to-chip liquid cooling, careful routing, low-restriction couplings and advanced monitoring and control can be combined to handle extreme heat loads while preserving rack density and system efficiency.

New AI data centres require more power and generate more heat; as a result, these new facilities need more cooling. The irony is that AI server racks are considerably denser than standard compute racks to handle the increased power requirements, so there is less space for cooling systems. High-density data centres also have many servers packed into a limited area, which means compact cooling systems are essential to avoid consuming valuable floor space and to allow for maximum rack density and future expansion.

In addition, high-density AI servers create concentrated heat zones known as hotspots. Effectively managing the heat created by hotspots requires placing cooling solutions directly next to them. Compact, industry-specific cooling systems deliver thermal management precisely where it is needed, driving efficiency in the ecosystem and preventing localised temperature issues, which can cause premature equipment failure.

The impact of AI

AI workloads require higher power density per rack, with some estimates suggesting it can be 4 to 100 times greater than in traditional data centres. A single ChatGPT query uses about 2.9 Wh compared to 0.3 Wh for a Google search. Training AI models requires even more power, with some racks consuming 80 kW or more, and newer chips potentially requiring up to 120 kW per rack.

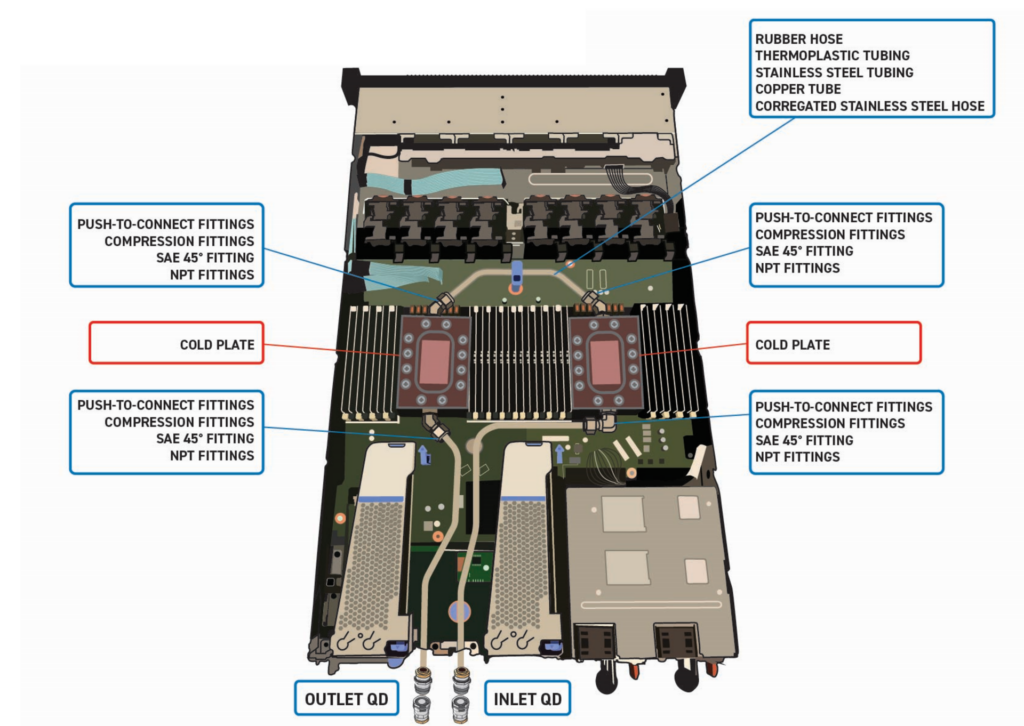

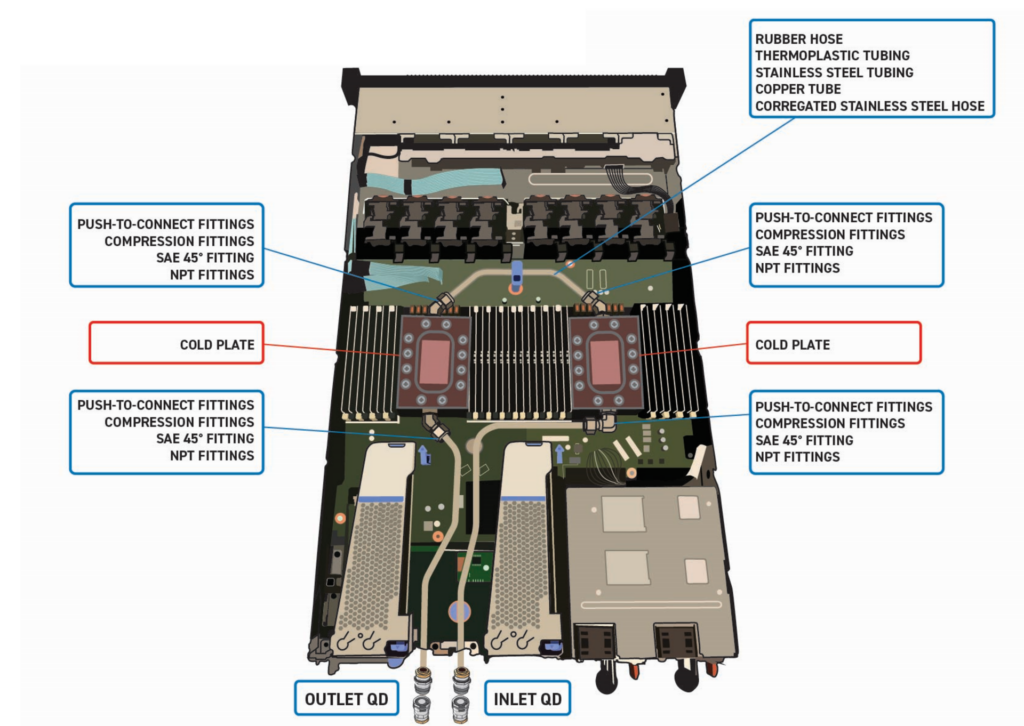

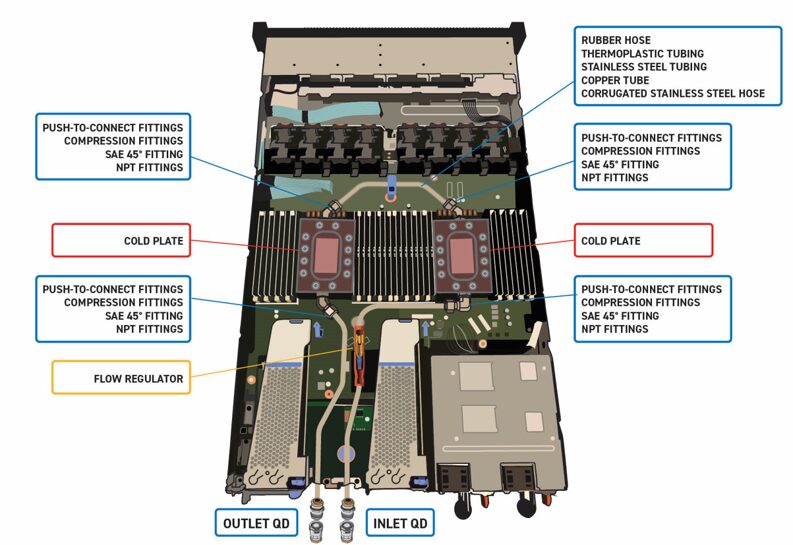

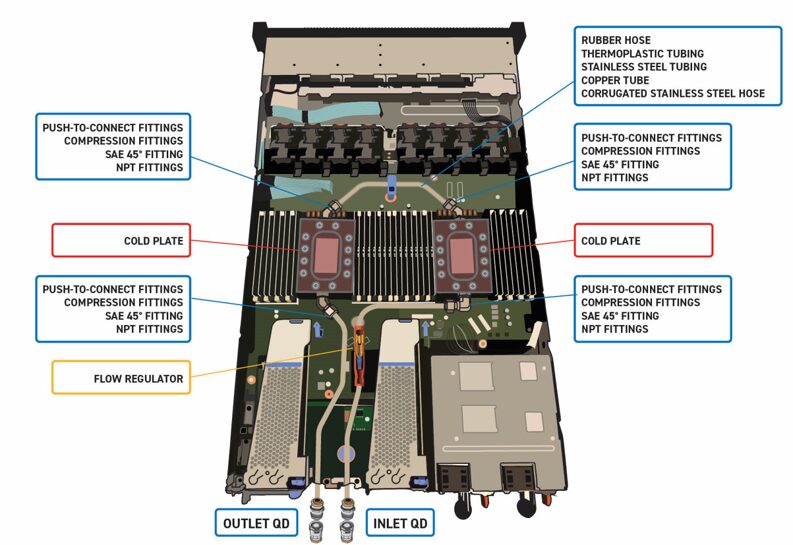

As the thermal design power (TDP) of the chips increases, space constraints arise from adding more advanced cooling systems. While cooling systems are essential for managing heat, the additional infrastructure required – including the cold plates, tubing, and coolant distribution units (CDUs) – can consume valuable space and complicate system designs.

Thermal management is essential for AI data centres because they use highly dense clusters of powerful chips (such as GPUs) that generate significantly more heat than traditional servers, pushing traditional cooling systems to their limits. This increased heat generation requires more powerful, energy-intensive cooling solutions. The greater density of AI workloads can lead to thermal spikes that overwhelm existing capacity, risking hardware degradation, performance throttling, and costly downtime.

The evolution of today’s more efficient data centre cooling systems

To develop efficient cooling system designs, a solid knowledge of fluid transfer systems is required. While air-cooled systems previously dominated the industry for thermal management, power requirements have increased with newer chips that have increasingly higher TDP.

As a result, the industry is increasing investment in liquid cooling options, such as immersion cooling and two-phase cooling. Benefits of liquid cooling include more efficient heat transfer, lower cost and less environmental impact. Liquid cooling significantly reduces energy use, which reduces total operating expenses and creates a more sustainable data centre. In addition, it reduces noise and can extend hardware life.

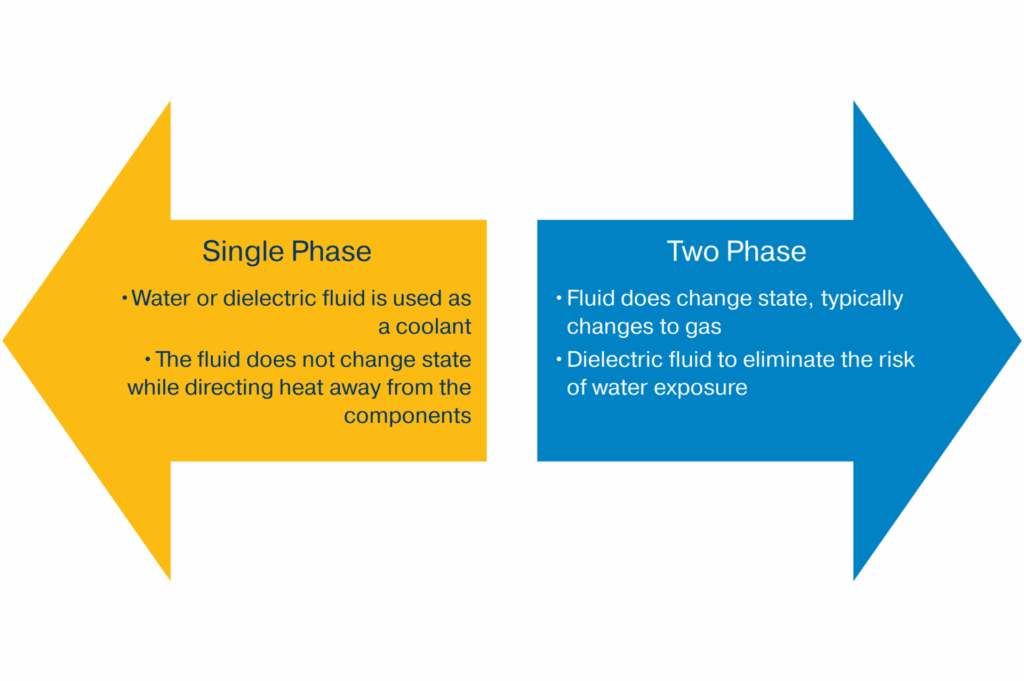

Direct-to-chip liquid cooling can be categorised into two main types: single-phase and two-phase. Both use a cold plate heat exchanger mounted directly onto the power-dense components (CPU, GPU, etc.).

With single-phase cooling, a coolant such as water glycol (a mixture of water and propylene glycol) or de-ionised water with additives that inhibit biological growth and limit corrosion is circulated through the cold plate by coolant distribution units (CDUs). The coolant absorbs heat as it passes through the cold plate heat exchanger, which is in direct contact with the GPUs and CPUs to promote effective heat transfer. This process of absorption and direct cooling is also known as sensible heat transfer.

Although single-phase liquid cooling systems are sufficient for today’s data centres, they may struggle to meet the requirements of future generations of AI-rich data centres, which is why two-phase options are gaining popularity.

In two-phase cooling, heat absorption primarily occurs through latent heat during the phase change of the refrigerant. This process continuously cycles the refrigerant within a sealed, closed-loop system using a small pump to deliver just enough liquid refrigerant to the evaporator. Typically, a series of multiple cold plates is optimal to acquire the heat from the IT equipment. The liquid refrigerant begins to boil and maintains a cool, uniform temperature at the surface of the chips. The two-phase refrigerant is then transferred to a heat exchanger where it rejects the heat to the facility cooling loop.
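The difference between sensible and latent heat transfer can be illustrated with a rough flow-rate estimate. The sketch below is not from the authors: it assumes a hypothetical 1 kW chip, plain water with a specific heat of about 4186 J/(kg·K) and a 10 K temperature rise for the single-phase case, and an illustrative refrigerant with a placeholder latent heat of 150 kJ/kg of which 70% evaporates in the cold plate. Real coolants and refrigerants will have different properties.

```python
def sensible_mass_flow(heat_w, cp_j_per_kg_k, delta_t_k):
    """Single-phase: Q = m_dot * cp * dT, solved for m_dot (kg/s)."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def latent_mass_flow(heat_w, h_fg_j_per_kg, vapour_fraction):
    """Two-phase: Q = m_dot * x * h_fg, solved for m_dot (kg/s)."""
    return heat_w / (h_fg_j_per_kg * vapour_fraction)


HEAT_LOAD_W = 1000.0       # hypothetical chip heat load
WATER_CP = 4186.0          # J/(kg*K), approximate value for water
COOLANT_RISE_K = 10.0      # assumed temperature rise across the cold plate
REFRIGERANT_H_FG = 150_000.0  # J/kg, placeholder latent heat (assumption)
EXIT_VAPOUR_FRACTION = 0.7    # assumed fraction evaporated at the outlet

single_phase_flow = sensible_mass_flow(HEAT_LOAD_W, WATER_CP, COOLANT_RISE_K)
two_phase_flow = latent_mass_flow(HEAT_LOAD_W, REFRIGERANT_H_FG,
                                  EXIT_VAPOUR_FRACTION)

print(f"single-phase flow: {single_phase_flow * 1000:.1f} g/s")
print(f"two-phase flow:    {two_phase_flow * 1000:.1f} g/s")
```

Under these assumed numbers the two-phase loop needs a smaller mass flow for the same heat load, which is consistent with the "small pump" described above; the actual ratio depends entirely on the fluids chosen.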

Two-phase systems are ideal for high-power electronics where heat loads have moved beyond what traditional air and water (single-phase) cooling systems can effectively manage. Direct-to-chip two-phase cooling is growing in the market because of its capability to transfer high heat loads and disperse latent heat created by electronic components with increased power densities. This highly efficient design simplifies plumbing and reduces the weight of the system, giving it an excellent thermal performance/cost ratio.

However, direct-to-chip pumped two-phase systems do have their drawbacks, including the fact that they often require a larger upfront investment and specialised training for maintenance technicians. There is also the technical challenge of designing a system that controls flow and pressure across cooling loops.

The need for more advanced monitoring and control systems

Newer two-phase liquid cooling systems require more sophisticated monitoring and control systems because of their efficiency-driven, yet sensitive, nature, which hinges on precise control of temperature, pressure, and flow to prevent overheating, leaks, and component failure. The process of changing from liquid to vapour to absorb heat is highly efficient but is sensitive to flow changes, which can cause issues like dry-out or vapour blockages if not properly managed. That is why these advanced systems require the use of sensors and AI/ML-driven analytics to perform real-time condition monitoring, dynamic adjustments to offset workload fluctuations, and predictive maintenance.
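As a much-simplified illustration of a dynamic adjustment, the sketch below estimates the vapour fraction leaving a cold plate from the measured heat load and refrigerant flow, and requests more flow when the estimate approaches dry-out. Everything here is an assumption for illustration: the function names, the 150 kJ/kg latent heat, the 0.8 quality limit, and the premise that liquid enters the plate at saturation. A production controller would rely on real sensor data and far richer models.

```python
def exit_vapour_quality(heat_w, mass_flow_kg_s, h_fg_j_per_kg):
    """Estimated vapour fraction at the outlet, assuming saturated liquid inlet."""
    return heat_w / (mass_flow_kg_s * h_fg_j_per_kg)


def check_loop(heat_w, mass_flow_kg_s, h_fg_j_per_kg=150_000.0, max_quality=0.8):
    """Return (status, recommended_flow) for one cold-plate loop.

    max_quality is a hypothetical safety limit below full evaporation (1.0).
    """
    quality = exit_vapour_quality(heat_w, mass_flow_kg_s, h_fg_j_per_kg)
    if quality > max_quality:
        # Flow needed to bring the outlet back to the quality limit.
        needed = heat_w / (h_fg_j_per_kg * max_quality)
        return "increase_flow", needed
    return "ok", mass_flow_kg_s


# Workload spike: same flow, higher heat load.
status_idle, flow_idle = check_loop(heat_w=700.0, mass_flow_kg_s=0.008)
status_peak, flow_peak = check_loop(heat_w=1000.0, mass_flow_kg_s=0.008)
print(status_idle, flow_idle)
print(status_peak, round(flow_peak, 5))
```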

Since efficiency is a priority when designing a cooling system, it is important to compute the total energy usage by looking at Power Usage Effectiveness (PUE) at all levels (server blade level, IT rack level, and data centre facility level). A PUE value of 1.0 indicates that all power is used by IT equipment, while a higher PUE indicates that more energy is being spent on cooling and power delivery. Lowering PUE values across all levels helps improve overall energy efficiency and sustainability.

The facility PUE is the total energy used by the entire data centre (including cooling, lighting, and power delivery) divided by the energy used by the IT equipment. IT equipment PUE at the rack or server level measures the power used by the actual equipment against the total energy delivered to that equipment. To get an overall energy usage picture, you can multiply the PUE at each level by the energy consumption at that level, starting with the server blade and working your way up to the facility’s total energy consumption.

To calculate the blade PUE, divide the power delivered to the blade by the power used by the IT equipment on the blade, so that the ratio follows the same total-over-IT convention as the facility PUE. Then multiply the blade PUE by the total power consumed by the IT equipment on that blade to calculate the total blade energy usage. You can follow the same procedure to calculate total rack energy usage and total facility energy usage.
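The level-by-level procedure can be sketched in a few lines. The example takes PUE at every level as the power delivered to that level divided by the power used by its IT equipment, matching the facility-level definition. The power figures are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def pue(delivered_w, it_w):
    """Power Usage Effectiveness: total power delivered / IT power."""
    if it_w <= 0:
        raise ValueError("IT power must be positive")
    return delivered_w / it_w


# (level, power delivered in W, IT power in W) -- illustrative values only
levels = [
    ("blade", 1_050.0, 1_000.0),
    ("rack", 44_000.0, 40_000.0),
    ("facility", 1_300_000.0, 1_000_000.0),
]

report = {}
for name, delivered_w, it_w in levels:
    ratio = pue(delivered_w, it_w)
    # Multiplying the PUE by the IT power recovers the total usage at that level.
    report[name] = {"pue": ratio, "total_w": ratio * it_w}
    print(f"{name:9s} PUE = {ratio:.2f}, total = {ratio * it_w:,.0f} W")
```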

To maximise efficiency, it is important to evaluate the size requirements for all components in the cooling system. Maximising the efficiency of a two-phase liquid cooling system involves precisely sizing each component to match the thermal load, minimising energy consumption, and optimising heat transfer. Over-sizing components leads to wasted energy and higher costs, while under-sizing can cause performance issues or system failure.

Minimising pressure drop

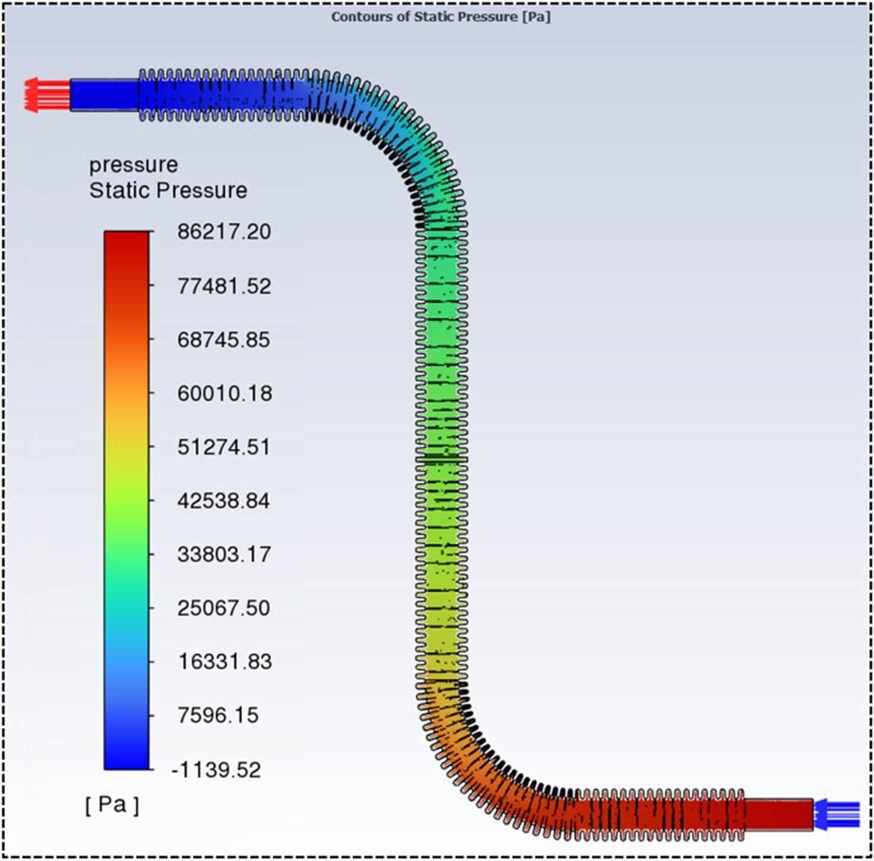

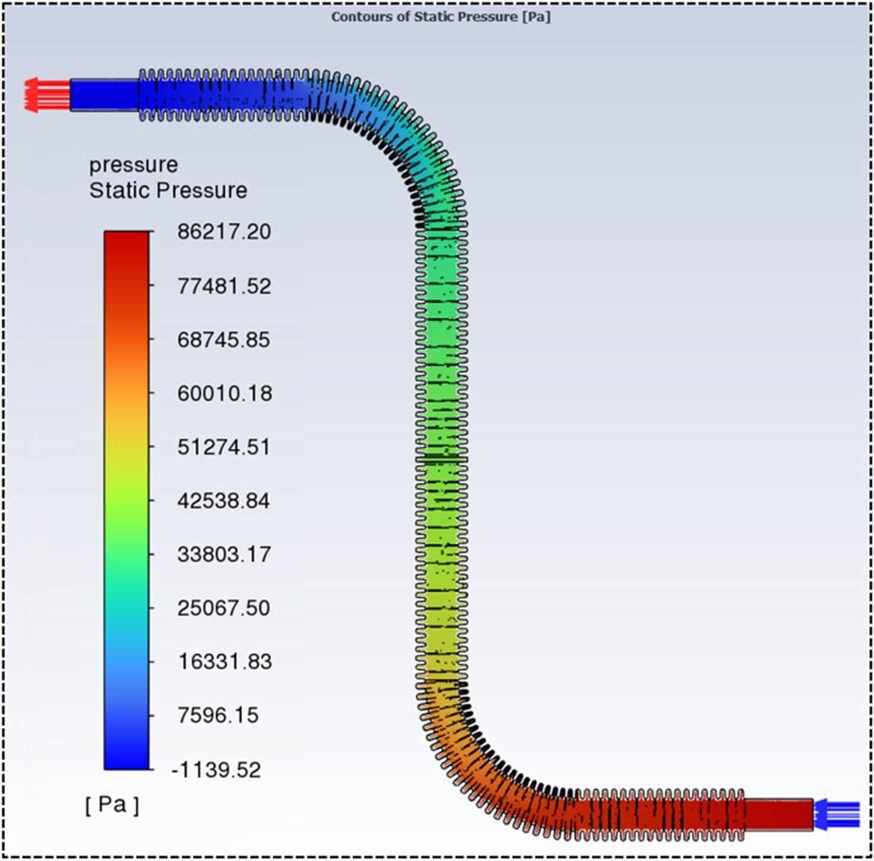

Pressure drop is the decrease in fluid pressure as it flows through a system. This decrease is caused by resistance from friction, pipe fittings, and obstructions. It is a crucial factor in system design, as it determines the energy required to move fluids and can affect performance if not accounted for.

Excessive pressure drop leads to reduced system efficiency, increased energy costs, and accelerated equipment wear. To overcome the loss, pumps must work harder and consume more power, raising operational expenses and lowering productivity. When refrigerant is used as the cooling medium, pressure drop can cause the fluid to vaporise, reducing mass flow. Additionally, high pressure drops can cause equipment damage and premature failure.
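The link between pressure drop and pump energy follows from the standard relation that hydraulic power equals volumetric flow multiplied by pressure rise, divided by pump efficiency to obtain input power. The sketch below applies it to assumed figures (60 L/min, 50% pump efficiency, and two hypothetical loop pressure drops); none of these values come from the article.

```python
def pump_input_power_w(flow_m3_s, pressure_drop_pa, efficiency):
    """Pump input power (W) = flow (m^3/s) * pressure drop (Pa) / efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return flow_m3_s * pressure_drop_pa / efficiency


FLOW_M3_S = 60.0 / 60_000.0   # 60 L/min expressed in m^3/s (assumed)
PUMP_EFFICIENCY = 0.5         # assumed overall pump efficiency

power_high = pump_input_power_w(FLOW_M3_S, 150_000.0, PUMP_EFFICIENCY)  # 150 kPa
power_low = pump_input_power_w(FLOW_M3_S, 100_000.0, PUMP_EFFICIENCY)   # 100 kPa

print(f"150 kPa loop: {power_high:.0f} W")
print(f"100 kPa loop: {power_low:.0f} W")
```

At a fixed flow rate, pump power scales linearly with pressure drop, so every kilopascal removed from the loop is recovered directly as pump energy.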

Some of the more common sources of pressure drop in data centre liquid cooling systems include inefficient routings, restricted bore connections, hoses, fittings and cold plates, as well as internal obstructions such as deposits or dirt and flow restrictors. The total pressure drop is the sum of the pressure drops from all individual components in the cooling loop.
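A simple pressure-drop budget makes the summation concrete. The component names and kPa values below are hypothetical placeholders for a single flow path through one loop; in practice they would come from manufacturer data at the design flow rate.

```python
# Hypothetical per-component pressure drops (kPa) along one flow path.
loop_budget_kpa = {
    "cold_plate": 35.0,
    "quick_disconnects": 2 * 12.0,  # one supply-side and one return-side coupling
    "hoses": 8.0,
    "manifold": 6.0,
    "fittings": 5.0,
}

total_kpa = sum(loop_budget_kpa.values())
largest = max(loop_budget_kpa, key=loop_budget_kpa.get)

for name, drop in sorted(loop_budget_kpa.items(), key=lambda kv: -kv[1]):
    print(f"{name:18s} {drop:5.1f} kPa  ({drop / total_kpa:5.1%} of total)")
print(f"total: {total_kpa:.1f} kPa, largest contributor: {largest}")
```

Listing each contributor's share of the total shows where a design change would have the most effect.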

Looking at the potential impact of various components on total pressure drop, it is easy to see how the accumulation of various factors can create a substantial energy drain. Consider the following possible impacts:

- Hoses, tubing, and fittings – The internal design and connections of hoses, tubes, and fittings can create resistance to flow. Ensure all component materials are compatible with the coolant, operating temperatures, and pressures of the system to prevent corrosion and maintain structural integrity.

- Cold plates – The fluid must pass through the internal channels of the cold plates, which can cause a significant pressure drop depending on their design.

- Connectors – Quick-disconnects and other couplings are designed to be reliable and easy to use; however, the internal valve mechanism makes quick-disconnects one of the main contributors to pressure drop in a system. That is why companies like Parker manufacture flat-face, dry-break couplings that feature high flow rates and low pressure drop.

- Hose and tubing routing – The overall layout and dimensions of the fluid transfer system contribute to the total pressure drop.

- Gasketing and seals – In some components, the gaskets can create a mechanical restriction that results in a pressure drop.

- Manifold considerations – Properly designed manifolds ensure consistent flow, reduce pump load, and maintain cooling efficiency across the rack. Manifolds should be designed with parallel flow paths to distribute coolant evenly and reduce the overall pressure drop, ensuring all connected servers receive consistent flow.

Strategies for minimising pressure drop involve optimising the system’s design, component selection (including hose and tube sizing, as well as choosing the right material), and maintenance practices. Here are a few helpful tips to keep in mind:

- Use parallel flow configuration – Arranging the cold plates or cooling modules in a parallel circuit distributes the fluid into multiple paths, significantly reducing the pressure drop compared to a series configuration. This design also prevents downstream components from being preheated by the upstream fluid.

- Minimise bends and shorten pipe length – Excessive 90-degree bends, twists, and long pipe runs create turbulence and friction, leading to significant pressure loss. Design piping layouts to be as compact and straight as possible.

- Increase hose and tube diameter – Larger diameter hoses and tubing offer less resistance to fluid flow, reducing friction and pressure drop. However, this must be balanced with space and cost constraints.

- Create smooth internal surfaces – Rough internal pipe surfaces increase friction. Using pipes with polished, smooth internal finishes minimises drag on the fluid.

- Use low-viscosity coolant – The viscosity of the cooling fluid directly affects friction. Using a fluid with a lower viscosity will reduce the resistance to flow and the resulting pressure drop.

- Specify low-restriction fittings and quick-disconnects – Generic quick-disconnects can cause unnecessary pressure loss. Instead, use specialised, high-flow quick-disconnect fittings that feature advanced internal designs and optimised flow paths to minimise restriction.

- Reduce components in the flow path – Every valve, flow meter, and coupling adds resistance. Minimise the number of these components to create a more streamlined flow path.
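Several of these tips can be checked with textbook relations. The sketch below is an illustration under stated assumptions, not a design tool: it uses the Darcy-Weisbach equation for a straight, smooth tube with water-like properties (density 998 kg/m³, viscosity 1.0e-3 Pa·s), the laminar friction factor 64/Re or the Blasius correlation for smooth turbulent flow, and a simple quadratic loss model (pressure drop = k × flow²) for identical cold plates compared at the same total flow. Bends, fittings, and two-phase effects are ignored.

```python
import math


def tube_pressure_drop(flow_m3_s, diameter_m, length_m,
                       density=998.0, viscosity=1.0e-3):
    """Pressure drop (Pa) along a straight, smooth tube (Darcy-Weisbach)."""
    if flow_m3_s <= 0:
        return 0.0
    area = math.pi * diameter_m ** 2 / 4.0
    velocity = flow_m3_s / area
    reynolds = density * velocity * diameter_m / viscosity
    if reynolds < 2300.0:
        friction = 64.0 / reynolds             # laminar flow
    else:
        friction = 0.316 * reynolds ** -0.25   # Blasius, smooth turbulent flow
    return friction * (length_m / diameter_m) * density * velocity ** 2 / 2.0


def series_drop(k, total_flow, n):
    """n identical plates in series: each sees the full flow, drops add up."""
    return n * k * total_flow ** 2


def parallel_drop(k, total_flow, n):
    """n identical plates in parallel: each sees 1/n of the flow."""
    return k * (total_flow / n) ** 2


flow = 10.0 / 60_000.0  # 10 L/min in m^3/s (assumed)

dp_10mm = tube_pressure_drop(flow, 0.010, 2.0)       # 10 mm bore, 2 m run
dp_13mm = tube_pressure_drop(flow, 0.013, 2.0)       # larger bore, same run
dp_10mm_long = tube_pressure_drop(flow, 0.010, 4.0)  # same bore, doubled run

# k = 1.0 is an arbitrary loss coefficient; only the ratio matters here.
ratio = series_drop(1.0, flow, 4) / parallel_drop(1.0, flow, 4)

print(f"10 mm tube, 2 m: {dp_10mm / 1000:.2f} kPa")
print(f"13 mm tube, 2 m: {dp_13mm / 1000:.2f} kPa")
print(f"10 mm tube, 4 m: {dp_10mm_long / 1000:.2f} kPa")
print(f"series / parallel drop for 4 plates: {ratio:.0f}x")
```

Under the quadratic loss assumption, four plates in series lose 4³ = 64 times more pressure than the same plates in parallel at equal total flow, which is why the parallel arrangement and larger bores appear at the top of the list.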

A well-designed system with low pressure drop prevents localised high-pressure areas that can lead to leaks or uneven cooling. This is especially important for high-density servers, where one component failing could affect others. In addition, reduced pressure drop ensures the coolant can be distributed efficiently to all servers. This enables the cooling system to effectively manage the heat generated by high-performance computing (HPC) and AI workloads. A reliable system with fewer pressure-related issues requires less frequent maintenance, leading to lower system operating costs.

Conclusion

High-density AI data centres require more innovative cooling strategies because of their significantly higher power requirements. At the same time, these newer cooling solutions must be compact because of higher rack and chip densities and the addition of cold plates.

Over the years, cooling technologies have evolved to better meet increased power demands – moving from air cooling to single-phase liquid cooling to single-phase and air hybrid cooling to two-phase liquid cooling strategies.

Fluid performance is improved with constant flow rates, but pressure drops threaten a system’s ability to maintain a consistent flow. Design configuration and material selection, including the type of connections and sealants chosen, all affect pressure drop. There are several proven strategies for reducing pressure drop, which are necessary to increase energy efficiency and extend the life of system components.

Getting all the additional cooling components, along with the servers’ power and data cabling, to fit in the same, or an even smaller, footprint has become exponentially more complex. That is why precise planning is necessary when designing today’s latest cooling systems. System-built solutions utilising compact designs and optimisation of flow rates and routings lead to improved component lifespans and reduced overall system operating costs.