Caltech neuroscientists are making promising progress toward showing that a device known as a brain–machine interface (BMI), which they developed to implant into the brains of patients who have lost the ability to speak, could one day help all such patients communicate simply by thinking, without speaking or miming.

In 2022, the team reported that their BMI had been successfully implanted and used by a patient to communicate unspoken words. Now, reporting in the journal Nature Human Behaviour, the scientists have shown that the BMI has worked successfully in a second human patient.

“We are very excited about these new findings,” says Richard Andersen, the James G. Boswell Professor of Neuroscience and director and leadership chair of the Tianqiao and Chrissy Chen Brain–Machine Interface Center at Caltech, who described the earlier research in a recent public lecture at Caltech. “We reproduced the results in a second individual, which means that this is not dependent on the particulars of one person’s brain or where exactly their implant landed. This is indeed more likely to hold up in the larger population.”

BMIs are being developed and tested to help patients in a number of ways. For example, some work has focused on developing BMIs that can control robotic arms or hands. Other groups have had success at predicting participants’ speech by analyzing brain signals recorded from motor areas when a participant whispered or mimed words.

But predicting what somebody is thinking, that is, detecting their internal dialogue, is much more difficult because it does not involve any movement, explains Sarah Wandelt, Ph.D., lead author on the new paper, who is now a neural engineer at the Feinstein Institutes for Medical Research in Manhasset, New York.

The new research is the most accurate yet at predicting internal words. In this case, brain signals were recorded from single neurons in a brain area called the supramarginal gyrus, located in the posterior parietal cortex (PPC). The researchers had found in a previous study that this brain area represents spoken words.

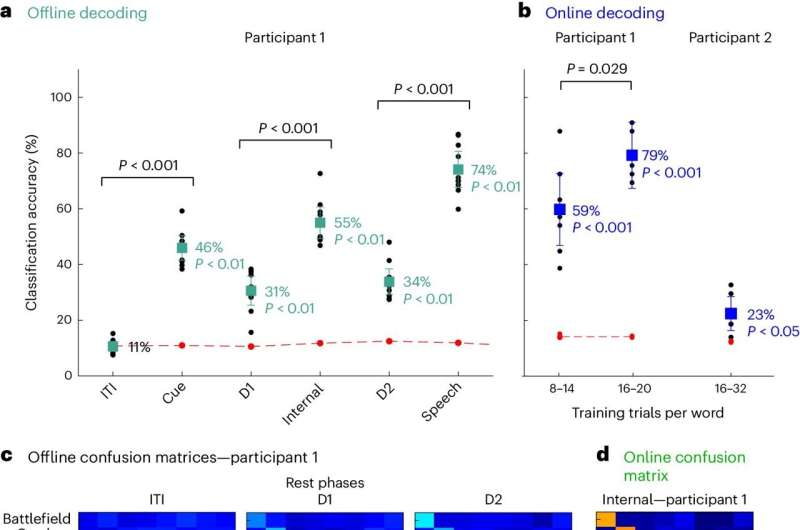

In the current study, the researchers first trained the BMI device to recognize the brain patterns produced when certain words were spoken internally, or thought, by two tetraplegic participants. This training period took only about 15 minutes. The researchers then flashed a word on a screen and asked the participant to “say” the word internally. The results showed that the BMI algorithms were able to predict the eight words tested, including two nonsensical words, with an average accuracy of 79% and 23% for the two participants, respectively.
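To put these percentages in context: with eight candidate words, a decoder that guessed uniformly at random would be right about 1/8 = 12.5% of the time, assuming each word is cued equally often. The short Python sketch below is only an illustration, not the study's analysis pipeline. It computes decoding accuracy as the fraction of trials where the decoded word matches the cued word and compares the two reported averages with that chance level. The function names and the toy trial labels are hypothetical.

```python
from collections.abc import Sequence


def chance_level(n_classes: int) -> float:
    """Accuracy expected from uniform random guessing among n_classes options."""
    if n_classes < 1:
        raise ValueError("n_classes must be at least 1")
    return 1.0 / n_classes


def decoding_accuracy(cued: Sequence[str], predicted: Sequence[str]) -> float:
    """Fraction of trials in which the decoded word equals the cued word."""
    if len(cued) != len(predicted):
        raise ValueError("cued and predicted must have the same length")
    if not cued:
        raise ValueError("need at least one trial")
    hits = sum(c == p for c, p in zip(cued, predicted))
    return hits / len(cued)


if __name__ == "__main__":
    # Hypothetical toy trials for illustration only; these are NOT data from the study.
    cued = ["word_a", "word_b", "word_c", "word_d"]
    predicted = ["word_a", "word_b", "word_e", "word_d"]
    print(f"toy accuracy = {decoding_accuracy(cued, predicted):.2f}")

    # Eight words were tested, so uniform guessing gives 1/8.
    chance = chance_level(8)
    # Average accuracies as reported in the article, expressed as multiples of chance.
    for label, reported in [("participant 1", 0.79), ("participant 2", 0.23)]:
        print(f"{label}: {reported:.2f} vs. chance {chance:.3f} "
              f"({reported / chance:.1f}x chance)")
```

A ratio to chance is only a rough reading aid. Whether an accuracy is meaningfully above chance depends on the number of trials and on a proper statistical test, neither of which this sketch attempts.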

“Since we were able to find these signals in this particular brain region, the PPC, in a second participant, we can now be sure that this area contains these speech signals,” says David Bjanes, a postdoctoral scholar research associate in biology and biological engineering and an author of the new paper. “The PPC encodes a large variety of different task variables. You could imagine that some words could be tied to other variables in the brain for one person. The likelihood of that being true for two people is much, much lower.”

The work is still preliminary but could help patients with brain injuries, paralysis, or diseases, such as amyotrophic lateral sclerosis (ALS), that affect speech. “Neurological disorders can lead to complete paralysis of voluntary muscles, resulting in patients being unable to speak or move, but they are still able to think and reason. For that population, an internal speech BMI would be incredibly helpful,” Wandelt says.

The researchers point out that the BMIs cannot be used to read people’s minds: the device would need to be trained on each person’s brain separately, and it works only when a person focuses on a particular word.

Additional authors on the paper, “Representation of internal speech by single neurons in human supramarginal gyrus,” include Kelsie Pejsa, a lab and clinical studies manager at Caltech, and Brian Lee and Charles Liu, both visiting associates in biology and biological engineering from the Keck School of Medicine of USC. Bjanes and Liu are also affiliated with the Rancho Los Amigos National Rehabilitation Center in Downey, California.

More information:

Sarah K. Wandelt et al, Representation of internal speech by single neurons in human supramarginal gyrus, Nature Human Behaviour (2024). DOI: 10.1038/s41562-024-01867-y

Citation:

Brain-machine interface device predicts internal speech in second patient (2024, May 15)

retrieved 16 May 2024

from https://techxplore.com/news/2024-05-brain-machine-interface-device-internal.html
