
Getting enterprise data into large language models (LLMs) is critical to the success of enterprise AI deployments.

That’s where retrieval augmented generation (RAG) fits in, an area where many vendors have offered various solutions. Today at AWS re:Invent 2024, the company announced a series of new services and updates designed to make it easier for enterprises to get both structured and unstructured data into RAG pipelines. Making structured data available for RAG requires more than just looking up a single row in a table. It involves translating natural language queries into complex SQL queries that filter, join tables and aggregate data. The challenges are further compounded for unstructured data, where by definition there is no structure to the data.

To help solve these challenges, AWS announced new services for structured data retrieval support, ETL (extract, transform and load) for unstructured data, data automation and knowledge base support.

“Retrieval augmented generation (RAG) is a very popular technique for customizing your data, but one of the challenges with retrieval augmented generation is it’s historically been mostly for text data,” Swami Sivasubramanian, VP of AI and Data at AWS, told VentureBeat. “And if you see enterprises, most of the data, especially operational, is sitting in data lakes and data warehouses, and that has never been ready for RAG, per se.”

Enhancing structured data retrieval support with Amazon Bedrock Knowledge Bases

Why isn’t structured data ready for RAG? Sivasubramanian provided several scenarios.

“To build a highly accurate, secure system, you’ve got to actually understand the schema, build a custom schema embedding, and then actually understand the historical query log, and then keep up with the changes and schemas,” Sivasubramanian said.

During his keynote at re:Invent, Sivasubramanian explained that the Amazon Bedrock Knowledge Bases service is a fully managed RAG capability that enables enterprises to customize responses with contextual and relevant data.

“It automates the entire RAG workflow, removing the need for you to write custom code to integrate your data sources and manage queries,” he said.

With structured data retrieval support in Amazon Bedrock Knowledge Bases, Sivasubramanian said that AWS is providing a fully managed RAG solution. It enables enterprises to natively query all their structured data to generate results for generative AI applications. Knowledge Bases will automatically generate and execute the SQL queries to retrieve enterprise data and then enrich the model’s responses.
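The general pattern described here (turn a question into SQL, run it, and pass the rows to the model as context) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Amazon Bedrock Knowledge Bases works internally: the tables, the `question_to_sql` stub (which stands in for model-generated SQL) and the prompt layout are all hypothetical.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create a tiny in-memory database (hypothetical schema and data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'EMEA'), (2, 'APAC');
        INSERT INTO orders VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 2, 70.0);
        """
    )
    return conn


def question_to_sql(question: str) -> str:
    """Hypothetical stand-in for the step where a model writes the SQL.

    A real system would generate this from the question and the schema;
    here the query is hard-coded so the sketch stays runnable.
    """
    return (
        "SELECT c.region, SUM(o.amount) AS total "
        "FROM orders o JOIN customers c ON c.id = o.customer_id "
        "GROUP BY c.region ORDER BY c.region"
    )


def retrieve_context(conn: sqlite3.Connection, question: str) -> list[tuple]:
    """Run the generated SQL (a join plus an aggregate) and return the rows."""
    return conn.execute(question_to_sql(question)).fetchall()


def build_prompt(question: str, rows: list[tuple]) -> str:
    """Attach the retrieved rows to the question as context for the model."""
    facts = "\n".join(f"- {region}: {total:.2f}" for region, total in rows)
    return f"Question: {question}\nRetrieved data:\n{facts}"


if __name__ == "__main__":
    db = build_demo_db()
    q = "What is the total order amount per region?"
    print(build_prompt(q, retrieve_context(db, q)))
```

The join and the `GROUP BY` are the part that a single-row lookup cannot express, which is why structured data needs generated SQL rather than plain text retrieval.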

“The cool thing is, it also adjusts to your schema and data, and it learns from your query patterns and provides the customization options for enhanced accuracy,” he said. “Now with the ability to easily access structured data for your RAG, you’ll generate more powerful and intelligent gen AI applications in the enterprise.”

GraphRAG: Bringing it all together in a knowledge graph

Another key enterprise AI challenge that AWS is looking to solve for RAG is improving accuracy when drawing on more data sources. That’s the challenge that the new GraphRAG capability aims to solve.

“One of the big challenges in enterprises is to piece apart distinct pieces of data and show how they’re connected in order to build explainable RAG systems,” Sivasubramanian said. “This is where knowledge graphs are super important.”

Sivasubramanian explained that knowledge graphs create relationships across multiple data sources by connecting different pieces of information.

“When these relationships are converted into graph embeddings for your gen AI applications, the system can easily traverse this graph and retrieve these connections to gather a holistic view of your customer data,” he said.

The new GraphRAG capabilities in Amazon Bedrock Knowledge Bases automatically generate graphs using the Amazon Neptune graph database service. Sivasubramanian noted that it links the relationships between various data sources, creating more comprehensive gen AI applications without the need for any graph expertise.
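To make the idea of traversing a graph to gather connected facts concrete, here is a minimal sketch in plain Python. It is an assumption-laden illustration, not the GraphRAG or Amazon Neptune implementation: the graph is a hand-written dictionary, the node and relation names are hypothetical, and it uses a simple breadth-first walk rather than the graph embeddings mentioned in the keynote.

```python
from collections import deque

# Each node maps to a list of (relation, target) edges. All names are hypothetical.
Graph = dict[str, list[tuple[str, str]]]

GRAPH: Graph = {
    "customer:42": [("placed", "order:7"), ("opened", "ticket:3")],
    "order:7": [("contains", "product:widget")],
    "ticket:3": [("about", "product:widget")],
    "product:widget": [],
}


def connected_facts(graph: Graph, start: str, max_hops: int = 2) -> list[tuple[str, str, str]]:
    """Collect (source, relation, target) triples within max_hops of start."""
    facts: list[tuple[str, str, str]] = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand nodes at the hop limit
        for relation, target in graph.get(node, []):
            facts.append((node, relation, target))
            if target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return facts


if __name__ == "__main__":
    for source, relation, target in connected_facts(GRAPH, "customer:42"):
        print(f"{source} --{relation}--> {target}")
```

Starting from a single customer, two hops are enough to link an order and a support ticket to the same product, which is the kind of cross-source connection a retrieval step could hand to the model as context.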

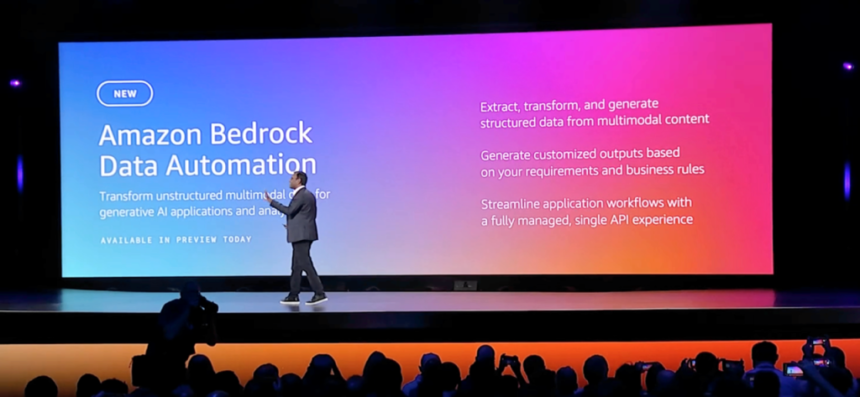

Tackling the challenges of unstructured data with Amazon Bedrock Data Automation

Another critical enterprise data challenge is the issue of unstructured data. It’s a problem that many vendors are trying to solve, including startups like Anomalo.

When data, be it a PDF, audio or video file, needs to be indexed for RAG use cases, having some form of understanding of what’s in the data is critical to making the data useful.

“Unfortunately, unstructured data is difficult to extract and it needs to be processed and transformed to make it ready,” Sivasubramanian said.

The new Amazon Bedrock Data Automation technology is AWS’ answer to that challenge. Sivasubramanian explained that the feature will automatically transform unstructured multimodal content into structured data to power gen AI applications.

“I like to think of this as a gen AI powered ETL [Extract, Transform and Load] for unstructured data,” he said.

Amazon Bedrock Data Automation will automatically extract, transform and process an enterprise’s multimodal content at scale. He noted that with a single API, an enterprise can generate custom outputs aligned to data schemas and parse multimodal content for gen AI applications.
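The extract, transform and load framing can be illustrated with a small Python sketch that turns loose text into a record matching a target schema. Everything in it is an assumption made for illustration: the `Invoice` schema, the sample text and the regex-based `extract` step (a stand-in for the model-driven extraction the service performs) are hypothetical, and no Amazon Bedrock Data Automation API is used.

```python
import re
from dataclasses import dataclass


@dataclass
class Invoice:
    """Hypothetical target schema that the output must align to."""
    invoice_id: str
    total: float
    currency: str


def extract(raw_text: str) -> dict[str, str]:
    """Pull 'Label: value' pairs out of free text.

    Stand-in for model-based extraction from a PDF, audio or video file.
    """
    fields: dict[str, str] = {}
    for line in raw_text.splitlines():
        match = re.match(r"\s*([A-Za-z ]+?)\s*:\s*(.+?)\s*$", line)
        if match:
            key = match.group(1).lower().replace(" ", "_")
            fields[key] = match.group(2)
    return fields


def transform(fields: dict[str, str]) -> Invoice:
    """Coerce the extracted strings into the typed schema."""
    amount, currency = fields["total"].split()
    return Invoice(invoice_id=fields["invoice_id"], total=float(amount), currency=currency)


def load(records: list[Invoice], store: list[Invoice]) -> None:
    """Append records to a destination (a list here; a database in practice)."""
    store.extend(records)


if __name__ == "__main__":
    document = "Invoice ID: INV-001\nTotal: 129.50 USD\nThanks for your business."
    warehouse: list[Invoice] = []
    load([transform(extract(document))], warehouse)
    print(warehouse)
```

Once content is in a typed record like this, it can be queried or indexed the same way as any other structured data.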

“With these updates, we’re empowering you to harness all your data to build contextually more relevant gen AI applications,” he said.
