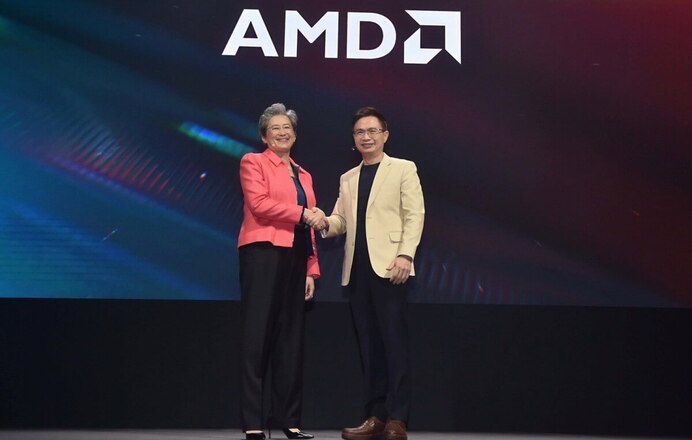

At Computex 2024, AMD (NASDAQ: AMD) made a major impact with its announcement of the expanded AMD Instinct accelerator family. The keynote, delivered by Chair and CEO Dr. Lisa Su, highlighted a comprehensive multiyear roadmap that promises to deliver industry-leading AI performance and memory capabilities on an annual basis.

The newly updated roadmap kicks off with the AMD Instinct MI325X accelerator, which will be available in the fourth quarter of 2024. It will be followed by the AMD Instinct MI350 series, slated for launch in 2025. The MI350 series will feature the new AMD CDNA 4 architecture, which AMD expects to deliver up to a 35-fold increase in AI inference performance compared with the previous MI300 series based on the AMD CDNA 3 architecture.

By 2026, AMD plans to roll out the MI400 series, powered by the forthcoming AMD CDNA ‘Next’ architecture, further improving performance.

Brad McCredie, Corporate Vice President of Data Center Accelerated Compute at AMD, emphasized the strong adoption of AMD Instinct MI300X accelerators by major industry players such as Microsoft Azure, Meta, Dell Technologies, HPE, and Lenovo. He attributed this adoption to the exceptional performance and value proposition these accelerators offer. “With our updated annual cadence of products, we are relentless in our pace of innovation, providing the leadership capabilities and performance the AI industry and our customers expect to drive the next evolution of data center AI training and inference,” said McCredie.

AMD’s AI Software Ecosystem

Alongside the hardware advances, AMD’s AI software ecosystem is also maturing. The AMD ROCm 6 open software stack continues to evolve, improving the performance of AMD Instinct MI300X accelerators. According to AMD, customers running Meta Llama-3 70B on servers equipped with eight MI300X accelerators and ROCm 6 can achieve a significant improvement in inference performance and token generation. Similarly, AMD says a single MI300X accelerator with ROCm 6 delivers the best performance on Mistral-7B. In addition, Hugging Face, one of the largest repositories for AI models, is now testing 700,000 of its models nightly to ensure compatibility with AMD Instinct MI300X accelerators. AMD is also continuing its work on integration with popular AI frameworks such as PyTorch, TensorFlow, and JAX.

During the keynote, AMD revealed details of its new accelerators and the annual-cadence roadmap. The new AMD Instinct MI325X accelerator will feature 288 GB of HBM3E memory and 6 terabytes per second of memory bandwidth, using the same Universal Baseboard server design as the MI300 series. It will be generally available in Q4 2024, promising industry-leading memory capacity and bandwidth.

The AMD Instinct MI350X accelerator, the first in the MI350 series, will be based on the AMD CDNA 4 architecture and available in 2025. It will also use the Universal Baseboard server design and be built on advanced 3nm process technology. It will support the FP4 and FP6 AI datatypes and come with up to 288 GB of HBM3E memory.

A Look Ahead to 2026

Looking ahead to 2026, the AMD Instinct MI400 series will be powered by the AMD CDNA ‘Next’ architecture, which AMD says will provide the latest features and capabilities to improve performance and efficiency for both inference and large-scale AI training.

AMD also highlighted the growing demand for its MI300X accelerators, with numerous partners and customers integrating them into their AI workloads. Microsoft Azure is using the accelerators for Azure OpenAI services and the new Azure ND MI300X V5 virtual machines. Dell Technologies is incorporating them into the PowerEdge XE9680 for enterprise AI workloads. Supermicro is providing multiple solutions with AMD Instinct accelerators, while Lenovo is using them to power Hybrid AI innovation with the ThinkSystem SR685a V3. HPE is using the accelerators to speed up AI workloads in the HPE Cray XD675.

This ambitious roadmap underscores AMD’s commitment to maintaining its leadership in AI performance and memory capabilities, so that its accelerators continue to meet the evolving demands of the AI industry. The annual cadence of new products reflects AMD’s drive for innovation, aiming to propel the development of next-generation AI models and redefine the standards for data center AI training and inference.