Researchers at UNIST have developed an AI technology capable of reconstructing three-dimensional (3D) representations of unfamiliar objects manipulated with both hands, as well as simulated surgical scenes involving intertwined hands and medical instruments. This advance enables highly accurate augmented reality (AR) visualizations and further enhances real-time interaction capabilities.

Led by Professor Seungryul Baek of the UNIST Graduate School of Artificial Intelligence, the team introduced Bimanual Interaction 3D Gaussian Splatting (BIGS), an AI model that can visualize complex interactions between hands and objects in 3D using only a single RGB video as input.

This technology allows for the real-time reconstruction of intricate hand-object dynamics, even when the objects are unfamiliar or partially obscured. The study is published on the arXiv preprint server.

Conventional approaches in this area have been limited to recognizing only one hand at a time or handling only pre-scanned objects, which restricts their applicability in realistic AR and VR environments.

In contrast, BIGS can reliably predict complete object and hand shapes, even in situations where parts are hidden or occluded, and can do so without depth sensors or multiple cameras, relying solely on a single RGB camera.

The core of this AI model is 3D Gaussian Splatting, a technique that represents object shapes as a cloud of points with smooth Gaussian distributions.

Unlike point cloud methods that produce sharp boundaries, Gaussian Splatting enables natural reconstruction of contact surfaces and complex interactions.
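
The difference between a soft Gaussian primitive and a hard-edged point can be illustrated with a minimal NumPy sketch. This is not the BIGS implementation; the function names, scales, and radius below are assumptions chosen for illustration. It builds a covariance as R · diag(s²) · Rᵀ, evaluates the unnormalized Gaussian falloff at a few sample positions, and compares it with a binary inside/outside test.

```python
import numpy as np

def covariance(scales, rotation):
    """Build an anisotropic covariance as R * diag(s^2) * R^T."""
    S = np.diag(np.asarray(scales, dtype=float) ** 2)
    return rotation @ S @ rotation.T

def gaussian_weight(points, mean, cov):
    """Unnormalized Gaussian falloff exp(-0.5 * d^T cov^-1 d) for each point."""
    d = np.asarray(points, dtype=float) - mean
    mahalanobis_sq = np.einsum("ni,ij,nj->n", d, np.linalg.inv(cov), d)
    return np.exp(-0.5 * mahalanobis_sq)

def hard_point_weight(points, center, radius):
    """Binary occupancy: 1 inside a small sphere, 0 outside (sharp boundary)."""
    dist = np.linalg.norm(np.asarray(points, dtype=float) - center, axis=1)
    return (dist <= radius).astype(float)

# Illustrative values only (hypothetical, not taken from the paper).
mean = np.zeros(3)
cov = covariance(scales=[0.1, 0.1, 0.1], rotation=np.eye(3))

# Sample positions moving away from the primitive along the x axis.
xs = np.linspace(0.0, 0.4, 5)
samples = np.stack([xs, np.zeros_like(xs), np.zeros_like(xs)], axis=1)

soft = gaussian_weight(samples, mean, cov)             # decays smoothly with distance
hard = hard_point_weight(samples, mean, radius=0.15)   # jumps from 1 to 0

print("soft:", np.round(soft, 4))
print("hard:", hard)
```

The soft weights fall off gradually, so neighboring primitives can blend where a hand touches an object, whereas the binary test switches abruptly at the chosen radius.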

The model further addresses occlusion by aligning multiple hand instances to a canonical Gaussian structure, and it employs a pre-trained diffusion model for score distillation sampling (SDS), allowing it to accurately reconstruct unseen surfaces, including the backs of objects.
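
The general idea behind SDS can be sketched on a toy problem. The code below is an illustration under strong assumptions, not the method used in BIGS: the pre-trained diffusion model is replaced by a hypothetical analytic noise predictor whose only "plausible" output is a fixed `target` vector, the renderer is the identity (the parameters are the "image" itself), and the weighting `1 - alpha_bar` is one assumed choice. Each step noises the current estimate, asks the predictor which noise it sees, and uses the difference between predicted and injected noise as the update direction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the diffusion prior: every plausible image equals `target`.
target = np.array([0.2, 0.8, 0.5, 0.1])

def toy_noise_predictor(x_t, alpha_bar):
    """Exact noise estimate when the data distribution is a point mass at `target`."""
    return (x_t - np.sqrt(alpha_bar) * target) / np.sqrt(1.0 - alpha_bar)

def sds_gradient(x, alpha_bar, rng):
    """One-sample SDS gradient w(t) * (eps_hat - eps), assuming an identity renderer."""
    eps = rng.standard_normal(x.shape)                              # injected noise
    x_t = np.sqrt(alpha_bar) * x + np.sqrt(1.0 - alpha_bar) * eps   # noised estimate
    eps_hat = toy_noise_predictor(x_t, alpha_bar)                   # predicted noise
    weight = 1.0 - alpha_bar                                        # assumed w(t)
    return weight * (eps_hat - eps)

# Start from an uninformed estimate and follow the SDS gradient.
x = np.zeros(4)
for _ in range(200):
    alpha_bar = rng.uniform(0.1, 0.9)   # random noise level for this step
    x = x - 0.5 * sds_gradient(x, alpha_bar, rng)

print(np.round(x, 4))
```

In a full pipeline the same difference term would be backpropagated through a differentiable renderer into the scene parameters, so that views never observed in the video are pulled toward what the diffusion prior considers plausible.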

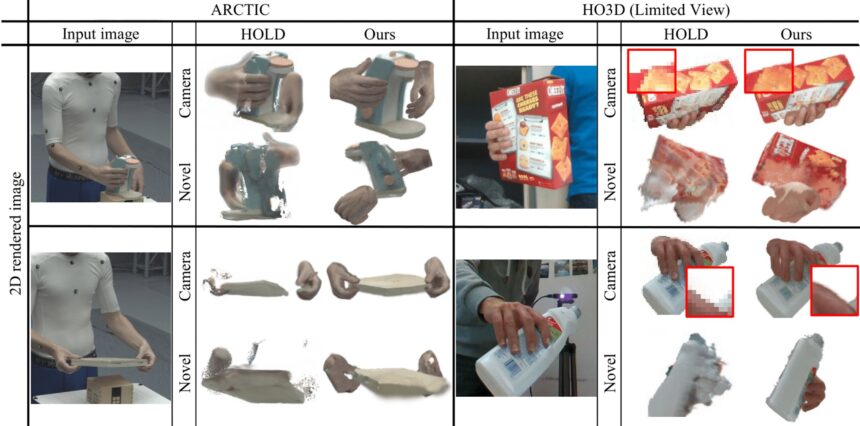

Extensive experiments using international datasets such as ARCTIC and HO3Dv3 demonstrated that BIGS outperforms existing technologies in accurately capturing hand poses, object shapes, contact interactions, and rendering quality. These capabilities hold significant promise for applications in virtual and augmented reality, robotic control, and remote surgical simulations.

This research was conducted with contributions from first author Jeongwan On, along with Kyeonghwan Gwak, Gunyoung Kang, Junuk Cha, Soohyun Hwang, and Hyein Hwang.

Professor Baek remarked, “This advancement is expected to facilitate real-time interaction reconstruction in various fields, including VR, AR, robotic control, and remote surgical training.”

More information:

Jeongwan On et al, BIGS: Bimanual Category-agnostic Interaction Reconstruction from Monocular Videos via 3D Gaussian Splatting, arXiv (2025). DOI: 10.48550/arxiv.2504.09097

Citation:

AI technology reconstructs 3D hand-object interactions from video, even when parts are obscured (2025, June 13)

retrieved 14 June 2025

from https://techxplore.com/information/2025-06-ai-technology-reconstructs-3d-interactions.html
