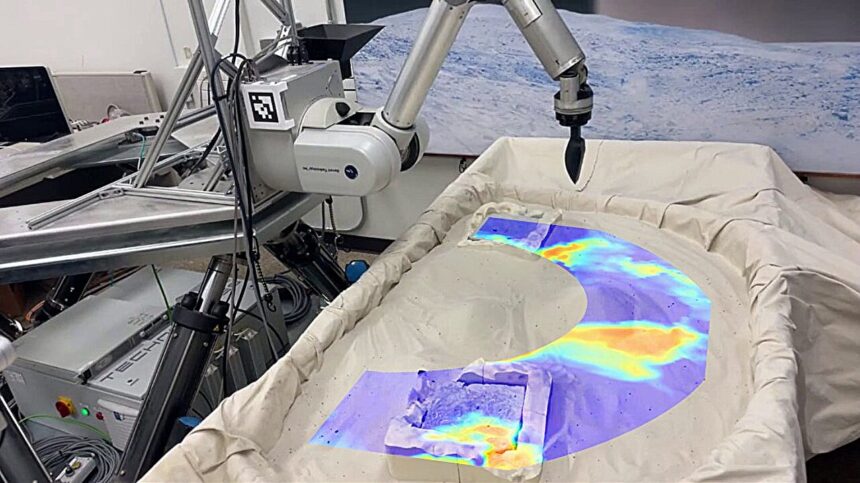

Extraterrestrial landers sent to collect samples from the surface of distant moons and planets have limited time and battery power to complete their mission. Aerospace and computer science engineering researchers at The Grainger College of Engineering, University of Illinois Urbana-Champaign trained a model to autonomously assess and scoop quickly, then watched it demonstrate its skill on a robot at a NASA facility.

Aerospace Ph.D. student Pranay Thangeda said they trained their robotic lander arm to collect scooping data on a variety of materials, from sand to rocks, resulting in a database of 6,700 data points. The two terrains in NASA’s Ocean World Lander Autonomy Testbed at the Jet Propulsion Laboratory were brand new to the model that operated the JPL robotic arm remotely.

The study, “Learning and Autonomy for Extraterrestrial Terrain Sampling: An Experience Report from OWLAT Deployment,” was published in the AIAA Scitech Forum.

“We just had a network link over the internet,” Thangeda said. “I connected to the test bed at JPL and got an image from their robotic arm’s camera. I ran it through my model in real time. The model chose to start with the rock-like material and learned on its first try that it was an unscoopable material.”

Based on what it learned from the image and that first try, the robotic arm moved to another, more promising area and successfully scooped the other terrain, a finer-grained material. Because one of the mission requirements is that the robot scoop a specific volume of material, the JPL team measured the volume of each scoop until the robot finished scooping the full amount.
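The try, learn, and move-on behavior described above can be illustrated with a toy loop. This is only a sketch under simplifying assumptions, not the team's model: a running average per terrain label stands in for the learned model, and every name here (`scoop_until_target`, `sites`, `prior`, `execute_scoop`) is hypothetical.

```python
from collections import defaultdict


def scoop_until_target(sites, prior, execute_scoop, target_volume, max_attempts=20):
    """Scoop at the most promising site until the target volume is reached.

    sites: list of (site_id, terrain_label) candidates (hypothetical format).
    prior: dict mapping terrain_label -> volume predicted before any scoop.
    execute_scoop: callable taking a site_id and returning the measured volume.
    """
    estimate = dict(prior)        # current belief about volume per scoop
    observed = defaultdict(list)  # measured volumes per terrain label
    total = 0.0
    log = []
    for _ in range(max_attempts):
        if total >= target_volume:
            break
        # Pick the site whose terrain currently looks most promising.
        site_id, label = max(sites, key=lambda s: estimate[s[1]])
        volume = execute_scoop(site_id)
        # Online adaptation (assumed rule): replace the prior for this terrain
        # with the average of what has actually been measured on it.
        observed[label].append(volume)
        estimate[label] = sum(observed[label]) / len(observed[label])
        total += volume
        log.append((site_id, volume))
    return total, log
```

With a rock-like site that yields nothing and a sandy site that yields a fixed volume per scoop, the loop abandons the rock after a single failed attempt and keeps scooping sand until the requested total is met.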

Thangeda said that although this work was originally motivated by exploration of ocean worlds, their model can be used on any surface.

“Usually, when you train models based on data, they only work on the same data distribution. The beauty of our method is that we didn’t have to change anything to work on NASA’s test bed because, in our method, we’re adapting online.

“Even though we never saw any of the terrains on the NASA test bed, without any fine-tuning on their data, we managed to deploy the model trained here directly over there, and the model deployment happened remotely, which is exactly what autonomous robot landers will do when deployed on a new surface in space.”

Thangeda’s adviser, Melkior Ornik, is the lead on one of four projects solving different problems. The only commonality between them is that they are all part of the Europa program and use this lander as a test bed to explore different problems.

“We were one of the first to demonstrate something meaningful on their platform designed to mimic a Europa surface. It was great to finally see something you worked on for months being deployed on a real, high-fidelity platform. It was cool to see the model being tested on a completely different terrain and a completely different robot platform that we’d never trained on. It was a boost of confidence in our model and our approach.”

Thangeda said the feedback they received from the JPL team was good, too. “They were happy that we were able to deploy the model without a lot of changes. There were some issues when we were just starting out, but I learned it was because we were the first to try to deploy a model on their platform, so it was network issues and some simple bugs in the software that they had to fix.

“Once we got it working, people were surprised that it was able to learn within like one or two samples. Some didn’t even believe it until they were shown the actual results and methodology.”

Thangeda said one of the main issues he and his team had to overcome was bringing their setup into parity with NASA’s setup.

“Our model was trained on a camera in a particular location with a particular shaped scoop. The location and the shape of the scoop were two things we had to address. To make sure their robot had the exact same scoop shape, we sent them a CAD design and they 3D printed it and attached it to their robot.

“For the camera, we took their RGB-D point cloud information and reprojected it in real time to a different viewpoint, so that it matched what we had in our robot before we sent it to the model. That way, what the model saw was a similar viewpoint to what it saw during training.”
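As a rough illustration of the viewpoint reprojection Thangeda describes, the sketch below renders a colored point cloud as seen from a virtual camera. It assumes a simple pinhole camera model, a known rigid transform between the two camera frames, and nearest-point-wins occlusion handling. The function and parameter names are hypothetical, and nothing here is taken from the team's software.

```python
import numpy as np


def reproject_to_virtual_camera(points, colors, T_virtual_from_sensor, K, width, height):
    """Render a colored point cloud from a virtual camera viewpoint.

    points: (N, 3) XYZ coordinates in the real sensor's frame.
    colors: (N, 3) RGB values, one row per point.
    T_virtual_from_sensor: (4, 4) rigid transform into the virtual camera frame.
    K: (3, 3) pinhole intrinsics of the virtual camera (assumed known).
    Returns (rgb, depth) images; pixels with no point stay zero.
    """
    # Express every point in the virtual camera's coordinate frame.
    n = points.shape[0]
    homogeneous = np.hstack([points, np.ones((n, 1))])
    in_virtual = (T_virtual_from_sensor @ homogeneous.T).T[:, :3]

    # Drop points at or behind the virtual camera.
    in_front = in_virtual[:, 2] > 1e-6
    in_virtual, kept_colors = in_virtual[in_front], colors[in_front]

    # Pinhole projection to integer pixel coordinates.
    x, y, z = in_virtual[:, 0], in_virtual[:, 1], in_virtual[:, 2]
    u = np.round(K[0, 0] * x / z + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * y / z + K[1, 2]).astype(int)

    # Keep only points that land inside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, kept_colors = u[inside], v[inside], z[inside], kept_colors[inside]

    # Sort near to far, then keep the first (nearest) point for each pixel.
    order = np.argsort(z, kind="stable")
    u, v, z, kept_colors = u[order], v[order], z[order], kept_colors[order]
    _, nearest = np.unique(v * width + u, return_index=True)

    depth = np.zeros((height, width))
    rgb = np.zeros((height, width, 3), dtype=colors.dtype)
    depth[v[nearest], u[nearest]] = z[nearest]
    rgb[v[nearest], u[nearest]] = kept_colors[nearest]
    return rgb, depth
```

Choosing `T_virtual_from_sensor` so that the virtual camera sits where the training camera sat, relative to the terrain, gives the model an input image resembling what it saw during training. A real pipeline would likely also fill the holes left between projected points.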

Thangeda said they plan to build on this research for more autonomous excavation and for automating construction work like digging a canal. It’s much easier for humans to do these things. It’s hard for a model to learn to do these things autonomously, because the interactions are very nuanced.

More information:

Pranay Thangeda et al, Learning and Autonomy for Extraterrestrial Terrain Sampling: An Experience Report from OWLAT Deployment, AIAA SCITECH 2024 Forum (2024). DOI: 10.2514/6.2024-1962

Citation:

AI model masters new terrain at NASA facility one scoop at a time (2025, February 7), retrieved 7 February 2025 from https://techxplore.com/information/2025-02-ai-masters-terrain-nasa-facility.html
