A paper published in Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, by researchers in Carnegie Mellon University’s Human-Computer Interaction Institute, introduces EgoTouch, a tool that uses artificial intelligence to control AR/VR interfaces by touching the skin with a finger.

The team ultimately wanted to design a control that would provide tactile feedback using only the sensors that come with a standard AR/VR headset.

OmniTouch, a previous method developed by Chris Harrison, an associate professor in the HCII and director of the Future Interfaces Group, got close. But that method required a special, clunky, depth-sensing camera. Vimal Mollyn, a Ph.D. student advised by Harrison, had the idea to use a machine learning algorithm to train normal cameras to recognize touching.

“Try taking your finger and see what happens when you touch your skin with it. You'll notice that there are these shadows and local skin deformations that only occur when you’re touching the skin,” Mollyn said. “If we can see these, then we can train a machine learning model to do the same, and that is essentially what we did.”

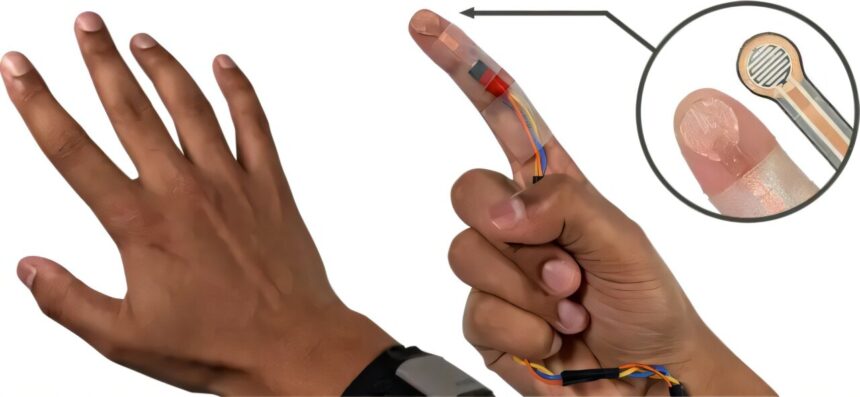

Mollyn collected the data for EgoTouch using a custom touch sensor that ran along the underside of the index finger and the palm. The sensor collected data on different types of touch at different forces while staying invisible to the camera. The model then learned to correlate the visual features of shadows and skin deformations with touch and force, without human annotation.
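The article does not describe how sensor readings were paired with camera frames. As a rough illustration of labeling without human annotation, the sketch below assigns each frame the label implied by the nearest-in-time sensor reading. The `SensorSample` type, the `label_frames` function and both force thresholds are hypothetical and are not taken from the EgoTouch paper.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class SensorSample:
    t: float      # timestamp in seconds
    force: float  # force reading in arbitrary units (hypothetical scale)


# Hypothetical thresholds, chosen only for illustration.
TOUCH_THRESHOLD = 0.1  # below this, treat the finger as not touching
HARD_THRESHOLD = 1.0   # at or above this, treat the touch as "hard"


def label_frames(frame_times, samples):
    """Label each camera frame using the nearest-in-time sensor sample.

    `samples` must be sorted by timestamp and non-empty.
    """
    if not samples:
        raise ValueError("need at least one sensor sample")
    times = [s.t for s in samples]
    labels = []
    for ft in frame_times:
        i = bisect_left(times, ft)
        # The nearest sample is either just before or just after the frame.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(samples)]
        nearest = min(candidates, key=lambda j: abs(times[j] - ft))
        force = samples[nearest].force
        if force < TOUCH_THRESHOLD:
            labels.append("no_touch")
        elif force < HARD_THRESHOLD:
            labels.append("light")
        else:
            labels.append("hard")
    return labels
```

Labels produced this way could then serve as training targets for a vision model, which is the general idea the article describes. The real pipeline may differ substantially.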

The team broadened its training data collection to include 15 users with different skin tones and hair densities, and gathered hours of data across many situations, activities and lighting conditions.

EgoTouch can detect touch with more than 96% accuracy and has a false positive rate of around 5%. It recognizes pressing down, lifting up and dragging. The model can also classify whether a touch was light or hard with 98% accuracy.
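For readers unfamiliar with these metrics, the sketch below uses the standard definitions of accuracy and false positive rate for a binary touch detector. The article does not say exactly how the reported figures were computed, and the counts in the usage example are invented for illustration. They are not results from the paper.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all frames classified correctly."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("no samples")
    return (tp + tn) / total


def false_positive_rate(fp, tn):
    """Fraction of true no-touch frames wrongly reported as touch."""
    negatives = fp + tn
    if negatives == 0:
        raise ValueError("no negative samples")
    return fp / negatives


# Invented counts for illustration only (not data from the paper).
example = {"tp": 48, "tn": 48, "fp": 2, "fn": 2}
example_accuracy = accuracy(**example)
example_fpr = false_positive_rate(example["fp"], example["tn"])
```

The two numbers measure different things: a detector can be highly accurate overall and still report a touch on a noticeable share of frames where no touch occurred.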

“That can be really useful for having a right-click functionality on the skin,” Mollyn said.

Detecting variations in touch could enable developers to mimic touchscreen gestures on the skin. For example, a smartphone can recognize scrolling up or down a page, zooming in, swiping right, or pressing and holding on an icon. To translate this to a skin-based interface, the camera needs to recognize the subtle differences between the type of touch and the force of touch.
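One way an application might turn per-frame touch predictions into the press, drag and lift events mentioned above is a small state machine. The sketch below is an assumption about how such a layer could look, not a description of EgoTouch's implementation. The `touch_events` function, the frame format and the drag threshold are all hypothetical.

```python
import math

# Hypothetical: minimum movement, in pixels, before a drag event is reported.
DRAG_THRESHOLD = 5.0


def touch_events(frames):
    """Convert per-frame (touching, x, y) predictions into touch events.

    Returns a list of ("down" | "drag" | "up", x, y) tuples.
    """
    events = []
    was_touching = False
    last_pos = None
    for touching, x, y in frames:
        if touching and not was_touching:
            # Finger just made contact.
            events.append(("down", x, y))
            last_pos = (x, y)
        elif touching and was_touching:
            # Still in contact: report a drag only after enough movement.
            if math.dist((x, y), last_pos) >= DRAG_THRESHOLD:
                events.append(("drag", x, y))
                last_pos = (x, y)
        elif was_touching and not touching:
            # Finger just lifted.
            events.append(("up", x, y))
            last_pos = None
        was_touching = touching
    return events
```

A light-versus-hard force label could be attached to the "down" event in the same way, for example to distinguish a primary press from a right-click.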

Accuracies were about the same across diverse skin tones and hair densities, and at different areas on the hand and forearm, such as the front of the arm, back of the arm, palm and back of the hand. The system did not perform well on bony areas like the knuckles.

“It's probably because there wasn’t as much skin deformation in those areas,” Mollyn said. “As a user interface designer, what you can do is avoid placing elements on those regions.”

Mollyn is exploring ways to use night vision cameras and nighttime illumination to enable the EgoTouch system to work in the dark. He's also collaborating with researchers to extend this touch-detection method to surfaces other than the skin.

“For the first time, we have a system that just uses a camera that is already in all the headsets. Our models are calibration free, and they work right out of the box,” said Mollyn. “Now we can build off prior work on on-skin interfaces and actually make them real.”

More information:

Vimal Mollyn et al, EgoTouch: On-Body Touch Input Using AR/VR Headset Cameras, Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology (2024). DOI: 10.1145/3654777.3676455

Citation:

AI-based tool creates simple interfaces for virtual and augmented reality (2024, November 13), retrieved 13 November 2024 from https://techxplore.com/information/2024-11-ai-based-tool-simple-interfaces.html
