
Researchers from UCLA and Meta AI have introduced d1, a novel framework that uses reinforcement learning (RL) to significantly enhance the reasoning capabilities of diffusion-based large language models (dLLMs). While most attention has focused on autoregressive models like GPT, dLLMs offer unique advantages. Giving them strong reasoning skills could unlock new efficiencies and applications for enterprises.

dLLMs represent a distinct approach to generating text compared to standard autoregressive models, potentially offering benefits in terms of efficiency and information processing, which could be valuable for various real-world applications.

Understanding diffusion language models

Most large language models (LLMs) like GPT-4o and Llama are autoregressive (AR). They generate text sequentially, predicting the next token based only on the tokens that came before it.

Diffusion language models (dLLMs) work differently. Diffusion models were initially used in image generation models like DALL-E 2, Midjourney and Stable Diffusion. The core idea involves gradually adding noise to an image until it is pure static, and then training a model to meticulously reverse this process, starting from noise and progressively refining it into a coherent picture.

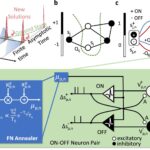

Adapting this concept directly to language was tricky because text is made of discrete units (tokens), unlike the continuous pixel values in images. Researchers overcame this by developing masked diffusion language models. Instead of adding continuous noise, these models work by randomly masking out tokens in a sequence and training the model to predict the original tokens.
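The masking step described above can be illustrated with a minimal sketch. It is not taken from any dLLM codebase: the `[MASK]` string, the `mask_tokens` helper and the 50% mask ratio are all assumptions chosen for illustration. The sketch only shows how a training pair (corrupted input, original tokens to recover) might be built.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, not a real tokenizer's token


def mask_tokens(tokens, mask_ratio, rng):
    """Replace a random subset of tokens with MASK.

    Returns the corrupted sequence and the sorted indices that were masked.
    """
    n_mask = round(mask_ratio * len(tokens))
    masked_idx = sorted(rng.sample(range(len(tokens)), n_mask))
    corrupted = [MASK if i in masked_idx else t for i, t in enumerate(tokens)]
    return corrupted, masked_idx


rng = random.Random(0)  # fixed seed so the example is repeatable
tokens = "the cat sat on the mat".split()
corrupted, masked_idx = mask_tokens(tokens, mask_ratio=0.5, rng=rng)

# The training targets are the original tokens at the masked positions.
targets = [tokens[i] for i in masked_idx]
print(corrupted, targets)
```

A real model would be trained to predict `targets` from `corrupted`, typically with the mask ratio itself sampled at random so that the model sees both lightly and heavily masked inputs.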

This leads to a different generation process compared to autoregressive models. dLLMs start with a heavily masked version of the input text and gradually “unmask” or refine it over several steps until the final, coherent output emerges. This “coarse-to-fine” generation enables dLLMs to consider the entire context simultaneously at each step, as opposed to focusing solely on the next token.
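As a rough illustration of this coarse-to-fine loop, the toy sketch below starts from a fully masked sequence and reveals the most confident predictions at each step. Everything here is hypothetical: `toy_predict` stands in for a real model's forward pass, its confidence scores are invented, and keeping the top-scoring positions is only one possible unmasking strategy, not necessarily the one used by LLaDA or Mercury.

```python
MASK = "[MASK]"  # hypothetical mask symbol
TARGET = ["diffusion", "models", "refine", "text", "in", "parallel"]


def toy_predict(seq):
    """Hypothetical stand-in for a dLLM forward pass.

    Returns {position: (token, confidence)} for every masked position.
    Confidence is faked: positions next to revealed tokens score higher.
    """
    preds = {}
    for i, tok in enumerate(seq):
        if tok == MASK:
            neighbors = [seq[j] for j in (i - 1, i + 1) if 0 <= j < len(seq)]
            revealed = sum(t != MASK for t in neighbors)
            preds[i] = (TARGET[i], 0.5 + 0.2 * revealed - 0.01 * i)
    return preds


def generate(length, steps):
    """Iteratively unmask a sequence, a few positions per step."""
    seq = [MASK] * length
    per_step = -(-length // steps)  # ceiling division
    history = [list(seq)]
    while MASK in seq:
        preds = toy_predict(seq)  # every masked position is scored at once
        best = sorted(preds, key=lambda i: preds[i][1], reverse=True)[:per_step]
        for i in best:  # commit only the most confident predictions
            seq[i] = preds[i][0]
        history.append(list(seq))
    return seq, history


final, history = generate(length=6, steps=3)
for state in history:
    print(" ".join(state))
```

The point of the sketch is the control flow: each step looks at the whole partially revealed sequence and can fill in several positions together, which is where the parallelism comes from.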

This difference gives dLLMs potential advantages, such as improved parallel processing during generation, which could lead to faster inference, especially for longer sequences. Examples of this model type include the open-source LLaDA and the closed-source Mercury model from Inception Labs.

“While autoregressive LLMs can use reasoning to enhance quality, this improvement comes at a severe compute cost with frontier reasoning LLMs incurring 30+ seconds in latency to generate a single response,” Aditya Grover, assistant professor of computer science at UCLA and co-author of the d1 paper, told VentureBeat. “In contrast, one of the key benefits of dLLMs is their computational efficiency. For example, frontier dLLMs like Mercury can outperform the best speed-optimized autoregressive LLMs from frontier labs by 10x in user throughputs.”

Reinforcement learning for dLLMs

Despite their advantages, dLLMs still lag behind autoregressive models in reasoning abilities. Reinforcement learning has become crucial for teaching LLMs complex reasoning skills. By training models based on reward signals (essentially rewarding them for correct reasoning steps or final answers), RL has pushed LLMs toward better instruction-following and reasoning.

Algorithms such as Proximal Policy Optimization (PPO) and the more recent Group Relative Policy Optimization (GRPO) have been central to applying RL effectively to autoregressive models. These methods typically rely on calculating the probability (or log probability) of the generated text sequence under the model’s current policy to guide the learning process.

This calculation is straightforward for autoregressive models due to their sequential, token-by-token generation. However, for dLLMs, with their iterative, non-sequential generation process, directly computing this sequence probability is difficult and computationally expensive. This has been a major roadblock to applying established RL techniques to improve dLLM reasoning.
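To see why the autoregressive case is easy, recall that the chain rule factors a sequence's probability into per-token conditionals, so the log probability is just a sum of per-token log probabilities. The short sketch below uses made-up conditional probabilities (the values in `probs` are assumptions, not model outputs) to show the arithmetic.

```python
import math


def sequence_log_prob(token_probs):
    """Sum of log p(x_t | x_<t) over the generated tokens."""
    return sum(math.log(p) for p in token_probs)


# Hypothetical conditional probabilities the model assigned to each
# token it generated, in order.
probs = [0.5, 0.25, 0.8]
logp = sequence_log_prob(probs)

# Exponentiating recovers the product 0.5 * 0.25 * 0.8.
print(logp, math.exp(logp))
```

A masked diffusion model has no single left-to-right factorization like this, because tokens are revealed in varying orders over several steps, which is why the quantity is harder to obtain there.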

The d1 framework tackles this challenge with a two-stage post-training process designed specifically for masked dLLMs:

- Supervised fine-tuning (SFT): First, the pre-trained dLLM is fine-tuned on a dataset of high-quality reasoning examples. The paper uses the “s1k” dataset, which contains detailed step-by-step solutions to problems, including examples of self-correction and backtracking when errors occur. This stage aims to instill foundational reasoning patterns and behaviors into the model.

- Reinforcement learning with diffu-GRPO: After SFT, the model undergoes RL training using a novel algorithm called diffu-GRPO. This algorithm adapts the principles of GRPO to dLLMs. It introduces an efficient method for estimating log probabilities while avoiding the costly computations previously required. It also incorporates a clever technique called “random prompt masking.”

During RL training, parts of the input prompt are randomly masked in each update step. This acts as a form of regularization and data augmentation, allowing the model to learn more effectively from each batch of data.
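Two of the ideas above can be sketched in a few lines. The first function shows the group-relative advantage that gives GRPO its name: several completions are sampled for one prompt and each reward is normalized against the group's mean and spread. The second shows random prompt masking as a simple per-token coin flip. This is an illustrative sketch, not the d1 authors' code: the `[MASK]` symbol, the 15% mask probability, the example rewards and the use of the population standard deviation are all assumptions.

```python
import random
import statistics

MASK = "[MASK]"  # hypothetical mask symbol


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and std of its group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations differ
    return [(r - mean) / (std + eps) for r in rewards]


def randomly_mask_prompt(prompt_tokens, p_mask, rng):
    """Independently mask each prompt token with probability p_mask."""
    return [MASK if rng.random() < p_mask else t for t in prompt_tokens]


# Hypothetical rewards for four sampled answers to the same prompt
# (1.0 = correct final answer, 0.0 = incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

# A fresh random mask per update step gives the model a slightly
# different view of the same prompt each time.
rng = random.Random(0)
prompt = "what is 12 times 7 ?".split()
views = [randomly_mask_prompt(prompt, p_mask=0.15, rng=rng) for _ in range(3)]
print(advantages, views)
```

Because advantages are computed relative to the group, no separate value network is needed, and answers that beat the group average receive a positive learning signal while the rest receive a negative one.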

d1 in real-world applications

The researchers applied the d1 framework to LLaDA-8B-Instruct, an open-source dLLM. They fine-tuned it using the s1k reasoning dataset for the SFT stage. They then compared several versions: the base LLaDA model, LLaDA with only SFT, LLaDA with only diffu-GRPO and the full d1-LLaDA (SFT followed by diffu-GRPO).

These models were tested on mathematical reasoning benchmarks (GSM8K, MATH500) and logical reasoning tasks (4×4 Sudoku, Countdown number game).

The results showed that the full d1-LLaDA consistently achieved the best performance across all tasks. Impressively, diffu-GRPO applied alone also significantly outperformed SFT alone and the base model.

“Reasoning-enhanced dLLMs like d1 can fuel many different kinds of agents for enterprise workloads,” Grover said. “These include coding agents for instantaneous software engineering, as well as ultra-fast deep research for real-time strategy and consulting… With d1 agents, everyday digital workflows can become automated and accelerated at the same time.”

Interestingly, the researchers observed qualitative improvements, especially when generating longer responses. The models began to exhibit “aha moments,” demonstrating self-correction and backtracking behaviors learned from the examples in the s1k dataset. This suggests the model isn’t just memorizing answers but learning more robust problem-solving strategies.

Autoregressive models have a first-mover advantage in terms of adoption. However, Grover believes that advances in dLLMs can change the dynamics of the playing field. For an enterprise, one way to decide between the two is to ask whether its application is currently bottlenecked by latency or cost constraints.

According to Grover, reasoning-enhanced dLLMs such as d1 can help in one of two complementary ways:

- If an enterprise is currently unable to migrate to a reasoning model based on an autoregressive LLM, reasoning-enhanced dLLMs offer a plug-and-play alternative that allows enterprises to experience the superior quality of reasoning models at the same speed as a non-reasoning autoregressive LLM.

- If the enterprise application allows for a larger latency and cost budget, d1 can generate longer reasoning traces using the same budget and further improve quality.

“In other words, d1-style dLLMs can Pareto-dominate autoregressive LLMs on the axis of quality, speed, and cost,” Grover said.
